Heterogeneous Computing

In the early stages of computing, systems predominantly featured central processing units (CPUs) designed for running general-purpose programs. In recent years, however, computers have increasingly incorporated specialized processing units known as accelerators. A heterogeneous computing system contains different types of processing units or devices, each often optimized for specific tasks. The most common combination is CPUs paired with graphics processing units (GPUs).

In supercomputing, GPUs are the predominant acceleration devices. As of 2023, over a third of the world's top 500 high-performance computing (HPC) systems are heterogeneous, pairing CPUs with GPUs in their compute nodes. Frontier, the leading exascale supercomputer, relies heavily on GPUs, which provide virtually all of its computing power.

However, GPUs are not the only accelerators available for offloading CPU workloads. Field-programmable gate array (FPGA) technology has recently matured to the point where it rivals GPUs in performance per watt. The latest FPGA devices are equipped with high bandwidth memory (HBM) and connect to the host over a high-speed Peripheral Component Interconnect Express (PCIe) bus.

Memory on GPU and FPGA

The table below compares the memory of two GPUs and two FPGA accelerator cards. Memory capacity and bandwidth are critical factors for HPC applications.

| Device | Type | Memory type | Memory size [GB] | Peak bandwidth [GB/s] |
|---|---|---|---|---|
| NVIDIA A100 | GPU | HBM2E | 80 | 2,000 |
| NVIDIA V100 | GPU | HBM2 | 32 | 900 |
| AMD Alveo U55C | FPGA | HBM2 | 16 | 460 |
| AMD Alveo U250 | FPGA | DDR4 | 64 | 77 |
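One way to read the table is to ask how long each device needs to stream its entire memory once at peak bandwidth, which is simply memory size divided by bandwidth. The sketch below does that arithmetic with the values listed in the table. It assumes the quoted peak figures are actually reached, which real kernels rarely achieve, so the results are best read as lower bounds.

```python
# Memory size [GB] and peak bandwidth [GB/s], taken from the table.
DEVICES = {
    "NVIDIA A100": (80, 2000),
    "NVIDIA V100": (32, 900),
    "AMD Alveo U55C": (16, 460),
    "AMD Alveo U250": (64, 77),
}


def full_sweep_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Time in milliseconds to read the whole memory once at peak bandwidth."""
    return size_gb / bandwidth_gb_s * 1000.0


if __name__ == "__main__":
    for name, (size, bw) in DEVICES.items():
        print(f"{name:16s} {full_sweep_ms(size, bw):7.1f} ms")
```

With these numbers the three HBM-based devices each need roughly 35 to 40 ms for a full sweep, while the DDR4-based U250 needs about 830 ms, more than 20 times longer: its larger capacity comes at the cost of much lower bandwidth.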

Host and device

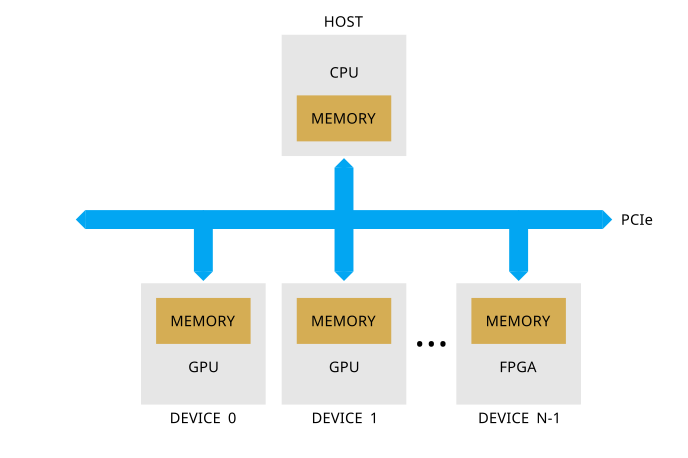

In heterogeneous computing systems, host and device refer to distinct components with different roles and functionalities.

The host is the main processing unit in a heterogeneous system, usually a CPU. It manages the overall system, executes control tasks, and coordinates the operation of the other devices.

A device is a specialized processing unit such as a GPU, an FPGA, or another accelerator. Devices are designed to excel at specific types of computation, such as graphics rendering, machine learning, or signal processing. They are often optimized for parallel processing and can handle many operations concurrently, which makes them suitable for workloads that can be divided into parallelizable units. The host CPU offloads specific computations to the devices and uses their parallel processing capabilities to improve overall system performance.

Each device processes data within its own memory and address space. In general, the host and the devices cannot directly address each other's memory. Instead, the host accesses device memory through dedicated interfaces provided by the device's runtime (for example, CUDA for NVIDIA GPUs), which transfer data over the bus connecting the device memory to the host's main memory. The figure above illustrates the general architecture of a heterogeneous computing system.
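Because the two address spaces are separate, offloading follows a fixed pattern: allocate buffers on the device, copy inputs in, launch a kernel, and copy results back. The plain-Python sketch below models that pattern. The `Device` class, its methods, and `vadd_kernel` are hypothetical stand-ins invented for illustration, not a real runtime API; real runtimes such as CUDA, OpenCL, or XRT expose the same steps under their own names and signatures.

```python
class Device:
    """Hypothetical accelerator model (not a real runtime API).

    The device owns a private memory; the host only holds opaque
    buffer handles and must copy data in and out explicitly.
    """

    def __init__(self, name):
        self.name = name
        self._memory = {}  # device address space: handle -> buffer
        self._next_handle = 0

    def allocate(self, size):
        handle = self._next_handle
        self._next_handle += 1
        self._memory[handle] = [0.0] * size
        return handle  # the host gets a handle, never a pointer

    def copy_from_host(self, handle, host_data):
        # Stands in for a host-to-device transfer over the bus (e.g. PCIe).
        self._memory[handle] = list(host_data)

    def copy_to_host(self, handle):
        # Stands in for a device-to-host transfer; returns a host-side copy.
        return list(self._memory[handle])

    def run_kernel(self, kernel, *handles):
        # The kernel only ever sees device-side buffers.
        kernel(*(self._memory[h] for h in handles))


def vadd_kernel(a, b, out):
    """Element-wise vector addition, executed 'on the device'."""
    for i in range(len(out)):
        out[i] = a[i] + b[i]


def offload_vadd(device, x, y):
    n = len(x)
    d_x, d_y, d_out = device.allocate(n), device.allocate(n), device.allocate(n)
    device.copy_from_host(d_x, x)  # host -> device
    device.copy_from_host(d_y, y)  # host -> device
    device.run_kernel(vadd_kernel, d_x, d_y, d_out)
    return device.copy_to_host(d_out)  # device -> host


if __name__ == "__main__":
    fpga = Device("fpga0")
    print(offload_vadd(fpga, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
    # prints [11.0, 22.0, 33.0]
```

The two explicit copies on the way in and the one on the way out are exactly the bus transfers described above; in practice their cost is why offloading pays off only when the kernel does enough work per transferred byte.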

Device-to-device communication

Certain technologies allow devices to communicate directly, without routing data through the host. For instance, NVIDIA NVLink provides a high-speed GPU-to-GPU interconnect, while NVIDIA GPUDirect remote direct memory access (RDMA) establishes a direct data path between GPUs and other devices over the PCIe bus. Likewise, AMD has introduced the Infinity Fabric interconnect technology, enabling direct connections among CPUs, GPUs, FPGAs, and other third-party devices.
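A rough cost model shows why skipping the host matters. Without a direct path, data moving from device A to device B is staged through host memory and crosses the host interconnect twice; with a peer-to-peer link it crosses once. The sketch below assumes the two staged hops run back to back (no chunked pipelining) and ignores latency, setup, and contention; the function names and the bandwidth values in the example are hypothetical.

```python
def staged_copy_seconds(size_gb, host_link_gb_s):
    """Device A -> host memory -> device B: two serialized crossings of the host link."""
    return 2 * size_gb / host_link_gb_s


def direct_copy_seconds(size_gb, peer_link_gb_s):
    """Device A -> device B over a direct peer-to-peer link: a single crossing."""
    return size_gb / peer_link_gb_s


if __name__ == "__main__":
    # Hypothetical example: 1 GB payload, 16 GB/s for both links.
    print(staged_copy_seconds(1.0, 16.0))  # 0.125
    print(direct_copy_seconds(1.0, 16.0))  # 0.0625
```

Under these assumptions a direct path halves the transfer time even when it runs at the same speed as the host link, and a dedicated interconnect that is faster than PCIe widens the gap further.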

FPGA Applications and Benefits

FPGA devices are versatile tools with applications across many domains. In the high-performance computing ecosystem, they accelerate machine learning algorithms, particularly deep neural network computations. In data analytics, they speed up the analysis of large datasets and enhance the performance of computational storage systems, and in finance they enable algorithmic trading with nanosecond-scale latencies. As smart network interfaces, they optimize network throughput and strengthen cybersecurity measures. FPGAs are also commonly used for real-time video and audio transcoding and processing.

Compared to well-established computing platforms such as CPUs and GPUs, FPGA technology offers the following advantages:

- Application-specific acceleration: FPGAs can be reprogrammed to implement custom hardware accelerators tailored to specific workloads.
- Dynamic reconfiguration: FPGAs can be reconfigured at runtime to adapt to changing computational requirements, which is beneficial when workloads vary over time.
- Energy efficiency: FPGAs often consume less power than GPUs. Where energy efficiency is crucial, such as in data centres, FPGAs can provide a more power-efficient solution.
- Low latency: Because they implement custom hardware, FPGAs reduce processing delays, making them suitable for real-time processing tasks.
- Task-level parallelism: FPGAs can exploit parallelism at multiple levels, including task-level parallelism, which enables efficient execution of workloads with irregular or dynamic parallelism.
- Data streaming and memory access: FPGAs can be optimized for memory-intensive tasks with spatial and temporal locality patterns.

The next section examines FPGA architecture in more detail.

Further reading

When preparing for the workshop, we found the following sources helpful: