Parallel Processing of Video Sequences

Many problems can be divided into independent sub-problems that can be tackled simultaneously. Such problems are called "embarrassingly parallel".

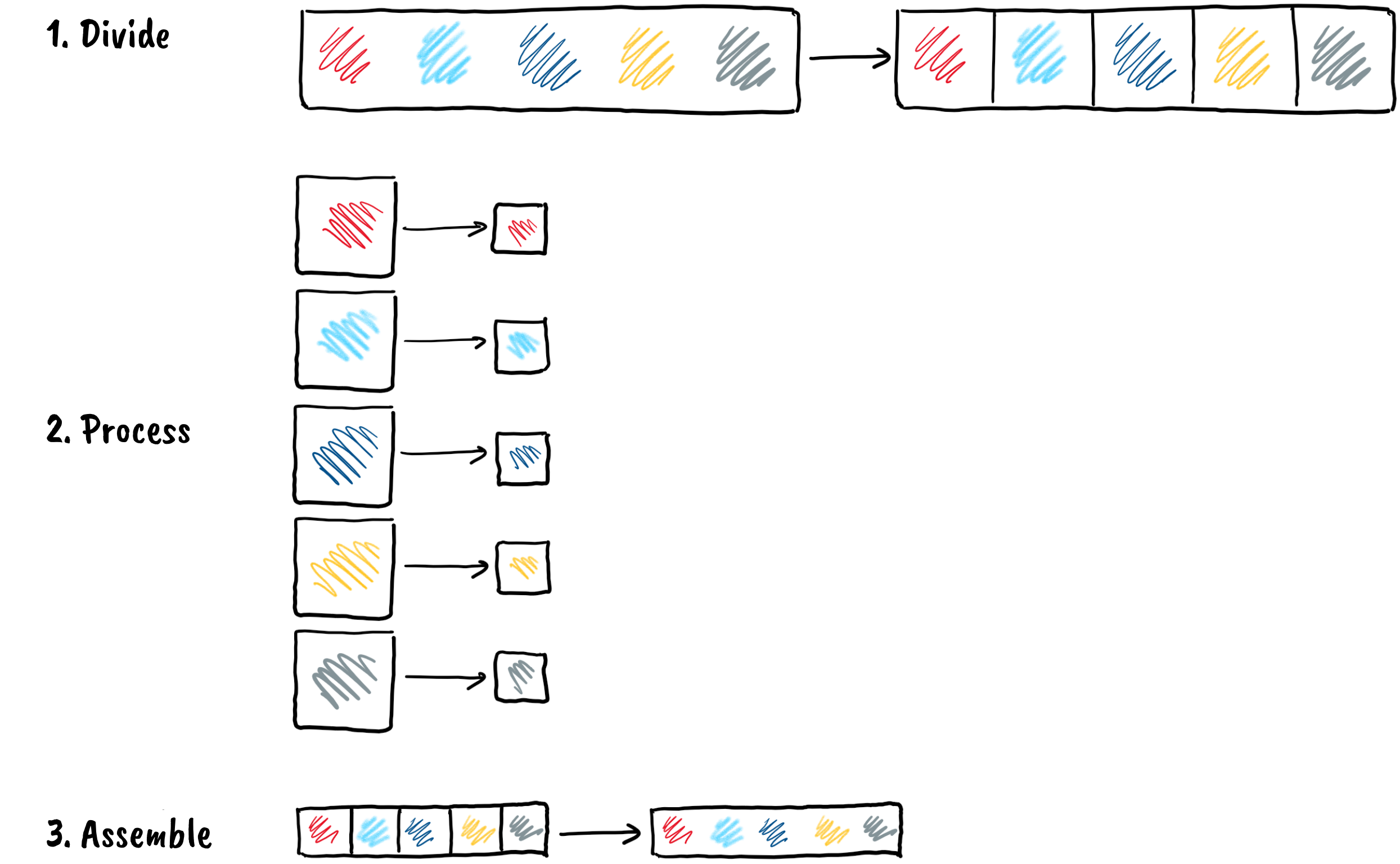

A typical example is video processing. To speed it up, we can proceed as follows:

- first, we divide the video using one core,

- then we process each segment separately on its own core, and

- finally, we assemble the processed segments back into a whole on one core.

This approach pays off because the pieces are processed in parallel, each on its own core. If we split the video into N equal segments in the first step, the second step takes roughly 1/N of the time needed to process the entire video on a single core. Splitting and reassembling add time that a single-core run does not need. Even so, the overall improvement is still significant.
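The estimate can be written as a formula. The symbols are introduced here only for illustration: T_1 is the time to process the whole video on one core, N is the number of segments, and T_split and T_merge are the times for the first and third steps.

```latex
T_N \approx T_{\mathrm{split}} + \frac{T_1}{N} + T_{\mathrm{merge}},
\qquad
S = \frac{T_1}{T_N} \le N .
```

The example below uses five segments, so the ideal processing time in the second step is T_1/5. The speedup S gets close to 5 only when splitting and merging take little time compared with processing. Here both steps only copy data without re-encoding.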

First, make sure that the video file llama.mp4¹ is on the cluster.

Step 1: Divide

We want to divide the video llama.mp4 into smaller pieces, which we will then process in parallel. First, load the FFmpeg module if it is not already loaded:

$ module load FFmpeg


Then we divide the video into 20-second segments using the following command:

$ srun --ntasks=1 ffmpeg \
-y -i llama.mp4 -codec copy -f segment -segment_time 20 \
-segment_list parts.txt part-%d.mp4


where

- -codec copy copies the audio and video streams to the output files exactly as they are, without re-encoding. Because of this, ffmpeg can only cut at keyframes, so the pieces are approximately, not exactly, 20 seconds long,

- -f segment selects the segment muxer, which splits the input file into pieces,

- -segment_time 20 gives the desired duration of each piece in seconds,

- -segment_list parts.txt stores the list of generated pieces in the file parts.txt, and

- part-%d.mp4 gives the names of the output files, where %d is a placeholder that ffmpeg replaces with the sequence number of the piece.

Once the process is complete, the working directory will contain the original video, the list of segments in parts.txt, and the segments labeled part-0.mp4 to part-4.mp4.

$ ls

llama.mp4 part-0.mp4 part-1.mp4 part-2.mp4 part-3.mp4 part-4.mp4 parts.txt

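Why five pieces? Assume llama.mp4 is 90 seconds long; the ffmpeg status line in the exercise below reports time=00:01:30.00. Ignoring keyframe placement, 20-second segments then give four full pieces and one 10-second remainder. The shell arithmetic below hard-codes the duration as an assumption and rounds the division up:

```shell
# Assumed duration of llama.mp4 in seconds and the -segment_time value used above
duration=90
segment_time=20

# Ceiling division: number of pieces needed to cover the whole video
segments=$(( (duration + segment_time - 1) / segment_time ))
echo "segments: $segments"    # prints: segments: 5
echo "names: part-0.mp4 ... part-$((segments - 1)).mp4"
```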

Step 2: Process

We process each segment in the same way. For example, the following command changes the resolution of the segment part-0.mp4 to 640×360 and saves the result to the file out-part-0.mp4. Here -codec:a copy copies the audio stream unchanged and -filter:v scale=640:360 scales the video:

$ srun --ntasks=1 ffmpeg \
-y -i part-0.mp4 -codec:a copy -filter:v scale=640:360 out-part-0.mp4


To request resources and run a task on the assigned compute node, we normally use the srun command. Typing one srun command per segment quickly becomes tedious when there are many segments. Instead, we can write a batch script, submitted with sbatch, that runs the commands for us.

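Below is a minimal sketch of what ffmpeg1.sh might contain, written to disk with a here-document. The job name, log file name and time limit in the #SBATCH lines are assumptions; adjust them to your cluster. The srun lines repeat the command above for each of the five segments.

```shell
# Write a sketch of ffmpeg1.sh; the #SBATCH values are assumptions.
cat > ffmpeg1.sh << 'EOF'
#!/bin/bash
#SBATCH --job-name=ffmpeg1
#SBATCH --output=ffmpeg1.txt
#SBATCH --time=00:10:00

module load FFmpeg

# Process the segments one after another; each srun blocks until its step finishes
srun --ntasks=1 ffmpeg -y -i part-0.mp4 -codec:a copy -filter:v scale=640:360 out-part-0.mp4
srun --ntasks=1 ffmpeg -y -i part-1.mp4 -codec:a copy -filter:v scale=640:360 out-part-1.mp4
srun --ntasks=1 ffmpeg -y -i part-2.mp4 -codec:a copy -filter:v scale=640:360 out-part-2.mp4
srun --ntasks=1 ffmpeg -y -i part-3.mp4 -codec:a copy -filter:v scale=640:360 out-part-3.mp4
srun --ntasks=1 ffmpeg -y -i part-4.mp4 -codec:a copy -filter:v scale=640:360 out-part-4.mp4
EOF
```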

We save the script as ffmpeg1.sh and submit it with the following command:

$ sbatch ./ffmpeg1.sh

Submitted batch job 389552


This does not speed anything up yet, because the script waits for each srun call to finish before moving on to the next one. To send all segments for processing at the same time, we add an & character to the end of each srun line. This runs the command in the background, so the script continues immediately with the next command. At the end of the script, we must then add the wait command, which blocks until all background tasks have completed. Without it, the script would end before the segments were processed, and the job would end with it.
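The effect of & and wait can be tried in any shell, without Slurm. In this sketch, sleep 1 is a stand-in, assumed only for illustration, for one srun ... ffmpeg call. Run one after another, five such commands take at least five seconds. Started in the background and collected with wait, they take about one second.

```shell
# Sequential: each command must finish before the next one starts
start=$(date +%s)
for i in 0 1 2 3 4; do
  sleep 1            # stand-in for: srun ... ffmpeg ... part-$i.mp4
done
seq_time=$(( $(date +%s) - start ))

# Parallel: & starts each command in the background, wait blocks until all have finished
start=$(date +%s)
for i in 0 1 2 3 4; do
  sleep 1 &
done
wait
par_time=$(( $(date +%s) - start ))

echo "sequential: ${seq_time}s, parallel: ${par_time}s"
```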

Each srun call starts one task of our job. In the script header, we therefore request five tasks with --ntasks=5, so Slurm runs at most five tasks at a time, even if the script starts more. Without further options, each srun call would use all five tasks of the allocation and run the same command five times on the same segment, which is not helpful. We therefore add --ntasks=1 and --exclusive to each srun call, so that each call runs a single task on resources of its own.

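Below is a sketch of the parallel version. The file name ffmpeg2.sh, the job name, the log file and the time limit are hypothetical. The header requests five tasks, and every srun line gets --ntasks=1, --exclusive and a trailing &. A final wait closes the script.

```shell
# Write a sketch of the parallel job script; ffmpeg2.sh and the #SBATCH values are assumptions.
cat > ffmpeg2.sh << 'EOF'
#!/bin/bash
#SBATCH --job-name=ffmpeg2
#SBATCH --output=ffmpeg2.txt
#SBATCH --time=00:10:00
#SBATCH --ntasks=5

module load FFmpeg

# Start all five steps in the background; each one uses a single task of the allocation
srun --ntasks=1 --exclusive ffmpeg -y -i part-0.mp4 -codec:a copy -filter:v scale=640:360 out-part-0.mp4 &
srun --ntasks=1 --exclusive ffmpeg -y -i part-1.mp4 -codec:a copy -filter:v scale=640:360 out-part-1.mp4 &
srun --ntasks=1 --exclusive ffmpeg -y -i part-2.mp4 -codec:a copy -filter:v scale=640:360 out-part-2.mp4 &
srun --ntasks=1 --exclusive ffmpeg -y -i part-3.mp4 -codec:a copy -filter:v scale=640:360 out-part-3.mp4 &
srun --ntasks=1 --exclusive ffmpeg -y -i part-4.mp4 -codec:a copy -filter:v scale=640:360 out-part-4.mp4 &
# Do not let the job end until every background step has finished
wait
EOF
```

It is submitted the same way, with sbatch ./ffmpeg2.sh.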

Array of jobs

The method above works, but it is not very user-friendly. If the number of pieces changes, we have to edit or add lines in the script, which invites errors. We can also see that the lines are almost identical except for the number in the file names. Fortunately, Slurm offers a solution for exactly this scenario, called job arrays. Let's see how to use a job array for the example above.

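Below is a sketch of a job-array version. The file name ffmpeg3.sh, the job name and the time limit are hypothetical. The here-document delimiter is quoted ('EOF'), so the current shell writes ${SLURM_ARRAY_TASK_ID} into the file literally instead of expanding it.

```shell
# Write a sketch of an array job script; ffmpeg3.sh and the #SBATCH values are assumptions.
# The quoted 'EOF' keeps ${SLURM_ARRAY_TASK_ID} unexpanded in the written file.
cat > ffmpeg3.sh << 'EOF'
#!/bin/bash
#SBATCH --job-name=ffmpeg3
#SBATCH --output=ffmpeg3-%a.txt
#SBATCH --time=00:10:00
#SBATCH --array=0-4

module load FFmpeg

# Each array task processes the segment whose number equals its array index
srun ffmpeg -y -i part-${SLURM_ARRAY_TASK_ID}.mp4 -codec:a copy -filter:v scale=640:360 out-part-${SLURM_ARRAY_TASK_ID}.mp4
EOF
```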

We have added the --array=0-4 option to the script header. It instructs Slurm to run the script once for each number from 0 to 4, so the range determines the number of array tasks: five in this case. To limit the number of tasks running at the same time to, for instance, three, we write --array=0-4%3.

We no longer write segment numbers into the command. Instead, we use the environment variable $SLURM_ARRAY_TASK_ID, which Slurm sets for each task to one of the numbers from the range given by --array. Each array task is a separate job with a single task, so the srun call no longer needs --ntasks=1. We have also added %a to the name of the log file. Slurm replaces it with the same number, so each task writes to its own log file. We cannot use $SLURM_ARRAY_TASK_ID in the #SBATCH lines, because Slurm processes these options before the variable exists. For options such as the log file name, we therefore use %a instead.
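Each task reads its own number from the environment, and this can be mimicked locally. In this sketch, the for loop plays the role of Slurm. That is an assumption for illustration only: real array tasks run as separate jobs, possibly on different nodes. For each index, the loop starts a fresh shell with SLURM_ARRAY_TASK_ID set. That shell builds the file names at run time, just as the srun line in an array script does. The output file name array-commands.txt is hypothetical.

```shell
# Play the role of Slurm: start one shell per array index with SLURM_ARRAY_TASK_ID set
for id in 0 1 2 3 4; do
  SLURM_ARRAY_TASK_ID=$id sh -c \
    'echo "ffmpeg -y -i part-${SLURM_ARRAY_TASK_ID}.mp4 -codec:a copy -filter:v scale=640:360 out-part-${SLURM_ARRAY_TASK_ID}.mp4"'
done > array-commands.txt

# One line per task, each with its own segment number
cat array-commands.txt
```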

Once all array tasks have finished, the working directory contains the files out-part-0.mp4 to out-part-4.mp4 with the processed segments of the original recording.

Step 3: Assemble

Now we just need to combine the files out-part-0.mp4, ..., out-part-4.mp4 into a single video. For this, ffmpeg needs a list of the segments to merge. Let's create a file named out-parts.txt with the following content:

file out-part-0.mp4
file out-part-1.mp4
file out-part-2.mp4
file out-part-3.mp4
file out-part-4.mp4

We can create it from the existing list parts.txt, which contains the segments of the original video. Rename parts.txt to out-parts.txt, open out-parts.txt in a text editor, and replace every occurrence of the string part with the string file out-part.

Alternatively, we can create a list of individual video segments using the command line and the sed program (stream editor):

$ sed 's/part/file out-part/g' < parts.txt > out-parts.txt

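To see what the sed command does without touching the real files, run it on a small sample. The file names demo-parts.txt and demo-out-parts.txt are hypothetical. The sample assumes that parts.txt lists one segment name per line, which is the flat list format ffmpeg writes for a .txt segment list.

```shell
# Sample segment list: one file name per line, like parts.txt from Step 1
printf 'part-%d.mp4\n' 0 1 2 3 4 > demo-parts.txt

# Turn "part-N.mp4" into "file out-part-N.mp4", the line format the concat demuxer reads
sed 's/part/file out-part/g' < demo-parts.txt > demo-out-parts.txt
cat demo-out-parts.txt
```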

Finally, we assemble the output video out-llama.mp4 from the list of segments in the out-parts.txt file.

$ srun --ntasks=1 ffmpeg -y -f concat -i out-parts.txt -c copy out-llama.mp4


Finally, you can remove the temporary files with the data transfer tool. These are parts.txt, out-parts.txt and the part-*.mp4 and out-part-*.mp4 segments.

Exercise

Here you will find exercises to strengthen your understanding of parallel video processing on the cluster.

Steps 1, 2, 3 All at Once

In the previous sections, we sped up video processing by splitting the task into several pieces, which were executed as parallel tasks. We ran ffmpeg on each piece. Each task used one core and knew nothing about the other segments. This approach works whenever a problem can be split into independent pieces, and it does not require any changes to the processing program.

By default, each task is limited to one processor core. With threads, however, a single task can use several cores, and ffmpeg can use threads for many operations. This means the three steps above can also be done with a single command. We run one task for the whole file, and ffmpeg itself spreads the processing over several threads, depending on the number of cores we assign to it.

So far we have done all the steps with the FFmpeg module. This time we use the ffmpeg_alpine.sif container.

If we haven't already done so, we download the FFmpeg container from the link on the cluster. To use the container, we put apptainer exec ffmpeg_alpine.sif in front of the ffmpeg call. Three programs are now involved in the command:

- srun sends the job to Slurm and runs the program apptainer,

- apptainer runs the container ffmpeg_alpine.sif, and

- inside the container ffmpeg_alpine.sif, the program ffmpeg is started.

$ srun --ntasks=1 --cpus-per-task=5 apptainer exec ffmpeg_alpine.sif ffmpeg \
-y -i llama.mp4 -codec:a copy -filter:v scale=640:360 out123-llama.mp4


ffmpeg now does in one step, and in about the same time, what previously took us three steps. With the --cpus-per-task option, we asked Slurm to reserve 5 processor cores for each task of our job.

While ffmpeg is running, it prints the status on the last line:

frame= 2160 fps=147 q=-1.0 Lsize= 4286kB time=00:01:30.00 bitrate= 390.1kbits/s speed=6.11x

The speed value tells us that encoding runs 6.11 times faster than real-time playback. In other words, a 90-second video such as ours (time=00:01:30.00) takes about 90/6.11 ≈ 14.73 seconds to encode.
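The same estimate can be read straight off the status line. This sketch is an illustration and not part of the original workflow. It uses sed to extract time= and speed= from the line shown above, then uses awk to convert the duration to seconds and divide it by the speed factor.

```shell
# Status line copied from the ffmpeg output above
status='frame= 2160 fps=147 q=-1.0 Lsize= 4286kB time=00:01:30.00 bitrate= 390.1kbits/s speed=6.11x'

# Extract the processed duration (hh:mm:ss.xx) and the speed factor
t=$(printf '%s\n' "$status" | sed -n 's/.*time=\([0-9:.]*\).*/\1/p')
speed=$(printf '%s\n' "$status" | sed -n 's/.*speed=\([0-9.]*\)x.*/\1/p')

# Convert hh:mm:ss.xx to seconds, then divide by the speed factor
encode_secs=$(echo "$t $speed" | awk '{ split($1, p, ":"); s = p[1]*3600 + p[2]*60 + p[3]; printf "%.2f", s / $2 }')
echo "video: $t, speed: ${speed}x, encoding time: ${encode_secs} s"   # encoding time: 14.73 s
```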

Hint

If you had read the ffmpeg documentation carefully before starting, your work would have been much easier. But then you would not have learnt many useful ways of using a computer cluster.