CUDA1

So far, we have learned how a GPU is built and what streaming multiprocessors and CUDA cores are. We are familiar with kernel execution, thread and thread block scheduling, and instruction dispatching. Moreover, we now understand the GPU memory hierarchy. Now is the time to look at the CUDA programming model.

CUDA heterogeneous system

CUDA is a parallel computing platform and programming model with a small set of extensions to the C language. The CUDA programming model provides the two essential features for programming the GPU architectures:

- A way to launch a kernel and organize threads on the GPU.

- A way to transfer data between CPU and GPU and access memory on the GPU.

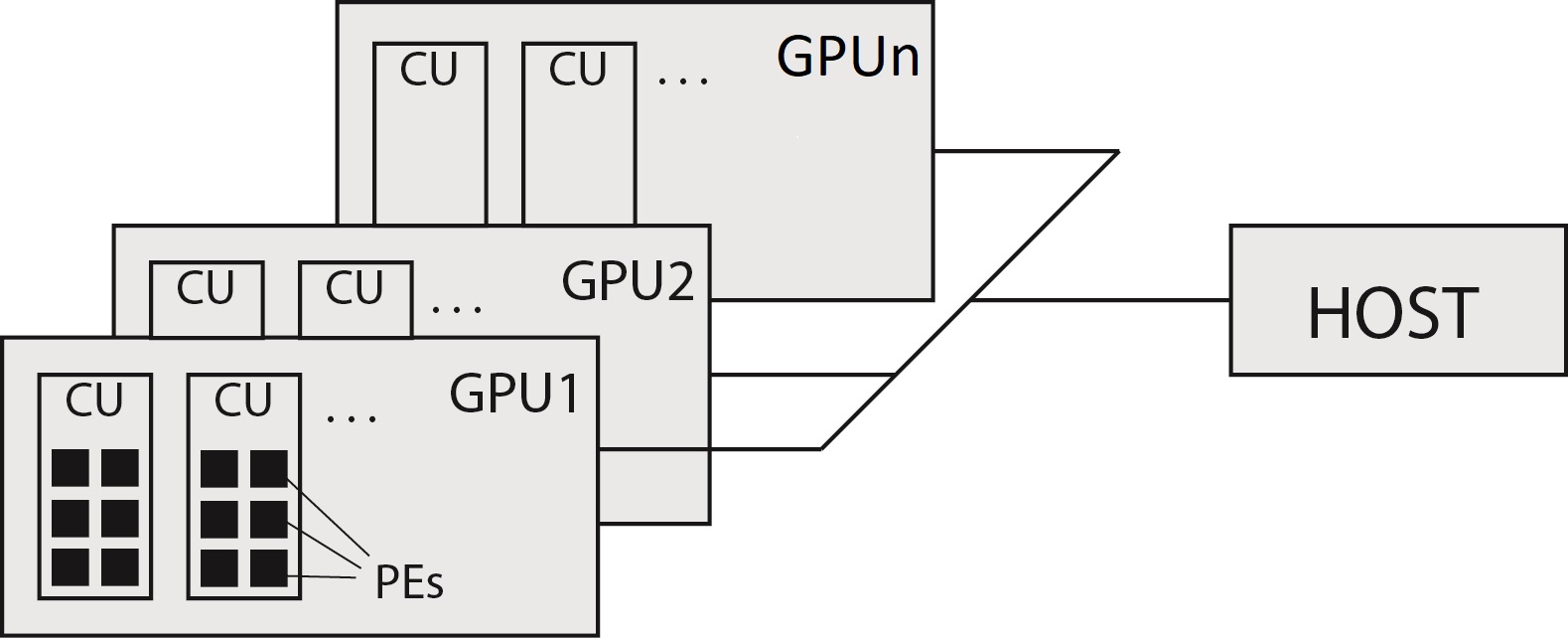

The CUDA programming model enables you to execute applications on heterogeneous computing systems by simply annotating code with a set of extensions to the C programming language. A heterogeneous environment consists of CPUs complemented by GPUs, each with its own memory separated by a PCI-Express bus. A heterogeneous system consists of a single host connected to one or more GPU accelerator devices, each with its own memory separated by a PCI-Express bus. A GPU device is where the CUDA kernels execute. A typical heterogeneous system is shown in the figure below.

Therefore, you should note the following distinction:

- Host — the CPU and its memory (host memory),

- Device — the GPU and its memory (device memory).

A CUDA program consists of the host program that runs on the host (usually, this is a desktop computer with a general-purpose CPU), and one or more kernels that run on GPU devices. Recall that a GPU device comprises several SMs. Each SM consists of tens or hundreds of streaming processors (CUDA cores).

CUDA Thread Execution Model

We are already familiar with the GPU execution model (consisting of SMs, execution blocks, and warp scheduler) that executes and schedules thread blocks, threads and warps. Now is the time to learn its programming counterpart. The most important concept to understand is the CUDA thread execution model that defines how kernels execute.

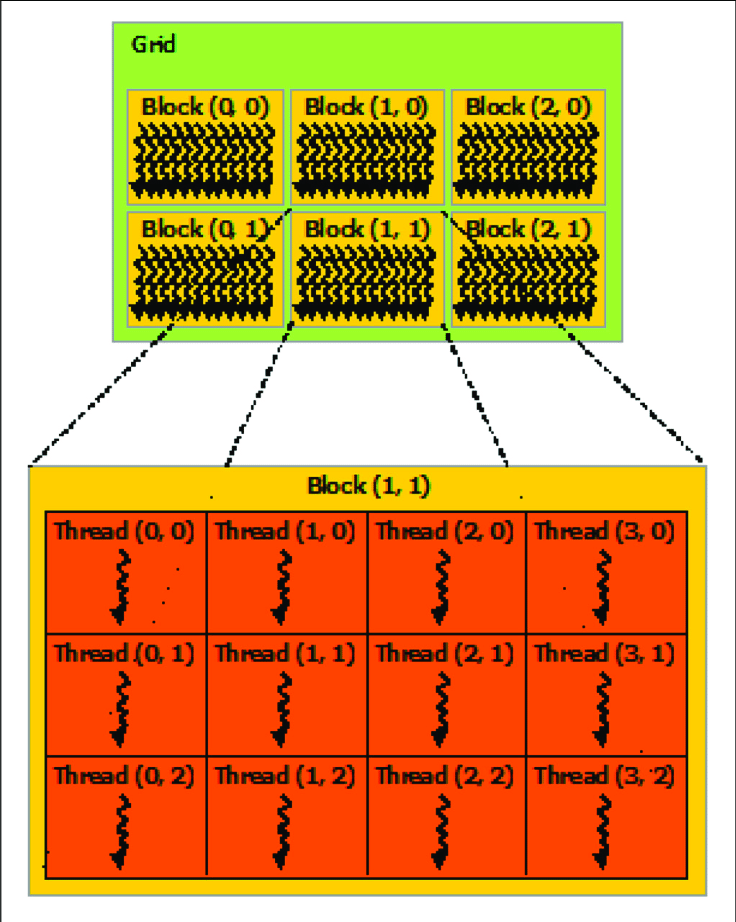

A key component of the CUDA programming model is the kernel — the code that runs on the GPU device. As programmers, we write a kernel as a sequential program. Behind the scenes, CUDA schedules programmer-written kernels on GPU threads, i.e., when a kernel function is launched from the host, execution is moved to a device where a large number of threads are generated and each thread executes the same statements specified by the kernel. Knowing how to organize threads is a critical part of CUDA programming. CUDA exposes a thread hierarchy abstraction to enable you to organize your threads. This is a three-level thread hierarchy decomposed into threads, blocks of threads and grids of blocks, as shown in the figure below.

In other words, each kernel function is executed in a grid of threads. This grid is divided into thread blocks, and each block is further divided into threads. Furthermore, each thread executes the same kernel. The latter means that the kernel is executed in a huge number of instances — each thread executes the code contained in the kernel, but each kernel operates on its own data.

The key concepts to remember are:

-

Grid — All threads spawned by a single kernel launch are collectively called a grid. A kernel (GPU function) is launched as a collection of thread blocks called a grid. A grid is composed of thread blocks. All threads in a grid share the same global memory space. Grid size is defined using the number of blocks. For example grid of size 16 contains 16 thread blocks. If the grid is 1D, all 16 blocks are in one dimension (e.g., 1x6). If the grid is 2D, 6 blocks are placed in two dimensions (e.g., 3x2)

-

Thread Block — A grid is made up of many thread blocks. A thread block is a collection of threads that can cooperate with each other using

- Block-local synchronization and

- Block-local shared memory.

Threads from different blocks cannot cooperate. A thread block executes on an SM, i.e., all threads from a block execute on the same SM.

-

Thread — Single execution unit that runs GPU function (kernel) on GPU. In other words, a thread is a single instance of the kernel executed on a GPU device.

From the host, you define how your algorithm is mapped to the grid of threads and thread blocks based on application data and GPU device capability. The goal is to enable you to focus on the logic of your algorithm in a straightforward fashion (by writing sequential code) and not get bogged down with details of creating and managing thousands of GPU threads. For example, suppose your data-parallel code operates on the individual pixels in an image. In that case, your kernel will contain the code to operate on a single pixel. You will then launch a 2D grid of threads, where the dimensions of the grid and the number of threads in the grid correspond to the image size. Each thread from the grid will operate on the corresponding pixel in the image.

The CUDA programming model is primarily asynchronous. When a kernel has been launched, control is returned immediately to the host, freeing the CPU to perform additional tasks. Also, the GPU computation performed on the GPU can be overlapped with host-device communication.

CUDA thread indexing

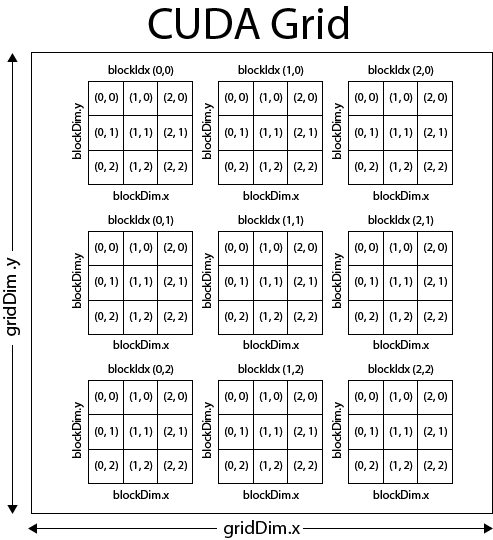

Threads rely on the following two unique coordinates to distinguish themselves from each other:

blockIdx(block index within a grid) andthreadIdx(thread index within a block).

These variables are built-in, pre-initialized variables that can be accessed within kernel functions. When a kernel function is executed, the coordinate variables blockIdx and threadIdx are assigned to each thread by the CUDA runtime. Based on the coordinates, you can assign portions of

data to different threads.

The coordinate variables blockIdx and threadIdx are of type uint3, a CUDA built-in integer vector type. It is a structure containing three unsigned integers. The 1st, 2nd, and 3rd components

are accessible through the fields x, y, and z, respectively. For example

blockIdx.x, blockIdx.y and blockIdx.z are three components of blockIdx, whereas threadIdx.x, threadIdx.y and threadIdx.z are three components of threadIdx.

The dimensions of a grid and a block are specified by the following two built-in variables:

blockDim(block dimension, measured in threads) andgridDim(grid dimension, measured in blocks).

These variables are of type dim3, a CUDA built-in integer vector type used to specify dimensions. Each component in a variable of type dim3 is accessible through its x, y, and z fields, respectively, as blockDim.x, blockDim.y and blockDim.z.

When defining a variable of type dim3, any component left unspecified is initialized to 1.

The figure above shows an example of a thread hierarchy structure with a 2D grid containing 2D blocks and their dimensions.

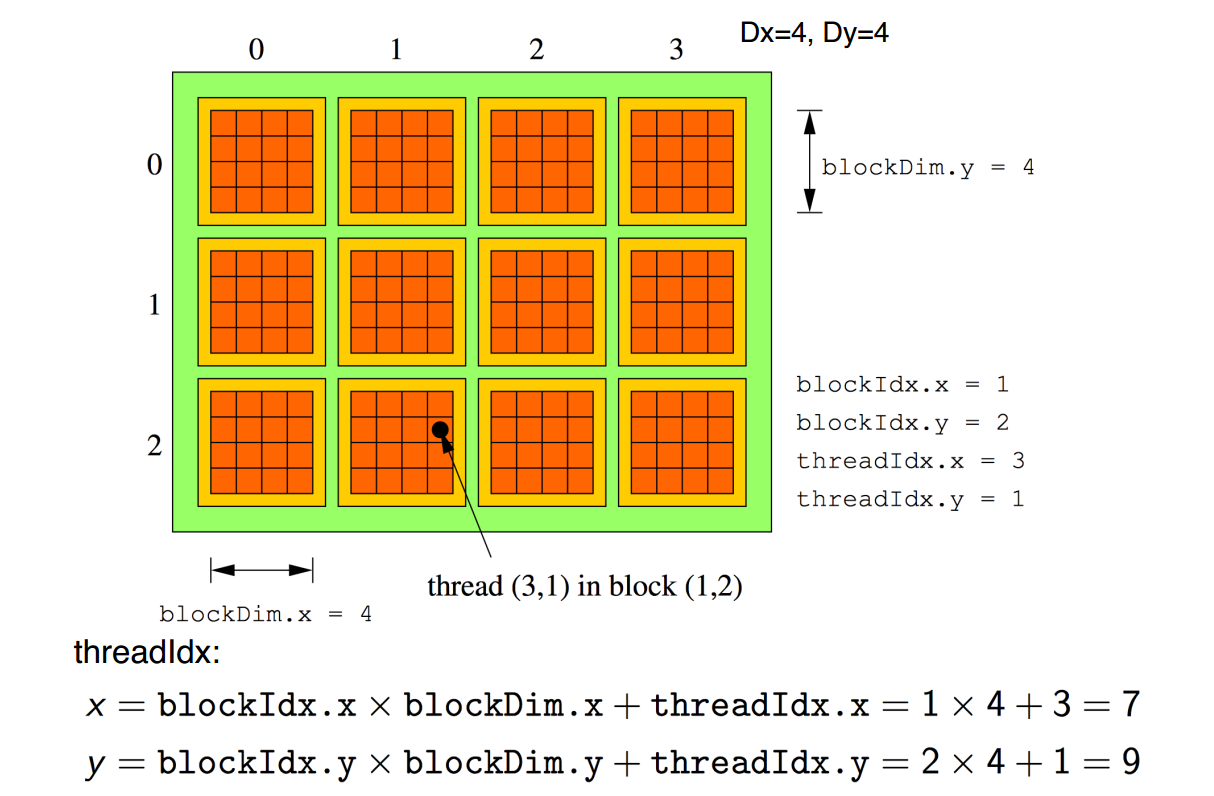

In the image below, we see that this example grid is divided into 12 thread blocks (3×4), each consisting of 16 threads (4×4) for a total of 192 threads for the kernel grid. This image only shows a 2-dimensional grid, but the grid of thread blocks can actually be partitioned into 1, 2 or 3 dimensions. Similarly, thread blocks can be partitioned into individual threads into 1, 2 or 3 dimensions (depending on the Compute Capability of a GPU device). The maximum number of threads that can be assigned to a thread block is defined by the compute capability. Now we can head into the thread indexing in order to figure out the global (unique) thread index in the grid and to map a problem space into a grid. We have to do thread indexing using the above-explained variables. By thread indexing, we get a unique ID for each thread and block in a grid. See the computation of variables x and y in the figure below.

CUDA memory model

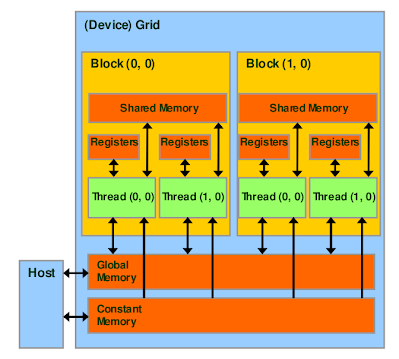

We have already learnt that the CUDA programming model assumes a system composed of a host and a device, each with its own separate memory: host memory and device memory. Kernel functions are executed from the device memory space. The CUDA runtime provides functions to allocate device memory, release device memory, and transfer data between the host and device memory. Also, the CUDA programming model exposes an abstraction of memory hierarchy from the GPU architecture, which is presented in the figure below.

At the bottom of the figure, we see global memory and constant memory. These are the memories that the host (the CPU) can write to and read from. All the threads in a grid can access the global memory, but the global memory has the largest access latency. The constant memory allows read-only access by the device. It provides faster and more parallel data access paths than global memory. Each thread has its own set of registers, and the threads from a thread block share the shared memory, which is private to the thread block. The registers and shared memory can be accessed at a very high speed in a highly parallel manner. However, the small size of these memories poses a problem for large data. A kernel function typically uses registers to hold frequently accessed variables private to each thread. All threads in a block can access variables in the shared memory locations allocated to the block. Shared memories are efficient means for threads to cooperate by sharing the results of their work.

Global Memory Management

The function used to perform GPU memory allocation is cudaMalloc, and its function signature is:

cudaError_t cudaMalloc ( void** devPtr, size_t count )

This function allocates count bytes of global memory on the device. It returns the location of that memory in pointer devPtr. Never try to reference this memory from the host using this pointer! The memory spaces on the host and device are separated. They are not mutually directly accessible. The values contained in the allocated global

memory are not cleared. You are responsible for filling the allocated global memory with data transferred from the host.

Once an application is no longer using allocated global memory, it can be freed using:

cudaError_t cudaFree(void *devPtr)

This function frees the global memory pointed to by devPtr, which has been previously allocated using the cudaMalloc function.

Device memory allocation and deallocation are expensive operations, so applications should reuse device memory whenever possible to minimize the impact on overall performance.

Global Memory Transfers

Once global memory is allocated, you can transfer data to the device from the host. The function used to transfer data between the host and device is:

cudaError_t cudaMemcpy ( void* dst, const void* src, size_t count, cudaMemcpyKind kind )

This function copies count bytes from the memory location src to the memory location dst, with the direction specified by kind, where kind takes one of the following types:

cudaMemcpyHostToHost,cudaMemcpyHostToDevice,cudaMemcpyDeviceToHost,cudaMemcpyDeviceToDevice.

This function exhibits synchronous behaviour because the host application blocks until cudaMemcpy returns and the transfer is complete.

Shared Memory

Physically, each SM contains a small low-latency memory shared by all threads in the thread block currently executing on that SM. Shared memory enables threads within the same thread block to cooperate and can greatly reduce the global memory bandwidth needed by kernels. When a thread block starts executing, a fixed amount of shared memory is allocated to that thread block. Its contents have the same lifetime as the thread block in which it was created. Shared memory accesses are issued per warp. Because the application explicitly manages the contents of shared memory, it is often described as a program-managed cache. You have full control over when data is moved into shared memory and when data is evicted.

A shared memory variable is declared with the __shared__ qualifier. The following code segment statically declares a shared memory 2D integer array.

__shared__ int matrix[size_y][size_x];

If declared inside a kernel function, the scope of this variable is local to the kernel. If declared outside of any kernels in a file, the scope of this variable is global to all kernels.

CUDA Programming model

Until 2006, graphic cards were very difficult to use because programmers had to use graphic Application Programming Interface (API), like OpenGL, to program these chips. These APIs are meant for graphical programming (like in video games) and are not particularly adequate for general-purpose and scientific computations. But everything changed in 2007 with the release of CUDA. The API C for CUDA is an extension of the C standard, which enables GPUs to draw all their computing power out of them. A CUDA program consists of one or more phases that are executed on either the host (CPU) or the device (GPU). The problem parts that exhibit little or no data parallelism are implemented in the host code. On the contrary, the parts of the problem that exhibit a rich amount of data parallelism are implemented in the device code. The program supplies a single source code encompassing host and device code. The NVIDIA C Compiler (NVCC) separates the two. The host code is straight ANSI C code and is compiled with the host's standard C compilers and runs as an ordinary process. The device code is written using ANSI C extended with keywords for labelling data-parallel functions and invoking kernels with their associated data structures. The device code is typically further compiled by the NVCC and executed on a GPU device. A typical execution of a CUDA program follows these steps:

- Copy data from CPU memory to GPU memory.

- Invoke kernels to operate on the data stored in GPU memory.

- Copy data back from GPU memory to CPU memory.

Threads and kernels

Let us now have a look at the CUDA kernel functions and the organizations of threads generated by the invocation of kernel functions. In CUDA, a kernel function specifies the code to be executed by all threads on the CUDA device. Since all threads execute the same code, the focus of the CUDA programming is on the logic of your algorithm in a straightforward fashion (by writing sequential code) and not getting bogged down with details of creating and managing thousands of GPU threads. This style of programming is well-known as Single Program Multiple Data.

We define a kernel using a CUDA-specific keyword __global__ in front of the declaration of a function. This keyword indicates that the function is a kernel and that it can be called from host functions to generate a grid of threads. A kernel function must return a void. An example of a kernel function is:

1 2 3 4 5 | |

When a kernel is invoked, it is executed as a grid of parallel threads. Threads in a grid are organized into a two-level hierarchy. At the top level, each grid consists of one or more thread blocks. And each block in a grid must have the same number of threads organized in the same manner. To call a kernel, we have to specify the characteristics of the grid and the blocks:

1 2 | |

grid_size and block_size in the previous call are of type dim3 and are declared and initialized with the following code:

1 2 3 | |

Besides kernels that are functions called from a host and executed on a device, we can also write other types of functions in CUDA. The possible function type qualifiers used in CUDA C programming are:

__global__: functions are executed on the device and callable from the host. For devices with compute capability equal to or larger than 3, such functions are callable also from the device. Such functions must have avoidreturn type.__device__: functions execute on the device and are callable from the device only.__host__: functions execute on the host and are callable from the host only.

We can use __device__ and __host__ qualifiers together. In that case, the function is compiled for both the host and the device.

-

© Patricio Bulić, University of Ljubljana, Faculty of Computer and Information Science. The material is published under license CC BY-NC-SA 4.0. ↩