Jobs and Tasks in the Slurm System

Users of a computer cluster interact primarily with Slurm, a middleware system for job control whose name originated as an acronym for Simple Linux Utility for Resource Management. Slurm manages job queues, allocates the necessary resources to jobs, and monitors their execution. Through Slurm, users obtain access to computational resources (compute nodes) for a specified period, submit jobs to run on those resources, and monitor the progress of those jobs.

Running Jobs through Slurm

To run user programs on the compute nodes, we use Slurm. To do so, we prepare a job submission that specifies the following:

- the programs and files needed for execution,

- how the program is invoked,

- the computational resources needed for execution,

- the time limit for the job, and other parameters.
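The specifications listed above map naturally onto a batch script. The following Python sketch assembles one as text. The job name, program name, and resource values are hypothetical placeholders, and the helper `make_batch_script` is introduced here only for illustration; the `#SBATCH` options it writes (`--job-name`, `--ntasks`, `--time`, `--output`) are standard `sbatch` options.

```python
def make_batch_script(job_name, command, ntasks, time_limit, output_file):
    """Return the text of a Slurm batch script for the given specification."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",   # label shown in the queue
        f"#SBATCH --ntasks={ntasks}",       # resources: number of tasks
        f"#SBATCH --time={time_limit}",     # time limit, e.g. HH:MM:SS
        f"#SBATCH --output={output_file}",  # file that receives standard output
        "",
        f"srun {command}",                  # invocation on the allocated resources
    ]
    return "\n".join(lines) + "\n"


# Hypothetical example values; adjust to the actual cluster and program.
script = make_batch_script(
    job_name="demo",
    command="./my_program input.txt",
    ntasks=4,
    time_limit="00:10:00",
    output_file="demo-%j.out",  # %j is replaced by the job ID
)
print(script)
```

Saved to a file such as `job.sh`, a script of this shape would typically be submitted with `sbatch job.sh`.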

A job that runs simultaneously on multiple cores is typically divided into tasks.

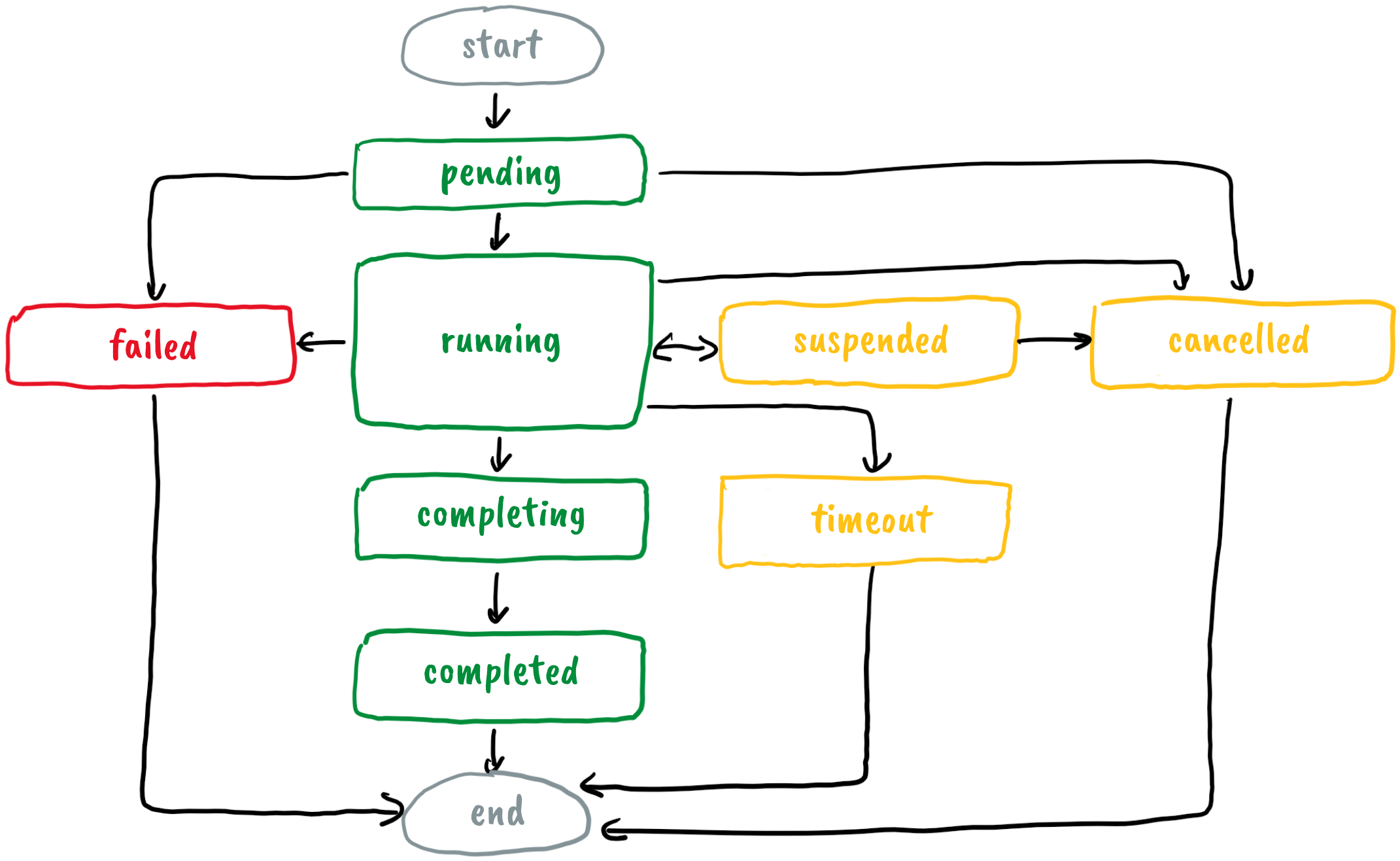
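One common way to divide work among tasks is for each task to pick its share based on its rank. When tasks are launched with `srun`, Slurm sets the environment variables `SLURM_PROCID` (the rank of the task) and `SLURM_NTASKS` (the total number of tasks). The sketch below assumes a hypothetical list of work items and a simple round-robin split; the fallback values let it run outside Slurm as a single task.

```python
import os


def items_for_task(items, rank, ntasks):
    """Round-robin split: the task with the given rank takes every ntasks-th item."""
    return items[rank::ntasks]


# Outside Slurm these variables are unset, so fall back to a single task.
rank = int(os.environ.get("SLURM_PROCID", "0"))
ntasks = int(os.environ.get("SLURM_NTASKS", "1"))

work = list(range(10))  # hypothetical work items
print(f"task {rank} of {ntasks} handles {items_for_task(work, rank, ntasks)}")
```

Other splitting schemes (contiguous blocks, dynamic queues) are equally possible; round-robin is used here only because it is short.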

Lifecycle of a Job

Once a job is prepared, it is submitted to the job queue. At this stage, the Slurm system assigns it a unique identifier (JOBID) and places it in a pending state. Slurm selects jobs from the queue based on available computing resources, estimated execution time, and priority settings.
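On submission, `sbatch` normally confirms the assigned identifier with a line of the form `Submitted batch job <JOBID>`. A minimal sketch for extracting the identifier from that line follows; the sample text is made up for illustration and the helper name `parse_job_id` is hypothetical.

```python
import re


def parse_job_id(sbatch_output):
    """Extract the numeric JOBID from the sbatch confirmation message."""
    match = re.search(r"Submitted batch job (\d+)", sbatch_output)
    if match is None:
        raise ValueError(f"no job id found in: {sbatch_output!r}")
    return int(match.group(1))


sample = "Submitted batch job 123456\n"  # illustrative output, not a real job
job_id = parse_job_id(sample)
print(job_id)
```

The identifier can then be used to query the job, for example with `squeue -j <JOBID>`.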

When the required resources become available, the job transitions into the running state, indicating that it is actively being executed. When execution ends, the job passes through the completing state, during which Slurm waits for any remaining tasks or nodes to finish, before finally reaching the completed state.

If needed, the execution of a job can be temporarily suspended or permanently canceled. A job may also encounter errors during execution, leading to a failed state. Additionally, Slurm can terminate a job if it exceeds the specified time limit (timeout).
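The states described in this section can be summarized as a small transition table. The state names are the ones Slurm reports (for example in `squeue` output); the set of transitions is a simplified model of the lifecycle as described here, not a complete account of how Slurm behaves.

```python
# Simplified model of the job lifecycle described in this section.
TRANSITIONS = {
    "PENDING": {"RUNNING", "CANCELLED"},
    "RUNNING": {"COMPLETING", "SUSPENDED", "CANCELLED", "FAILED", "TIMEOUT"},
    "SUSPENDED": {"RUNNING", "CANCELLED"},
    "COMPLETING": {"COMPLETED"},
    # Terminal states: no further transitions.
    "COMPLETED": set(),
    "CANCELLED": set(),
    "FAILED": set(),
    "TIMEOUT": set(),
}


def can_move(current, new):
    """Return True if the model allows a job to go from current to new."""
    return new in TRANSITIONS[current]


def is_terminal(state):
    """Return True if a job in this state will not change state again."""
    return not TRANSITIONS[state]


print(can_move("PENDING", "RUNNING"))
print(is_terminal("TIMEOUT"))
```

A monitoring script could use `is_terminal` to decide when to stop polling a job.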

Understanding the lifecycle of a job helps users monitor their job's progress and manage their computational tasks effectively.