Cluster Software

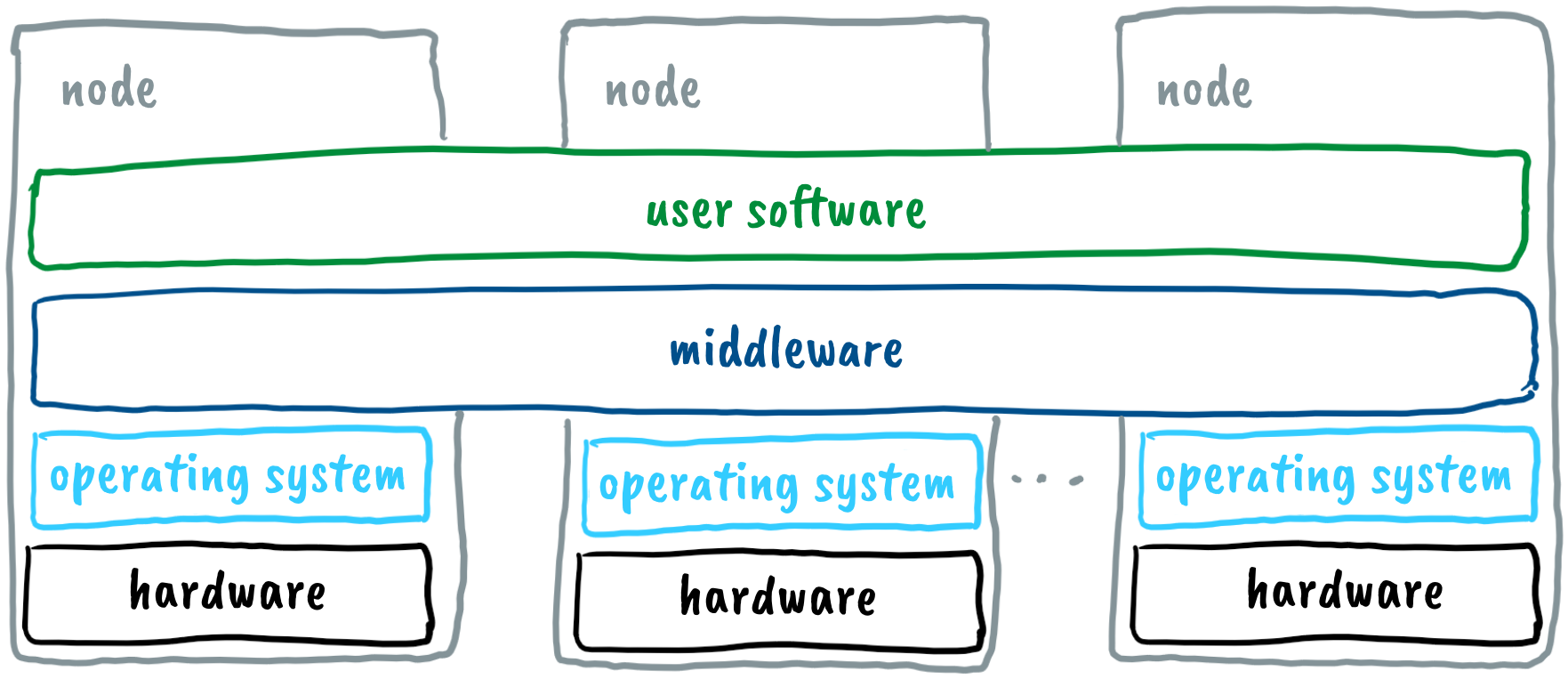

The software of a computer cluster consists of the following:

- operating system,

- middleware, and

- user software (applications).

Operating System

The operating system serves as a bridge between user software and computer hardware. It performs basic tasks such as memory management, processor management, device control, file system management, security functions, system operation control, resource usage monitoring, and error detection. Popular operating systems include the freely available Linux and the commercial macOS and Windows. The nodes of the Arnes, NSC, Maister, and Trdina clusters run the AlmaLinux operating system, while the nodes of the Vega cluster run Red Hat Enterprise Linux.

Middleware

Middleware is software that connects the operating system with user applications. In a computer cluster, it coordinates the operation of multiple nodes, enables centralized node management, handles user authentication, and controls the execution of jobs (user applications) on the nodes, among other things.

Cluster users interact with middleware mainly to control their jobs. A widely used middleware system for this purpose is Slurm (Simple Linux Utility for Resource Management). Slurm manages a job queue, assigns the required resources to jobs, and monitors their execution. Users work with Slurm to obtain access to resources (compute nodes) for a specified period, submit jobs, and monitor their execution.
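To make the idea of a job queue more concrete, here is a minimal Python sketch of a strictly first-in, first-out queue that hands out whole nodes. It is a toy model, not Slurm's algorithm: the `Job` class and the `schedule_fifo` function are hypothetical, and real Slurm also takes job priorities and partitions into account and typically uses backfill scheduling.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical job request: a name, a number of nodes, and a run time in minutes."""
    name: str
    nodes: int
    minutes: int


def schedule_fifo(jobs, total_nodes):
    """Simulate a strict first-in, first-out queue on identical nodes.

    Returns (name, start_minute, end_minute) for each job. A job starts only
    when enough nodes are free and every job ahead of it has started
    (no priorities, no backfilling).
    """
    queue = deque(jobs)
    running = []  # (end_minute, nodes) for jobs currently holding nodes
    free = total_nodes
    now = 0
    timeline = []
    while queue:
        job = queue[0]
        if job.nodes > total_nodes:
            raise ValueError(f"{job.name} requests more nodes than the cluster has")
        if job.nodes <= free:
            # Enough idle nodes: allocate them and start the job now.
            queue.popleft()
            free -= job.nodes
            running.append((now + job.minutes, job.nodes))
            timeline.append((job.name, now, now + job.minutes))
        else:
            # The head of the queue must wait: jump to the next job
            # completion and release that job's nodes.
            running.sort()
            end, nodes = running.pop(0)
            now = max(now, end)
            free += nodes
    return timeline


if __name__ == "__main__":
    jobs = [Job("A", 2, 30), Job("B", 2, 60), Job("C", 3, 10), Job("D", 1, 5)]
    for name, start, end in schedule_fifo(jobs, total_nodes=4):
        print(f"{name}: minutes {start}-{end}")
```

With four nodes, job D (one node) does not start until minute 60, even though two nodes sit idle from minute 30, because it is queued behind job C. Filling such gaps with small jobs is what backfill scheduling is designed to do.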

User Software

Users need user software to perform the tasks they want, both on ordinary computers and on computer clusters. Only user software built for the Linux operating system can be used on these clusters. Examples of user software commonly used on clusters include Gromacs for molecular dynamics simulations, OpenFOAM for fluid flow simulations, Athena for analyzing collisions recorded by the ATLAS experiment at CERN's Large Hadron Collider (LHC), and TensorFlow for deep learning in artificial intelligence. During the workshop, we will use the FFmpeg tool for video processing.

The user software can be installed on clusters in various ways:

- The administrator installs it directly on the nodes.

- The administrator prepares environment modules.

- The administrator prepares containers for general use.

- The user installs it in their directory.

- The user prepares a container in their directory.

To keep system maintenance manageable, administrators provide user software as environment modules or, preferably, as containers.

Environment Modules

When users log in to the cluster, they are placed in a command-line interface with default environment settings. To make their work easier, they can extend this environment with environment modules. Environment modules are tools that modify the command-line settings and let users switch easily between environments while they work.

Each environment module file contains the information needed to configure the command-line interface for a specific piece of software. When a module is loaded, environment variables are adjusted so that the selected user software can run. One such variable is PATH, which lists the directories in which the operating system searches for programs.
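The lookup that PATH controls can be sketched in a few lines of Python. The function `find_program` (a hypothetical name) scans the directories of a PATH-like string from left to right and returns the first executable match; the standard library's `shutil.which` performs a similar search. The demo uses temporary directories with placeholder files instead of real software, so the directory names are assumptions of the example.

```python
import os
import stat
import tempfile


def find_program(name, path_value):
    """Return the first executable file called `name` in a PATH-like string, or None.

    Directories are searched from left to right, so a directory placed at the
    front of PATH (as loading a module typically does) takes precedence over
    same-named programs in later directories.
    """
    for directory in path_value.split(os.pathsep):
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


def make_stub(directory, name):
    """Create an executable placeholder file standing in for a real program."""
    path = os.path.join(directory, name)
    with open(path, "w") as f:
        f.write("#!/bin/sh\n")
    os.chmod(path, os.stat(path).st_mode | stat.S_IXUSR)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as system_dir, \
         tempfile.TemporaryDirectory() as module_dir:
        make_stub(system_dir, "ffmpeg")
        make_stub(module_dir, "ffmpeg")
        path = system_dir
        print("default PATH finds:", find_program("ffmpeg", path))
        path = module_dir + os.pathsep + path  # roughly what a module load does
        print("after prepending finds:", find_program("ffmpeg", path))
```

Because the search stops at the first match, the order of the directories in PATH decides which version of a program runs.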

Environment modules are installed and updated by the cluster administrator. By providing software as modules, the administrator simplifies maintenance and avoids, among other things, problems caused by different library versions. Users can load and unload the available modules as they work.
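What loading and unloading a module does to PATH can be modeled roughly as follows. This is a simplified sketch that assumes each module only prepends directories to PATH. The module names and `/opt/software/...` paths are hypothetical. Real module systems such as Environment Modules and Lmod read module files that can set many more variables and track changes more carefully.

```python
import os

# Hypothetical module definitions: each module only prepends directories to PATH.
# Real module files (Tcl or Lua) can also set other variables such as LD_LIBRARY_PATH.
MODULES = {
    "FFmpeg/6.0": ["/opt/software/FFmpeg/6.0/bin"],
    "Python/3.11": ["/opt/software/Python/3.11/bin"],
}


class ModuleEnvironment:
    """Toy model of loading and unloading modules on a copy of the environment."""

    def __init__(self, env):
        self.env = dict(env)  # a copy, so os.environ is never modified
        self.loaded = []

    def _path_entries(self):
        value = self.env.get("PATH", "")
        return value.split(os.pathsep) if value else []

    def load(self, name):
        """Prepend the module's directories so its programs are found first."""
        if name in self.loaded:
            return
        self.env["PATH"] = os.pathsep.join(MODULES[name] + self._path_entries())
        self.loaded.append(name)

    def unload(self, name):
        """Remove the module's directories again (simplified: removes every occurrence)."""
        if name not in self.loaded:
            return
        remove = set(MODULES[name])
        kept = [d for d in self._path_entries() if d not in remove]
        self.env["PATH"] = os.pathsep.join(kept)
        self.loaded.remove(name)


if __name__ == "__main__":
    shell = ModuleEnvironment({"PATH": os.pathsep.join(["/usr/bin", "/bin"])})
    shell.load("FFmpeg/6.0")
    print(shell.loaded, shell.env["PATH"])
    shell.unload("FFmpeg/6.0")
    print(shell.loaded, shell.env["PATH"])
```

On the clusters themselves, the corresponding commands are `module load`, `module unload`, and `module list`.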

Virtualization and Containers

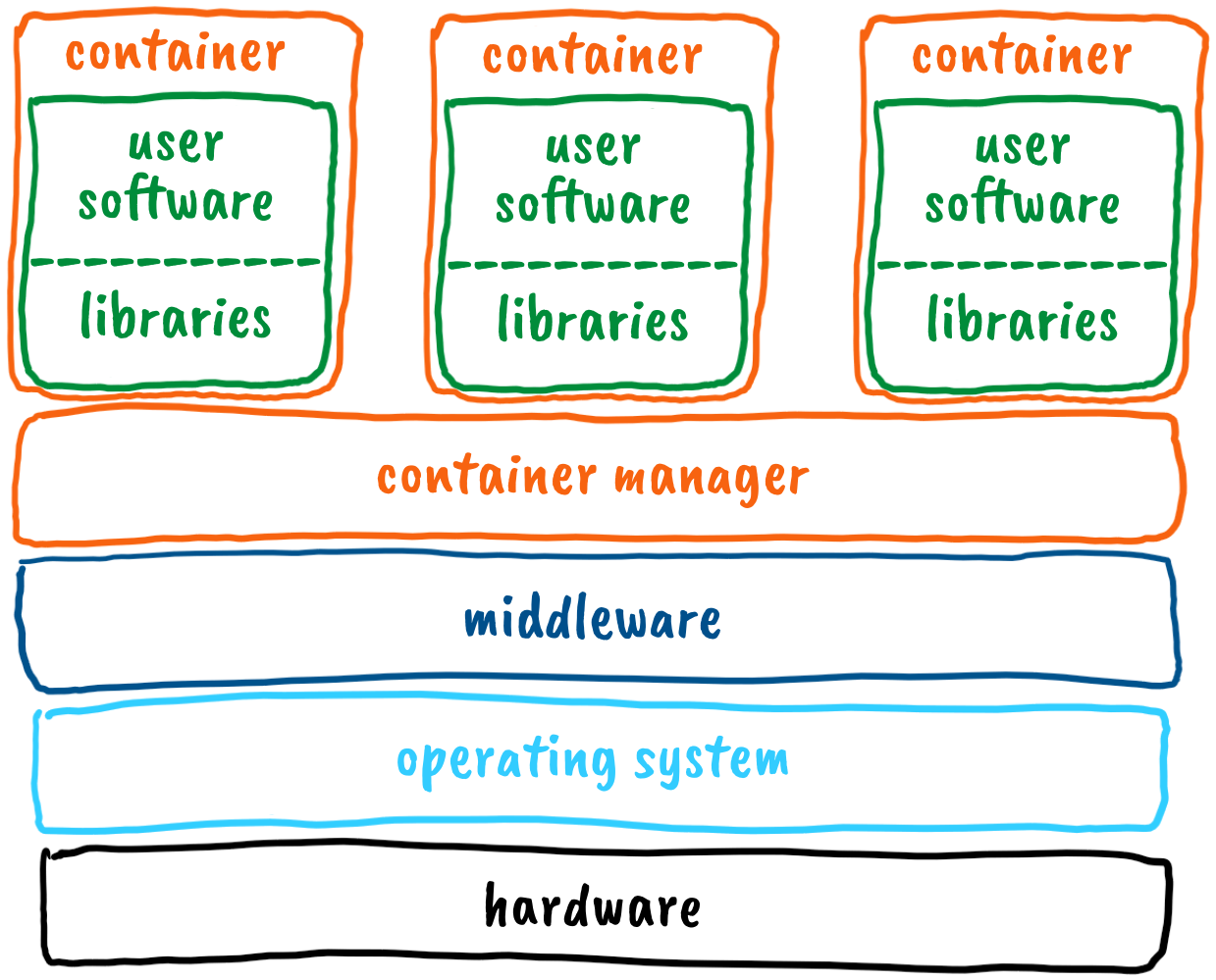

As we have seen, the nodes have many processor cores that can run different user applications simultaneously. When user applications are installed directly on the operating system, compatibility issues can arise, most commonly because of incompatible library versions.

Virtualizing the nodes is an elegant solution: it allows diverse user applications to coexist and therefore makes the system easier to manage. Virtualization slightly reduces performance, but it increases robustness and ease of maintenance. We distinguish between hardware virtualization and operating system virtualization. The former gives us virtual machines; the latter gives us containers.

Container-based virtualization is better suited to supercomputer clusters. Containers do not include their own operating system kernel, which makes them smaller and lets the container manager switch between them more easily. The container manager keeps the containers isolated from one another while giving each of them access to the shared operating system and basic libraries. Only the necessary user software and additional libraries are installed separately in each container. The image above illustrates node virtualization with containers.

Cluster administrators encourage users to use containers whenever possible because:

- Users can create their own containers (inside a container, we have permission to install software).

- Containers offer various customization options for the operating system, tools, and user software.

- Containers ensure that jobs are reproducible (they give consistent results even after operating system upgrades).

- Administrators can better control resource consumption through containers.

The most widely used container platform is Docker. The clusters mentioned above instead provide Apptainer, a container platform better adapted to supercomputing environments. Inside an Apptainer container, users work under the same user account as on the host operating system and keep their network and data access. Apptainer can also run Docker containers, among other formats.
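As a hedged illustration of running a Docker image through Apptainer, the Python sketch below builds, and if Apptainer is available runs, an `apptainer exec` command. The `docker://ubuntu:22.04` image and the helper names `apptainer_exec_command` and `run_in_container` are assumptions chosen for this example. Running `whoami` inside the container should print your own cluster username, which reflects the shared user account described above.

```python
import shlex
import shutil
import subprocess


def apptainer_exec_command(image, command):
    """Build the argument list for `apptainer exec <image> <command...>`."""
    return ["apptainer", "exec", image, *command]


def run_in_container(image, command):
    """Run `command` inside `image` with Apptainer and return its standard output."""
    if shutil.which("apptainer") is None:
        raise RuntimeError("apptainer is not on PATH; run this on a cluster node")
    args = apptainer_exec_command(image, command)
    # check=True raises CalledProcessError if the command in the container fails.
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    image = "docker://ubuntu:22.04"  # hypothetical choice of public Docker image
    print("Command:", shlex.join(apptainer_exec_command(image, ["whoami"])))
    if shutil.which("apptainer"):
        # On first use, Apptainer downloads the Docker image and converts it.
        print("User inside container:", run_in_container(image, ["whoami"]))
```

Passing the command as a list rather than a single string avoids shell quoting problems when arguments contain spaces.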