SLURM: Use and good practices

Access through the login node combined with the SLURM system enables direct work on the clusters, fast development, and precise monitoring of job execution.

This type of public access is currently (2020-02) supported in SLING by the clusters Maister (University of Maribor, project HPC RIVR), Trdina (Faculty of Information Studies, project HPC RIVR), and NSC (Jožef Stefan Institute).

Arranging access: login node

To use the clusters through the SLING system, you must gain access to a login node. The login node is a computer that is directly connected to the cluster, is configured to communicate with the SLURM control daemon, and has the SLURM command-line tools installed.

See Instructions > Acquiring access.

Useful commands

- sbatch, salloc, srun: start a job

- scancel: cancel a job in a queue or in progress

- smd: change the allocation of a job, e.g. after a hardware failure

- sinfo: information on system status

- squeue, sprio, sjstat: information on the status of jobs and queues

- sacct, sreport: accounting information on running and completed jobs and job steps; generating reports

- sview, smap: graphical representation of the status of the system, jobs, and network topology

- sattach: attaching to a running job step

- sbcast, sgather: transferring files to (sbcast) and from (sgather) compute nodes

- scontrol: administering, monitoring, and configuring the cluster

- sacctmgr: managing the accounting database: cluster data, users, accounts, and partitions

All of this functionality is also available through SLURM's documented API, so it can be used programmatically.

Clusters and resources

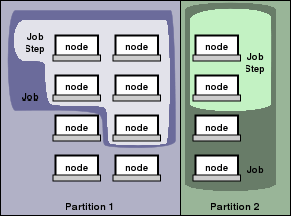

SLURM describes the resources of a cluster with four concepts: nodes, which are units of computing capacity; partitions, which are logical groups of nodes; jobs (or allocations), which are sets of resources allocated to a user for a specific amount of time; and job steps, which are the individual tasks, consecutive or parallel, executed within an allocation or job.

Partitions are logical units that serve both as queues for running jobs and as units in which parameters and restrictions can be set, such as:

- size limits of the allocation/job/task/step

- time limits for execution

- permissions and priorities for users and groups

- rules for managing priorities

- availability of resources in the partition (nodes, processors, memory, GPGPU accelerators, etc.)

Sending tasks through the SLURM system

Jobs are submitted with three commands:

- sbatch is used to submit job scripts, which are executed when their turn comes. A script can contain multiple srun commands that run job steps. When you submit a job with sbatch, you receive its job ID.

- salloc is used to allocate resources for a job in real time. It is usually used to start a shell, from which job steps are then started with srun.

- srun is used to run tasks in real time.

A job can include multiple tasks or steps, which can be executed consecutively or in parallel, on separate or shared resources within the job's allocation.

Usually, srun is used in combination with sbatch or salloc, that is, within an existing allocation of selected resources.

If you are already familiar with other queue management systems, you can compare them with SLURM; analogous commands, concepts, and variables are listed here: https://slurm.schedmd.com/rosetta.pdf

srun – running parallel jobs with the SLURM system

srun is often equated with mpirun for MPI-type jobs. For jobs that are not parallel in this way, sbatch is the better choice. The examples below use the program hostname as the application; it only prints the name of the compute node (computer) on which the process is running:

- Example 1: srun hostname

- Example 2: srun -N 2 hostname

- Example 3: srun -n 2 hostname

- Example 4: srun -N 2 -n 2 hostname

- Example 5: srun -N 2 -n 4 hostname

Options used:

- -N or --nodes: number of nodes

- -n or --ntasks: number of tasks in the job
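To see how -N and -n distribute the tasks, it helps to count how often each node name appears in the output of hostname. A minimal sketch; tally_hosts is a hypothetical helper that only uses the standard tools sort, uniq, and awk:

```bash
# tally_hosts (hypothetical helper): reads one host name per line on stdin
# and prints "<host> <number of tasks>" for each distinct host, sorted by name.
tally_hosts() {
  sort | uniq -c | awk '{ print $2, $1 }'
}
```

For example, srun -N 2 -n 4 hostname | tally_hosts lists each of the two nodes together with the number of tasks that ran on it.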

Good practice:

- Use -n or -N together with the --ntasks-per-node option.

- Add an executable bit to your scripts: chmod a+x my_script.sh

sbatch – sending executable files with the SLURM system

Using sbatch:

The resources and other parameters required for running the job (queue/partition, time limit, output file, etc.) can be specified with #SBATCH directives at the start of the job script, right after #!/bin/bash.

#!/bin/bash

#SBATCH --job-name=test

#SBATCH --output=result.txt

#SBATCH --ntasks=1

#SBATCH --time=10:00

#SBATCH --mem-per-cpu=100

srun hostname

srun sleep 60

Good practice:

Add an executable bit to sbatch scripts: chmod a+x test-script.sh

Submitting the job: sbatch test-script.sh
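sbatch reports the job ID in a line such as Submitted batch job 948702. When a script needs that ID (for squeue -j, scancel, or dependencies), it can be extracted from this line; alternatively, sbatch --parsable prints only the job ID (followed by ;cluster on multi-cluster setups). A minimal sketch with a hypothetical helper extract_jobid:

```bash
# extract_jobid (hypothetical helper): reads sbatch output on stdin and
# prints the numeric job ID from the "Submitted batch job <id>" line.
extract_jobid() {
  awk '/^Submitted batch job [0-9]+/ { print $4 }'
}

# Usage on the login node (commented out so the snippet runs anywhere):
# jobid=$(sbatch test-script.sh | extract_jobid)
# squeue -j "$jobid"
```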

salloc – allocating resources

Using salloc:

- salloc requests an allocation of resources for your job and starts a shell, from which you run job steps with srun (depending on the cluster configuration, the shell runs on the login node or on the first allocated node)

- you specify the resources in the same way as with srun or sbatch

- once the job is completed (you exit the shell), the allocated resources are released

Example:

salloc --partition=grid --nodes=2 --ntasks-per-node=8 --time=01:00:00

module load mpi

srun ./hellompi

exit

Common options of srun/sbatch/salloc

- --ntasks-per-node: number of tasks on each node

- -c or --cpus-per-task: number of cores for each task

- --time: time limit for the job (walltime) (format: dd-hh:mm:ss)

- --output: write stdout to a specific file

- --job-name: name of the job

- --mem: memory required per node (format: 4G)

- --mem-per-cpu: memory per allocated core

- --cpu-bind=[quiet|verbose,]<type>: bind tasks to CPUs (e.g. --cpu-bind=cores)

For more options, see man srun and man sbatch, or run srun --help and sbatch --help.
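The --time option accepts several formats besides dd-hh:mm:ss. According to the sbatch manual, these are minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, and days-hours:minutes:seconds, so --time=10:00 means ten minutes, not ten hours. The sketch below converts such a value to seconds to make the arithmetic explicit; slurm_time_to_seconds is a hypothetical helper, and special values such as UNLIMITED are not handled.

```bash
# slurm_time_to_seconds (hypothetical helper): convert a SLURM time limit
# ("M", "M:S", "H:M:S", "D-H", "D-H:M", "D-H:M:S") to seconds.
slurm_time_to_seconds() {
  printf '%s\n' "$1" | awk '{
    d = 0; h = 0; m = 0; s = 0
    t = $0
    dash = index(t, "-")
    if (dash > 0) {                      # days-hours[:minutes[:seconds]]
      d = substr(t, 1, dash - 1)
      n = split(substr(t, dash + 1), f, ":")
      h = f[1]
      if (n >= 2) m = f[2]
      if (n >= 3) s = f[3]
    } else {                             # no day field
      n = split(t, f, ":")
      if (n == 3)      { h = f[1]; m = f[2]; s = f[3] }
      else if (n == 2) { m = f[1]; s = f[2] }
      else             { m = f[1] }      # a single number means minutes
    }
    print d * 86400 + h * 3600 + m * 60 + s
  }'
}
```

For example, 5-0:0 (used in the next section) is 5 * 86400 = 432000 seconds, i.e. five days, while 10:00 is 600 seconds.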

The difference between srun and sbatch

- both commands accept largely the same options

- sbatch is the only one of the two that supports job arrays (sets of jobs submitted with the same script and options)

- srun additionally supports --exclusive at the job-step level, which gives each step dedicated resources and thus allows several parallel steps to run within one resource allocation (from SLURM v20.02 this also includes additional gres resources, e.g. GPUs)

Example of a job with sbatch

sbatch --partition=grid --job-name=test --mem=4G --time=5-0:0 --output=test.log myscript.sh

is the same as:

sbatch -p grid -J test --mem=4G -t 5-0:0 -o test.log myscript.sh

and the same as:

#!/bin/bash

#SBATCH --partition=grid

#SBATCH --job-name=test

#SBATCH --mem=4G

#SBATCH --time=5-0:0

#SBATCH --output=test.log

sh myscript.sh

Job management

Basic overview of queues

How to check the status of nodes and queues?

- squeue -l: details of jobs in the queue (-l = long)

- squeue -u $USER: jobs of user $USER

- squeue -p gridlong: jobs in the gridlong queue

- squeue -t PD: jobs waiting in the queue (pending)

- squeue -j <jobid> --start: estimated start time of the job

- sinfo: status of queues/partitions

- sinfo -l -N: detailed information for each node

- sinfo -T: information on reservations

- scontrol show nodes: data on nodes

Detailed overview of all jobs in the queue: squeue -l:

JOBID PARTITION NAME USER STATE TIME TIME_LIMIT NODES NODELIST(REASON)

948700 gridlong <jobname> <user> PENDING 0:00 22:03:00 1 (Priority)

932891 gridlong <jobname> <user> RUNNING 3-16:43:04 7-00:00:00 1 nsc-gsv005

933253 gridlong <jobname> <user> RUNNING 3-15:24:53 7-00:00:00 1 nsc-gsv004

933568 gridlong <jobname> <user> RUNNING 3-14:19:30 7-00:00:00 1 nsc-msv003

934391 gridlong <jobname> <user> RUNNING 3-10:27:51 7-00:00:00 1 nsc-gsv001

934464 gridlong <jobname> <user> RUNNING 3-08:41:19 7-00:00:00 1 nsc-msv005

934635 gridlong <jobname> <user> RUNNING 3-01:48:24 7-00:00:00 1 nsc-msv005

934636 gridlong <jobname> <user> RUNNING 3-01:48:07 7-00:00:00 1 nsc-msv003

Job overview of a user: squeue -u $USER:

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

948702 gridlong <jobname> <user> R 0:03 1 nsc-gsv003

Estimated start time of a pending job: squeue -j <jobid> --start:

JOBID PARTITION NAME USER ST START_TIME NODES SCHEDNODES NODELIST(REASON)

948555 gridlong <jobname> <user> PD N/A 1 (null) (Priority)
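A job disappears from the squeue output once it has finished, so a script can wait for a job by polling squeue. A minimal sketch; wait_for_job is a hypothetical helper (-h suppresses the header and -o %T prints only the job state). sbatch also has a --wait option that blocks until the submitted job terminates, which may be simpler when the job is submitted from the same script.

```bash
# wait_for_job (hypothetical helper): polls squeue every <interval> seconds
# (default 30) until the job with the given ID no longer appears in the queue.
wait_for_job() {
  local jobid=$1 interval=${2:-30} state
  while :; do
    state=$(squeue -h -j "$jobid" -o %T 2>/dev/null)
    [ -z "$state" ] && return 0
    sleep "$interval"
  done
}

# Usage: wait_for_job 948555 60 && sacct -j 948555
```

A generous polling interval is advisable, because every squeue call is a request to the SLURM controller.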

Overview of partitions and nodes: sinfo (example from the Maister cluster):

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

grid* up 2-00:00:00 3 resv gpu[01-02,04]

grid* up 2-00:00:00 30 mix cn[01-17,20-27,34,41-45]

grid* up 2-00:00:00 4 alloc cn[46-48],gpu[03,05-06]

grid* up 2-00:00:00 45 idle cn[18-19,28-33,35-40],dpcn[01-28]

long up 14-00:00:0 4 mix cn[42-45]

long up 14-00:00:0 4 alloc cn[46-48]

Detailed overview of all nodes: scontrol show nodes. The example shows one node.

NodeName=nsc-msv020 Arch=x86_64 CoresPerSocket=16

CPUAlloc=20 CPUTot=64 CPULoad=21.24

AvailableFeatures=AMD

ActiveFeatures=AMD

Gres=(null)

NodeAddr=nsc-msv020 NodeHostName=nsc-msv020 Version=20.02.0

OS=Linux 5.0.9-301.fc30.x86_64 #1 SMP Tue Apr 23 23:57:35 UTC 2019

RealMemory=257920 AllocMem=254000 FreeMem=56628 Sockets=4 Boards=1

State=MIXED ThreadsPerCore=1 TmpDisk=0 Weight=1 Owner=N/A MCS_label=N/A

Partitions=gridlong

BootTime=2020-06-20T15:16:22 SlurmdStartTime=2020-06-20T16:26:53

CfgTRES=cpu=64,mem=257920M,billing=64

AllocTRES=cpu=20,mem=254000M

CapWatts=n/a

CurrentWatts=0 AveWatts=0

ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s

Job management commands

- sacct: accounting data for completed and running jobs (sacct -j <jobid>)

- sstat: statistics for a running job (sstat -j <jobid> --format=AveCPU,AveRSS,AveVMSize,MaxRSS,MaxVMSize)

- scontrol show: e.g. scontrol show job or scontrol show partition

- scontrol update: change job settings

- scontrol hold: hold a pending job so that it does not start

- scontrol release: release a held job

- scancel: cancel a job

- sprio: display job priorities

Overview of data and steps for a job: sacct -j <jobid>:

JobID JobName Partition Account AllocCPUS State ExitCode

------------ ---------- ---------- ---------- ---------- ---------- --------

949849 <jobname> gridlong <user> 1 RUNNING 0:0

949849.batch batch <user> 1 RUNNING 0:0

949849.exte+ extern <user> 1 RUNNING 0:0

Statistics of a running job: sstat -j <jobid> --format=AveCPU,AveRSS,AveVMSize. The --format option selects which fields are displayed.

AveCPU AveRSS AveVMSize

---------- ---------- ----------

00:00.000 11164K 169616K
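sstat and sacct report memory values such as AveRSS with a unit suffix (here 11164K). To compare them with the value requested through --mem-per-cpu (in megabytes by default), they can be converted to a common unit. A minimal sketch, assuming integer values with a K, M, G, or T suffix as in the output above, with the suffixes taken as powers of 1024; to_mib is a hypothetical helper, and treating a value without a suffix as bytes is an assumption.

```bash
# to_mib (hypothetical helper): convert a SLURM memory value with a K, M, G
# or T suffix (powers of 1024) to whole MiB, rounding down.
# A value without a suffix is assumed to be in bytes.
to_mib() {
  case $1 in
    *K) echo $(( ${1%K} / 1024 )) ;;
    *M) echo $(( ${1%M} )) ;;
    *G) echo $(( ${1%G} * 1024 )) ;;
    *T) echo $(( ${1%T} * 1024 * 1024 )) ;;
    *)  echo $(( $1 / 1048576 )) ;;
  esac
}

# Usage, e.g.: for v in $(sstat -n -P -j <jobid> --format=MaxRSS); do to_mib "$v"; done
```

For the output above, to_mib 11164K gives 10, i.e. about 10 MiB (rounded down).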

Detailed partition overview: scontrol show partition:

PartitionName=gridlong

AllowGroups=ALL AllowAccounts=ALL AllowQos=ALL

AllocNodes=ALL Default=YES QoS=N/A

DefaultTime=00:30:00 DisableRootJobs=NO ExclusiveUser=NO GraceTime=0 Hidden=NO

MaxNodes=UNLIMITED MaxTime=14-00:00:00 MinNodes=0 LLN=NO MaxCPUsPerNode=UNLIMITED

Nodes=nsc-msv0[01-20],nsc-gsv0[01-07],nsc-fp0[01-08]

PriorityJobFactor=1 PriorityTier=1 RootOnly=NO ReqResv=NO OverSubscribe=NO

OverTimeLimit=NONE PreemptMode=OFF

State=UP TotalCPUs=1984 TotalNodes=35 SelectTypeParameters=NONE

JobDefaults=(null)

DefMemPerCPU=2000 MaxMemPerNode=UNLIMITED

TRESBillingWeights=CPU=1.0,Mem=0.25G

Detailed overview of job settings: scontrol show job <jobid>:

JobId=950168 JobName=test

UserId=<user> GroupId=<group> MCS_label=N/A

Priority=4294624219 Nice=0 Account=nsc-users QOS=normal

JobState=PENDING Reason=Priority Dependency=(null)

Requeue=1 Restarts=0 BatchFlag=1 Reboot=0 ExitCode=0:0

RunTime=00:00:00 TimeLimit=01:00:00 TimeMin=N/A

SubmitTime=2020-07-10T12:41:14 EligibleTime=2020-07-10T12:41:14

AccrueTime=2020-07-10T12:41:14

StartTime=Unknown EndTime=Unknown Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2020-07-10T12:41:20

Partition=gridlong AllocNode:Sid=ctrl:15247

ReqNodeList=(null) ExcNodeList=(null)

NodeList=(null)

NumNodes=1 NumCPUs=1 NumTasks=1 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

TRES=cpu=1,mem=100M,node=1,billing=1

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryCPU=100M MinTmpDiskNode=0

Features=(null) DelayBoot=00:00:00

OverSubscribe=OK Contiguous=0 Licenses=(null) Network=(null)

Command=/home/$USER/dir/test.sh

WorkDir=/home/$USER/dir

StdErr=/home/$USER/dir/slurm-950168.out

StdIn=/dev/null

StdOut=/home/$USER/dir/slurm-950168.out

Power=

MailUser=(null) MailType=NONE
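The output of scontrol show job consists of Key=Value pairs separated by whitespace. To use a single value in a script, the pair can be picked out with awk. A minimal sketch with a hypothetical helper scontrol_field; values that themselves contain spaces are cut at the first space.

```bash
# scontrol_field (hypothetical helper): print the value of the first
# Key=Value pair whose key matches $1, read from scontrol output on stdin.
scontrol_field() {
  awk -v key="$1" '{
    for (i = 1; i <= NF; i++) {
      eq = index($i, "=")
      if (eq > 0 && substr($i, 1, eq - 1) == key) {
        print substr($i, eq + 1)
        exit
      }
    }
  }'
}

# Usage: scontrol show job 950168 | scontrol_field JobState
```

For the job shown above, this prints PENDING.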

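Changing job settings: scontrol update JobId=<jobid> JobName=<jobname>. The command itself prints nothing on success; scontrol show job <jobid> then shows the new setting: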

JobId=950168 JobName=<jobname>

UserId=<user> GroupId=<group> MCS_label=N/A

Priority=4294624219 Nice=0 Account=nsc-users QOS=normal

JobState=PENDING Reason=Priority Dependency=(null)

Requeue=1 Restarts=0 BatchFlag=1 Reboot=0 ExitCode=0:0

RunTime=00:00:00 TimeLimit=01:00:00 TimeMin=N/A

SubmitTime=2020-07-10T12:41:14 EligibleTime=2020-07-10T12:41:14

AccrueTime=2020-07-10T12:41:14

StartTime=Unknown EndTime=Unknown Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2020-07-10T12:41:20

Partition=gridlong AllocNode:Sid=ctrl:15247

ReqNodeList=(null) ExcNodeList=(null)

NodeList=(null)

NumNodes=1 NumCPUs=1 NumTasks=1 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

TRES=cpu=1,mem=1000M,node=1,billing=1

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryCPU=100M MinTmpDiskNode=0

Features=(null) DelayBoot=00:00:00

OverSubscribe=OK Contiguous=0 Licenses=(null) Network=(null)

Command=/home/$USER/dir/test.sh

WorkDir=/home/$USER/dir

StdErr=/home/$USER/dir/slurm-950168.out

StdIn=/dev/null

StdOut=/home/$USER/dir/slurm-950168.out

Power=

MailUser=(null) MailType=NONE

Holding a job: scontrol hold <jobid> prevents a pending job from starting. squeue -j <jobid> then shows the job as held (reason JobHeldUser):

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

950168 gridlong <jobname> <user> PD 0:00 1 (JobHeldUser)

Releasing a held job: scontrol release <jobid>. squeue -j <jobid> then shows that the job has been released (in this example, it has already started running):

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

950168 gridlong <jobname> <user> R 0:19 1 nsc-gsv002

Cancelling a job: scancel <jobid>. Output of squeue -u $USER right after cancelling (state CG = completing):

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

948702 gridlong <jobname> <user> CG 0:31 1 nsc-gsv003

Detailed overview of the priorities of jobs that are waiting to start: sprio -l:

JOBID PARTITION USER PRIORITY SITE AGE ASSOC FAIRSHARE JOBSIZE PARTITION QOS NICE TRES

959560 gridlong gen0004 1178 0 174 0 213 145 0 0 50 cpu=50,mem=646

959561 gridlong gen0004 1178 0 174 0 213 145 0 0 50 cpu=50,mem=646

Selecting software environment for running a job

- the Maister and NSC clusters support Singularity containers; containers intended for general use are available under /ceph/sys/singularity on the Maister cluster and under /ceph/grid/singularity-images on the NSC cluster

- software is also available through modules (work in progress): module avail

- some software is compiled locally, in /ceph/grid/software

- the clusters also support CernVM-FS; the file system is available under /cvmfs

- users have multiple options for adapting the execution environment, e.g. Conda, Singularity containers, and udocker containers

Selecting nodes for running a job

- -w or --nodelist: nodes on which to run the job

- -x or --exclude: nodes to exclude when running the job (srun --exclude=cn[01,04,08])

- -C or --constraint=<list>: select nodes with a specific feature (srun --constraint=infiniband)

- srun -p grid ...: select the partition

- srun --exclusive: start a job in exclusive mode

Overview of node features: sinfo -o %n,%f

Names of nodes depend on the local cluster convention.
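Because the feature list of a node is itself comma-separated, the output of sinfo -o %n,%f is hard to parse reliably. Separating the two fields with a space, listing nodes one per line (-N), and omitting the header (-h) gives lines such as cn01 infiniband,AMD, which the hypothetical helper below filters for one feature. Feature names are cluster-specific.

```bash
# nodes_with_feature (hypothetical helper): reads "<node> <feature,feature,...>"
# lines on stdin and prints the nodes whose feature list contains $1 exactly.
nodes_with_feature() {
  awk -v want="$1" '{
    n = split($2, feats, ",")
    for (i = 1; i <= n; i++)
      if (feats[i] == want) { print $1; break }
  }'
}

# Usage: sinfo -N -h -o '%n %f' | nodes_with_feature infiniband | sort -u
```

The sort -u is there because, with -N, a node that belongs to several partitions may be listed more than once.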

Error analysis

- to review resource usage, use sstat and sacct

- -o <file> (--output): file in which stdout is saved

- -v (--verbose): more detailed output

- scontrol show job <jobid>: review the job status

- squeue -u $USER: statuses of your jobs

Additional resources:

+ SLURM Troubleshooting

+ SLURM Job Exit Codes
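sacct reports the ExitCode of a job or step as a pair exit_code:signal (for example 0:0 in the sacct output shown earlier). A non-zero second number means the process was terminated by that signal. A minimal sketch of a hypothetical helper that turns the pair into a short description:

```bash
# explain_exit_code (hypothetical helper): interpret a SLURM ExitCode value
# of the form "<exit code>:<signal>".
explain_exit_code() {
  local code sig
  code=${1%%:*}
  sig=${1#*:}
  if [ "$sig" -ne 0 ]; then
    echo "terminated by signal $sig"
  elif [ "$code" -ne 0 ]; then
    echo "exited with code $code"
  else
    echo "completed successfully"
  fi
}

# Usage:
# sacct -n -P -j <jobid> --format=JobID,ExitCode |
#   while IFS='|' read -r id ec; do echo "$id: $(explain_exit_code "$ec")"; done
```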

Types of tasks

Consecutive tasks

#!/bin/bash

#SBATCH --time=4:00:00

#SBATCH --job-name=steps

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=8

#SBATCH --output=job_output.%j.out

# %j will be replaced with the job ID

# By default, each srun uses all resources of the allocation

# (8 tasks for each job step)

srun ./job.1

srun ./job.2

srun ./job.3

Forms of parallelism

- multithreaded jobs

- multicore jobs

- array jobs

- multi node jobs

- hybrid jobs (multithreaded/multicore jobs on multiple nodes)

Multithreaded jobs

Multithreaded jobs use multiple hardware threads per core. The number of threads per core is requested with the option --threads-per-core.

Example of a multithreaded job:

#!/bin/bash

#SBATCH --job-name=MultiThreadJob

#SBATCH --threads-per-core=2

#SBATCH --ntasks=2

srun hello.sh

Multicore jobs

For demanding jobs, you can reserve several cores for each task with the option -c or --cpus-per-task, which specifies how many cores are reserved per task.

Example of a multicore job:

#!/bin/bash

#SBATCH --job-name=MultiCoreJob

#SBATCH --cpus-per-task=2

#SBATCH --ntasks=2

srun hello.sh

Array jobs

Multi node jobs

Parallel jobs can be run on multiple nodes with the option -N or --nodes.

Example of a job on multiple nodes:

#!/bin/bash

#SBATCH --job-name=MultiNodeJob

#SBATCH --nodes=2

#SBATCH --ntasks=4

#SBATCH --ntasks-per-node=2

srun hello.sh

Hybrid jobs

Hybrid jobs combine the approaches above: they run tasks on multiple nodes and use multiple cores for each task.

Example of a hybrid job:

#!/bin/bash

#SBATCH --job-name=HybridJob

#SBATCH --nodes=2

#SBATCH --ntasks=4

#SBATCH --ntasks-per-node=2

#SBATCH --cpus-per-task=3

srun hello.sh
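In hybrid jobs, each task usually runs several threads, e.g. with OpenMP. When --cpus-per-task is set, SLURM exports SLURM_CPUS_PER_TASK to the job, and the number of OpenMP threads can be matched to it through OMP_NUM_THREADS. A minimal sketch, assuming an OpenMP program; set_omp_threads and ./hybrid_program are hypothetical names, and the helper falls back to one thread when the variable is not set.

```bash
# set_omp_threads (hypothetical helper): use as many OpenMP threads per task
# as cores were reserved with --cpus-per-task; default to 1 otherwise.
set_omp_threads() {
  OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK:-1}
  export OMP_NUM_THREADS
}

# In the hybrid job script above this would give 3 threads per task:
# set_omp_threads
# srun -c "$SLURM_CPUS_PER_TASK" ./hybrid_program
```

Passing -c to srun explicitly is a cautious choice, because some SLURM versions do not pass --cpus-per-task from the batch script on to srun.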

Parallel tasks

#!/bin/bash

#SBATCH --time=4:00:00

#SBATCH --job-name=parallel

#SBATCH --nodes=8

#SBATCH --output=job_output.%j.out

srun -N 2 -n 6 --cpu-bind=cores ./task.1 &

srun -N 4 -n 6 -c 2 --cpu-bind=cores ./task.2 &

srun -N 2 -n 6 --cpu-bind=cores ./task.3 &

wait
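In the script above, wait without arguments waits for all background steps but always returns 0, so a failed step goes unnoticed. Waiting for each process ID separately returns that process's exit status. A minimal sketch with a hypothetical helper run_parallel, which starts each command string in the background and fails if any of them fails:

```bash
# run_parallel (hypothetical helper): start every argument as a shell command
# in the background, then wait for each one and return 1 if any of them failed.
run_parallel() {
  local pids="" rc=0 cmd pid
  for cmd in "$@"; do
    sh -c "$cmd" &
    pids="$pids $!"
  done
  for pid in $pids; do
    wait "$pid" || rc=1
  done
  return "$rc"
}

# Usage inside the sbatch script above:
# run_parallel "srun -N 2 -n 6 --cpu-bind=cores ./task.1" \
#              "srun -N 4 -n 6 -c 2 --cpu-bind=cores ./task.2" \
#              "srun -N 2 -n 6 --cpu-bind=cores ./task.3"
```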

Interactive and graphical sessions

Interactive sessions

- You can connect to the allocated nodes directly with the commands srun --pty bash and salloc.

- You cannot connect to nodes that were not allocated to you; if you try, you receive the error message Access denied by pam_slurm_adopt: you have no active jobs on this node.

- The command srun --pty bash allocates compute nodes and starts a new bash shell on them. salloc works similarly to srun --pty bash; the difference is that salloc starts the bash shell on the login node, which makes it usable for running GUI programs, while the job steps still run on the assigned compute nodes. salloc can also start a job at a specific time with the flag salloc --begin.

- If you do not end an interactive session, it runs until the time limit expires.

Example on the NSC cluster: srun --pty bash:

[$USER@nsc1 ~]$ srun --job-name "InteractiveJob" --cpus-per-task 1 --mem-per-cpu 1500 --time 10:00 --pty bash

srun: job <jobid> queued and waiting for resources

srun: job <jobid> has been allocated resources

bash-5.0$

Example on the NSC cluster: salloc:

[$USER@nsc1 ~]$ salloc --job-name "InteractiveJob" --cpus-per-task 1 --mem-per-cpu 1500 --time 10:00

salloc: Pending job allocation <jobid>

salloc: job <jobid> queued and waiting for resources

salloc: job <jobid> has been allocated resources

salloc: Granted job allocation <jobid>

salloc: Waiting for resource configuration

salloc: Nodes nsc-gsv001 are ready for job

bash-5.0$

Graphical sessions X11

- some programs require interactivity and support for graphical interfaces

- SLURM supports interactive sessions with X11 directly from version 17.11 onwards (before that, workarounds were required)

- the Maister and NSC clusters have X11 forwarding enabled

- the option --x11=[batch|first|last|all] specifies on which node(s) X11 forwarding is activated when multiple nodes and tasks are allocated (default value: first)

Example on the Maister cluster:

ssh -X rmaister.hpc-rivr.um.si

salloc -n1

srun --x11 <program_name>

Example for the Relion program package (in the Singularity container):

srun --gres=gpu:4 --pty --x11=first singularity exec --nv /ceph/sys/singularity/relion-cuda92.sif relion

GPGPU, CUDA, OpenCL

- The Maister cluster has 6 GPU nodes, each with 4 Nvidia Tesla V100 cards

- Two ways of requesting GPUs for a job: sbatch --gres=gpu:4 or sbatch --gpus=4 --gpus-per-node=4

- CUDA is installed on the system and uses the compiler /usr/bin/cuda-gcc
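Inside a job that requested GPUs through gres, SLURM normally sets CUDA_VISIBLE_DEVICES to the list of allocated GPU indices (e.g. 0,1,2,3), although the exact behaviour depends on the cluster's gres configuration. Checking it at the start of a job script is a quick sanity test that the GPUs were actually allocated. A minimal sketch with a hypothetical helper count_visible_gpus:

```bash
# count_visible_gpus (hypothetical helper): number of comma-separated entries
# in CUDA_VISIBLE_DEVICES, or 0 if the variable is unset or empty.
count_visible_gpus() {
  if [ -z "${CUDA_VISIBLE_DEVICES:-}" ]; then
    echo 0
  else
    printf '%s\n' "$CUDA_VISIBLE_DEVICES" | tr ',' '\n' | grep -c .
  fi
}

# Usage in a job submitted with --gres=gpu:4:
# [ "$(count_visible_gpus)" -eq 4 ] || echo "expected 4 GPUs" >&2
```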

Parallel jobs with MPI and InfiniBand

SLURM supports three methods for running MPI jobs:

- directly with srun, using the PMI2 or PMIx API

- SLURM allocates resources to the job, then you start the MPI job with mpirun within the sbatch script

- SLURM allocates resources to the job, and mpirun starts the tasks through SSH/RSH (these tasks are not monitored by SLURM)

Some options:

- #SBATCH --ntasks=16: 16 MPI ranks will be started

- #SBATCH --cpus-per-task=1: one core for each MPI rank

- #SBATCH --ntasks-per-socket=8: 8 tasks per socket

- #SBATCH --nodes=1: all ranks will be started on the same node

SLURM PMI/MPI

PMIx is an extended interface for process management. Which PMI API an Open MPI build supports depends on its version:

| Open MPI version | Supported PMI API |
|---|---|
| Open MPI <= 1.X | PMI, but not PMIx |
| 2.X <= Open MPI < 3.X | PMIx 1.X |
| 3.X <= Open MPI < 4.X | PMIx 1.X and 2.X |
| Open MPI >= 4.X | PMIx 3.X |

Which APIs does SLURM support?

Check with srun --mpi=list.

Selecting the correct MPI implementation

Currently, there are two MPI modules on the Maister cluster:

- mpi/openmpi-x86_64: for jobs communicating over TCP

- mpi/openmpi-4.0.2 (default): for jobs communicating over InfiniBand (IB)

Is the task running on a node with correct hardware?

When using MPI over InfiniBand, select nodes with InfiniBand hardware support: --constraint=infiniband

Examples of jobs with MPI

- First, you need the MPI libraries: list the available modules with module avail and load the MPI module with module load mpi.

- The example MPI program hellompi can be downloaded from Wikipedia: http://en.wikipedia.org/wiki/Message_Passing_Interface#Example_program

- Compile it with: mpicc wiki-mpi-example.c -o hello.mpi

(You can also use the following example: MPI hello world.)

MPI hello world

Let's prepare a very simple C program that uses the MPI library and name it hello_mpi.c.

/* C Example */

#include <stdio.h>

#include <mpi.h>

int main(int argc, char *argv[])

{

int rank, size;

MPI_Init (&argc, &argv); /* starts MPI */

MPI_Comm_rank (MPI_COMM_WORLD, &rank); /* get current process id */

MPI_Comm_size (MPI_COMM_WORLD, &size); /* get number of processes */

printf( "Hello world from process %d of %d\n", rank, size );

MPI_Finalize();

return 0;

}

#!/bin/bash

#SBATCH --job-name=test-mpi

#SBATCH --output=result-mpi.txt

#SBATCH --ntasks=4

#SBATCH --time=10:00

#SBATCH --mem-per-cpu=1000

module load mpi

mpicc hello_mpi.c -o hello_mpi.mpi

srun --mpi=pmix ./hello_mpi.mpi
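If the MPI library and SLURM's PMI interface do not match, a common symptom is that every process reports itself as process 0 of 1 instead of forming one MPI job of 4 processes. A minimal sketch of a check on the output file result-mpi.txt written by the script above; check_mpi_output is a hypothetical helper that succeeds only if the expected number of distinct ranks reported "of <n>".

```bash
# check_mpi_output (hypothetical helper): succeed only if <file> contains
# "Hello world from process <rank> of <n>" lines for <n> distinct ranks.
check_mpi_output() {
  local file=$1 expected=$2 found
  found=$(grep -E "^Hello world from process [0-9]+ of ${expected}\$" "$file" \
    | awk '{ print $5 }' | sort -u | awk 'END { print NR }')
  [ "$found" -eq "$expected" ]
}

# Usage after the job has finished:
# check_mpi_output result-mpi.txt 4 || echo "MPI ranks did not start as one job" >&2
```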

mpiBench

You can also use an existing program, e.g. mpiBench, to check whether you are using the correct library and hardware.

An sbatch script that downloads, compiles, and starts mpiBench within the job:

#!/bin/bash

#SBATCH --job-name=test-mpi

#SBATCH --output=result-mpi.txt

#SBATCH --ntasks=4

#SBATCH --nodes=2

#SBATCH --constraint=infiniband

#SBATCH --time=10:00

#SBATCH --mem-per-cpu=1000

module load mpi

git clone https://github.com/LLNL/mpiBench.git

cd mpiBench

make

srun --mpi=pmix -n2 -N2 ./mpiBench

MPI, SLURM, and Singularity

Singularity is a container solution adapted for use in supercomputing environments. Singularity enables portable containers that can run on a desktop or a laptop, but it also supports advanced technologies, such as MPI and GPGPU, and can be used with the SLURM system.

More on containers, software, and the Singularity system:

Software on clusters

The Singularity and MPI combination can be used in two ways:

- if you parallelise within one node, you can use the MPI inside the container

- if you parallelise the job across multiple nodes, you need to use the MPI on the host

#MPI within a container

srun singularity exec centos7.sif mpirun ./hello

#MPI on multiple nodes

mpirun singularity exec centos7.sif ./hello

(Portability and interoperability with MPI and GPGPU libraries are partly problematic.)

Demanding jobs

SLURM supports a number of advanced functions that let you automate workflows, organise data processing, define dependencies between jobs, cancel and restart jobs, etc.

Array jobs

- Array jobs are useful if you want to run a number of similar tasks.

- Parameters can be used for automatic selection of data, file names, etc.

- Array jobs can only be submitted with sbatch.

sbatch --array=1-3 -N1 slurm_script.sh

# e.g. the submission returns job ID 101

The array tasks then run with the following environment variables:

# array index 1

SLURM_JOB_ID=101

SLURM_ARRAY_JOB_ID=101

SLURM_ARRAY_TASK_ID=1

# array index 2

SLURM_JOB_ID=102

SLURM_ARRAY_JOB_ID=101

SLURM_ARRAY_TASK_ID=2

# array index 3

SLURM_JOB_ID=103

SLURM_ARRAY_JOB_ID=101

SLURM_ARRAY_TASK_ID=3

Example of an array job script:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --output=program-%A_%a.out

#SBATCH --error=program-%A_%a.err

#SBATCH --time=00:30:00

#SBATCH --array=101-103

#SBATCH --ntasks-per-node=24

# %A = array job ID, %a = array task index (used in the file names above)

srun ./program input_${SLURM_ARRAY_TASK_ID}.txt
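When input files do not follow a numbered naming scheme like input_101.txt, a common pattern is to list them in a text file, one per line, and let each array task pick the line that matches its SLURM_ARRAY_TASK_ID. A minimal sketch, assuming a hypothetical list file inputs.txt and an array numbered from 1 (e.g. --array=1-3); pick_input is a hypothetical helper.

```bash
# pick_input (hypothetical helper): print line number <index> of <list_file>.
pick_input() {
  sed -n "${2}p" "$1"
}

# In an array job script submitted with --array=1-3:
# input=$(pick_input inputs.txt "$SLURM_ARRAY_TASK_ID")
# srun ./program "$input"
```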

Job dependencies

- job dependencies make it possible to build simple workflows

- dependencies help manage how resources are used across related jobs

- dependencies are set with #SBATCH --dependency=<type>, which has multiple options:

- after:jobID: start after job jobID has started

- afterany:jobID: start after job jobID has finished (in any state)

- afterok:jobID: start after job jobID has completed successfully

- afternotok:jobID: start after job jobID has failed

- singleton: start after all previously submitted jobs with the same name (and user) have finished
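Dependencies are often set on the command line, using the job ID of an earlier job. The following minimal sketch submits several job scripts so that each one starts only after the previous one has completed successfully (afterok). submit_chain is a hypothetical helper, and it relies on sbatch --parsable, which prints only the job ID (optionally followed by ;cluster).

```bash
# submit_chain (hypothetical helper): submit the given job scripts in order;
# every job after the first depends on the previous one with afterok.
# Prints the job IDs, one per line.
submit_chain() {
  local prev="" id script
  for script in "$@"; do
    if [ -z "$prev" ]; then
      id=$(sbatch --parsable "$script") || return 1
    else
      id=$(sbatch --parsable --dependency=afterok:"$prev" "$script") || return 1
    fi
    id="${id%%;*}"   # drop an optional ";cluster" suffix
    echo "$id"
    prev=$id
  done
}

# Usage (hypothetical script names):
# submit_chain preprocess.sh simulate.sh postprocess.sh
```

If one job in the chain fails, the jobs after it cannot start; they stay pending (reason DependencyNeverSatisfied) and can be removed with scancel.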

Other advanced options

SLURM also enables a number of advanced options, such as:

- heterogeneous jobs, where you can use one allocation to manage possibly different tasks and thus improve resource efficiency: https://slurm.schedmd.com/heterogeneous_jobs.html

- control over threading, distribution between cores, cache and processors, as well as other options, which can drastically improve the operation of multithreaded and memory intensive applications (requires an extensive knowledge of the cluster architecture and system configuration): https://slurm.schedmd.com/mc_support.html

- options for resource binding: https://slurm.schedmd.com/resource_binding.html

- alternative options to support parallelisation and MPI: https://slurm.schedmd.com/mpi_guide.html

Good practices

It is sensible to run interconnected tasks in the same allocation, i.e. as part of the same job. The individual tasks/steps can differ, the load is distributed more sensibly, and the load on the system is smaller.

Tasks with identical resource requirements and the same way of invocation should be submitted as job arrays. Most commands can act on the entire array or on individual tasks in it.

When using SLURM, you need to adapt to the settings of the cluster and its login node and follow the instructions of the cluster administrators. (ARC adds an additional level of abstraction, which enables a more uniform way of use across clusters.)

Maister cluster:

- larger temporary space on request: /data1

- larger I/O on request: /ceph/grid/data (or ARC)

- several shorter jobs are better than one long one (currently partition long)