Cluster Hardware Architecture

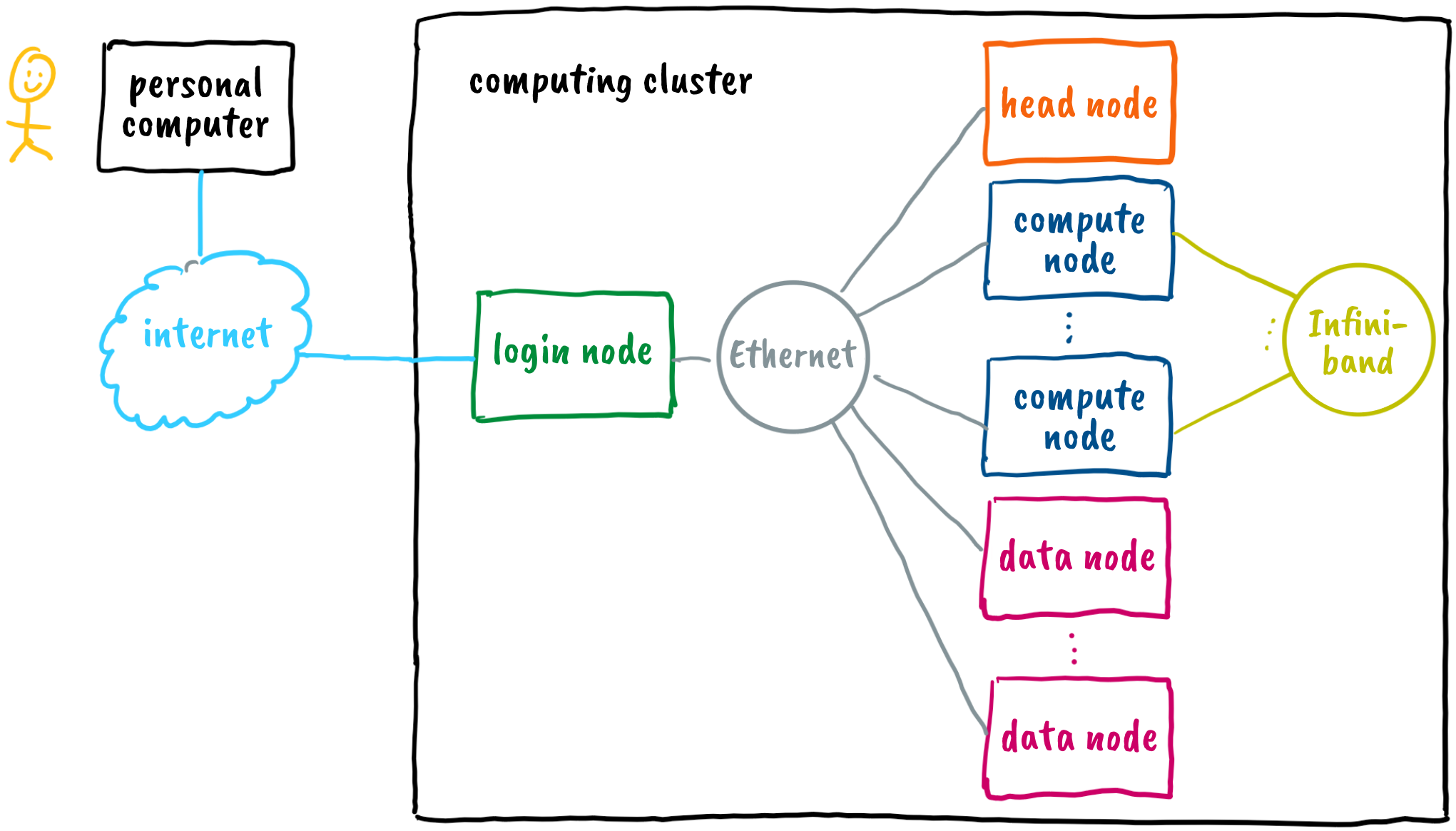

A computer cluster is composed of a set of nodes that are tightly connected in a network. The nodes operate much like personal computers and are built from the same components: processors, memory, and input/output devices. Cluster nodes are, of course, superior in the quantity, performance, and quality of their components, but they usually lack I/O devices such as a keyboard, mouse, and display.

Node Types

Clusters contain several types of nodes, each structured according to its role in the system. The types most relevant to users are:

- head nodes,

- login nodes,

- compute nodes, and

- data nodes.

The head node keeps the whole cluster running in a coordinated manner. It runs programs that monitor the status of other nodes, distribute jobs to compute nodes, supervise job execution, and perform other management tasks.
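To make the idea of distributing jobs concrete, here is a minimal sketch of one possible placement policy, first fit: each job goes to the first compute node that still has enough free cores, and jobs that fit nowhere wait in a queue. The names `Node`, `Job`, and `dispatch` are hypothetical, and real workload managers on a head node use far more elaborate policies (priorities, time limits, reservations).

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cores: int  # cores not yet reserved by any job


@dataclass
class Job:
    job_id: int
    cores: int  # number of cores the job requests


def dispatch(jobs, nodes):
    """Assign each job to the first node with enough free cores (first fit).

    Returns (placements, pending): placements maps job_id -> node name,
    pending lists the jobs that could not be placed and must wait.
    """
    placements = {}
    pending = []
    for job in jobs:
        for node in nodes:
            if node.free_cores >= job.cores:
                node.free_cores -= job.cores  # reserve the cores on this node
                placements[job.job_id] = node.name
                break
        else:
            pending.append(job)  # no node can host the job right now
    return placements, pending


if __name__ == "__main__":
    nodes = [Node("node01", 64), Node("node02", 64)]
    jobs = [Job(1, 48), Job(2, 32), Job(3, 16), Job(4, 64)]
    placements, pending = dispatch(jobs, nodes)
    print(placements)                    # {1: 'node01', 2: 'node02', 3: 'node01'}
    print([j.job_id for j in pending])   # [4]
```

Job 4 waits because, after the first three jobs, no node has 64 free cores left; a real scheduler would start it once enough cores are released.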

Users access the cluster through a login node using the SSH protocol. The login node enables users to transfer data and programs to and from the cluster; prepare, monitor, and manage jobs for compute nodes; reserve computational resources on compute nodes; log in to compute nodes; and perform similar tasks.

Jobs prepared on the login nodes are executed on the compute nodes. Several types of compute nodes are available, including standard nodes with processors only, nodes with more memory, and nodes equipped with accelerators such as general-purpose graphics processing units (GPUs). Compute nodes can also be organized into groups, called partitions.

Data and programs are stored on data nodes, which together form a distributed file system such as Ceph. The distributed file system is accessible from all login and compute nodes, and files transferred to the cluster through a login node are stored in it.

All nodes are interconnected by high-speed network links, typically Ethernet and sometimes also InfiniBand (IB) for efficient communication between compute nodes. Network links should have high bandwidth (the ability to transfer large amounts of data per unit of time) and low latency (a short delay before a message sent by one node reaches another).
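Why both properties matter can be seen from a common first-order model, which ignores protocol overhead and congestion: the time to send a message is t = latency + size / bandwidth. The sketch below evaluates this model for two hypothetical links; their parameters are made up for illustration and are not measurements of Ethernet, InfiniBand, or any cluster mentioned here.

```python
def transfer_time(size_bytes, latency_s, bandwidth_bytes_per_s):
    """First-order model of message transfer time: latency + size / bandwidth."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


# Hypothetical link parameters (latency in seconds, bandwidth in bytes/second),
# chosen only to illustrate the model.
LINKS = {
    "link A (low latency, high bandwidth)": (1e-6, 12.5e9),
    "link B (higher latency, lower bandwidth)": (50e-6, 1.25e9),
}

if __name__ == "__main__":
    for size in (8, 1_000_000_000):  # an 8-byte message and a 1 GB file
        for name, (latency, bandwidth) in LINKS.items():
            t = transfer_time(size, latency, bandwidth)
            print(f"{size:>13} B over {name}: {t:.6f} s")
```

For the 8-byte message almost all of the time is latency, while for the 1 GB file almost all of it is size / bandwidth. Programs that exchange many small messages between compute nodes therefore benefit most from low latency, and programs that move large data sets from high bandwidth.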

Node Architecture

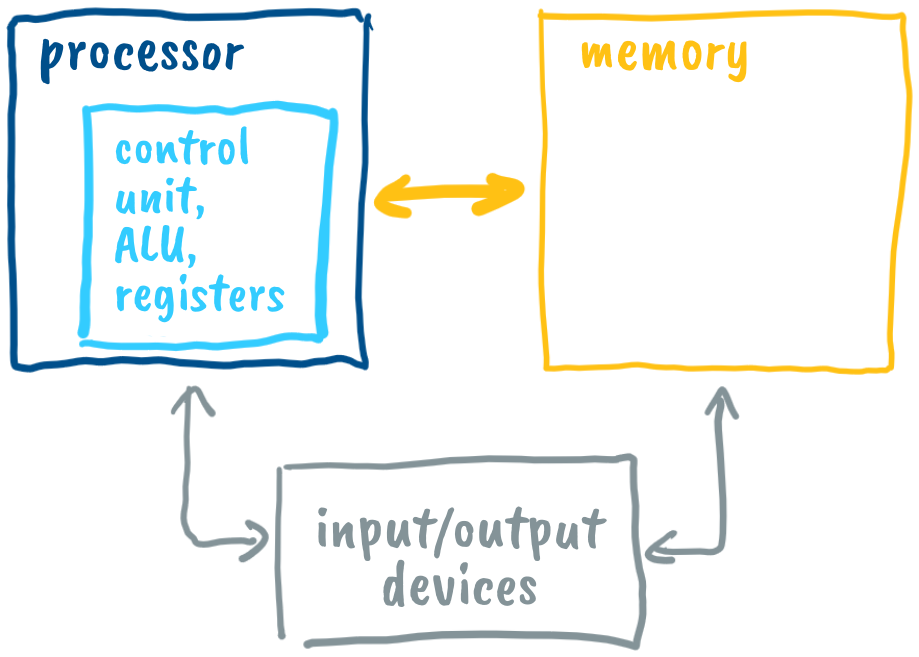

The vast majority of today's computers follow the von Neumann architecture. In the processor, a control unit coordinates the operation of the system: it reads instructions and operands from memory and writes the results back to memory. The processor executes instructions in an arithmetic logic unit (ALU), assisted by registers, small and fast storage locations that, for example, track program flow or hold intermediate results. The memory stores both instructions and data (operands), while input/output units handle data transfer between the processor, memory, and external devices.
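The cycle described above can be illustrated with a toy machine. In this sketch, which assumes a made-up four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and a hypothetical `run` function, a single list serves as memory for both instructions and data, `pc` plays the role of the program counter register, and `acc` is an accumulator register holding intermediate results. Real instruction sets are, of course, far richer.

```python
def run(memory):
    """Fetch-decode-execute loop of a toy von Neumann machine.

    `memory` is one list holding both instructions (tuples) and data (ints).
    Registers: pc (program counter) and acc (accumulator).
    """
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: holds intermediate results
    while True:
        op, addr = memory[pc]  # fetch the instruction from memory
        pc += 1
        if op == "LOAD":  # decode and execute
            acc = memory[addr]  # read an operand from memory
        elif op == "ADD":
            acc += memory[addr]  # arithmetic performed by the "ALU"
        elif op == "STORE":
            memory[addr] = acc  # write the result back to memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")


if __name__ == "__main__":
    program = [
        ("LOAD", 5),     # 0: acc <- mem[5]
        ("ADD", 6),      # 1: acc <- acc + mem[6]
        ("STORE", 7),    # 2: mem[7] <- acc
        ("HALT", None),  # 3: stop
        None,            # 4: unused
        2,               # 5: operand
        3,               # 6: operand
        0,               # 7: result
    ]
    print(run(program)[7])  # 5
```

Because instructions and operands share the same memory, the program could in principle even overwrite its own instructions, a defining trait of the von Neumann design.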

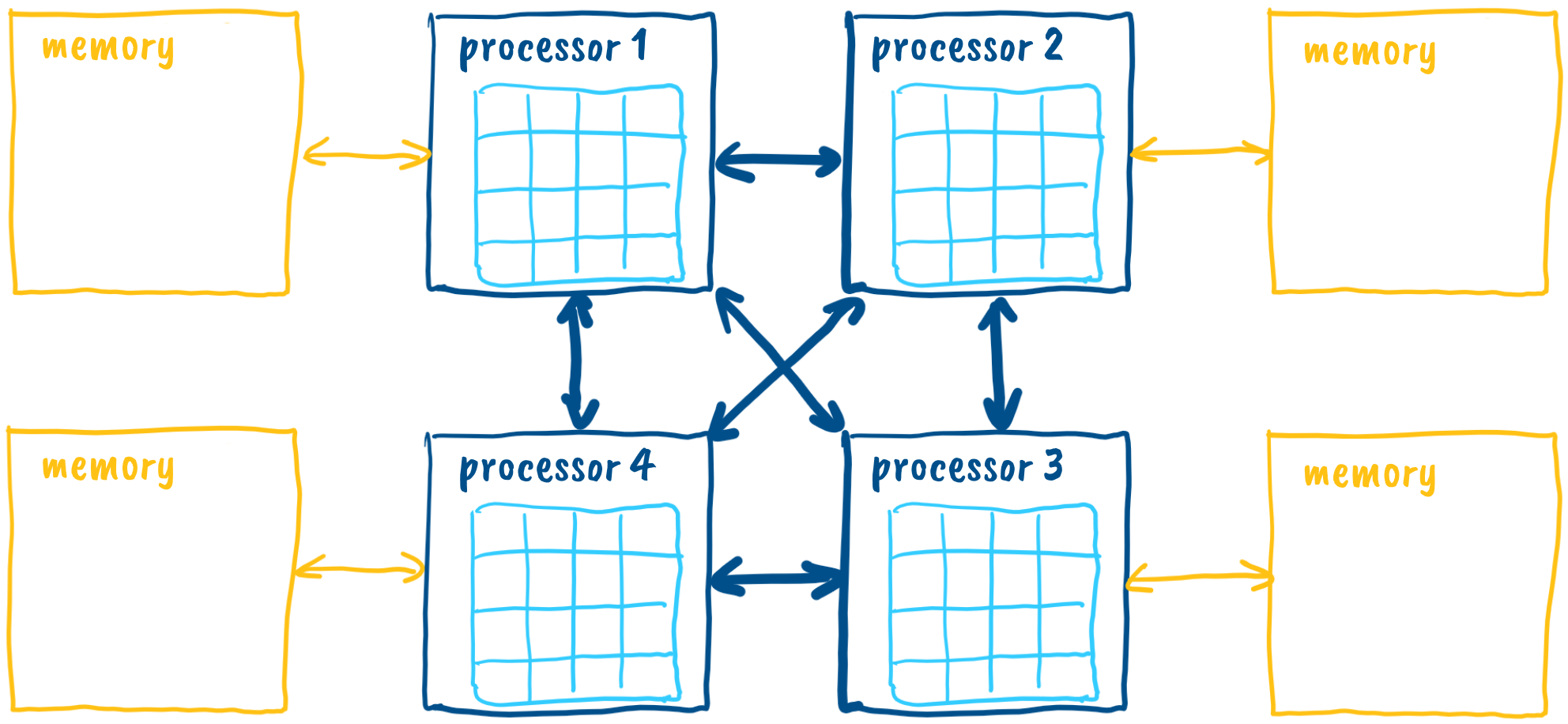

For a long time, computers had a single processor, or central processing unit (CPU), which became increasingly powerful over time. To meet the growing demand for processing power and energy efficiency, processors have since been divided into multiple cores that operate in parallel and share a common memory. In addition, some cores can execute several instruction streams concurrently; these streams are known as hardware threads, and the technique as simultaneous multithreading (SMT, which Intel calls hyper-threading).

In modern computer clusters, compute nodes typically have multiple processor sockets. These processors and their cores operate in parallel and share a common memory, which is physically distributed among the processors to improve efficiency: each processor accesses its own part of the memory faster than the parts attached to the other processors. For example, many compute nodes in the NSC cluster are equipped with four tightly interconnected AMD Opteron 6376 processors, each with 16 cores, for a total of 64 cores per node. Compute nodes in the Maister and Trdina clusters have 128 cores per node.
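The core count of a node follows from simple multiplication: sockets × cores per socket, and, with SMT, × hardware threads per core for the number of logical CPUs the operating system sees. The sketch below uses a hypothetical helper `cores_per_node`; the NSC figures come from the text, while the second layout is an assumed example and is not meant to describe Maister or Trdina. The standard-library calls `os.cpu_count()` and, on Linux, `os.sched_getaffinity(0)` report what the current machine actually offers.

```python
import os


def cores_per_node(sockets, cores_per_socket, threads_per_core=1):
    """Return (physical cores, logical CPUs) for a node with the given layout."""
    physical = sockets * cores_per_socket
    return physical, physical * threads_per_core


if __name__ == "__main__":
    # NSC example from the text: four 16-core AMD Opteron 6376 processors.
    print(cores_per_node(4, 16))  # (64, 64)
    # Hypothetical layout: 2 sockets x 64 cores, 2 hardware threads per core.
    print(cores_per_node(2, 64, threads_per_core=2))  # (128, 256)

    # What the operating system reports on the machine running this script.
    print("logical CPUs:", os.cpu_count())  # may be None if undetermined
    if hasattr(os, "sched_getaffinity"):  # available on Linux
        # CPUs this process is allowed to run on (on a cluster, a job
        # may be restricted to a subset of the node's CPUs).
        print("usable CPUs:", len(os.sched_getaffinity(0)))
```

On a compute node, the number of usable CPUs can be smaller than the number of logical CPUs, because a job typically receives only the cores it reserved.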

A modern compute node with two processor sockets, memory and a multitude of GPUs is shown in the figure below.

Graphics Processing Unit

Some nodes are equipped with additional computational accelerators, the most common of which is the graphics processing unit (GPU). GPUs were originally designed to offload the CPU when rendering graphics on screen, which requires a multitude of independent calculations, one for each of millions of pixels. Today they are also widely used purely for computation, in which case we speak of general-purpose graphics processing units (GPGPUs). They are ideal when many similar computations must be performed on a large amount of data with few dependencies between them, as in deep learning in artificial intelligence and in video processing.
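The property that makes such work suitable for a GPU is independence: the result for each pixel depends only on that pixel. The sketch below runs in plain Python on the CPU and does not use a GPU at all; it only demonstrates that processing the pixels of a made-up grayscale "image" in any order gives the same result. The functions `brighten` and `process_in_order` are hypothetical.

```python
import random


def brighten(pixel, amount):
    """Per-pixel work: depends only on this pixel, so all pixels are independent."""
    return min(pixel + amount, 255)  # clamp to the 8-bit maximum


def process_in_order(image, amount, order):
    """Apply `brighten` to every pixel, visiting them in the given order."""
    out = [0] * len(image)
    for i in order:
        out[i] = brighten(image[i], amount)
    return out


if __name__ == "__main__":
    image = [random.randrange(256) for _ in range(1_000)]  # tiny grayscale "image"
    sequential = process_in_order(image, 40, range(len(image)))
    shuffled = list(range(len(image)))
    random.shuffle(shuffled)
    # Any visiting order gives the same result, which is why a GPU can
    # hand each pixel to a different thread and process them simultaneously.
    print(sequential == process_in_order(image, 40, shuffled))  # True
```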

The architecture of a GPU is quite different from that of a conventional processor. Therefore, existing programs must be extensively reworked to run efficiently on a GPU.
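One common example of such rework is summing an array. A sequential loop forms a chain in which each addition depends on the previous one, so it cannot be spread across many GPU threads. A pairwise (tree) reduction restructures the same computation into rounds in which all additions are independent, needing only about log2(n) rounds. The sketch below, with hypothetical functions `sequential_sum` and `tree_sum`, runs on the CPU and only shows the restructuring, not actual GPU code.

```python
def sequential_sum(values):
    """Each addition depends on the previous one: a chain of n - 1 steps."""
    total = 0
    for v in values:
        total += v
    return total


def tree_sum(values):
    """Pairwise (tree) reduction.

    Within one round every pair is added independently, so on a GPU each
    pair could be handled by its own thread; about log2(n) rounds are needed.
    Returns (sum, number_of_rounds).
    """
    level = list(values)
    if not level:
        return 0, 0
    rounds = 0
    while len(level) > 1:
        if len(level) % 2:  # odd length: pad with the neutral element 0
            level.append(0)
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        rounds += 1
    return level[0], rounds


if __name__ == "__main__":
    data = list(range(1, 1025))  # 1, 2, ..., 1024
    print(sequential_sum(data))  # 524800
    print(tree_sum(data))        # (524800, 10)
```

With floating-point data the two results can differ in the last digits, because the additions are performed in a different order.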