Execution model

Graphics processing units with many streaming processors (SPs) are ideal for accelerating data-parallel problems. Typical data-parallel problems involve computations on large vectors, matrices or images, where the same operation is performed on thousands or even millions of data elements simultaneously. To take advantage of the massive parallelism that GPUs offer, we need to divide our programs into thousands of threads. As a rule, a thread on a GPU performs a sequence of operations on a particular data element (for example, one element of a matrix), and this sequence is usually independent of the same operations performed by other threads on other elements. A program written in this way is transferred to the GPU, where the internal thread schedulers assign the threads to streaming multiprocessors (SMs) and SPs.
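The following minimal host-side C++ sketch makes the one-thread-per-element decomposition concrete, and it runs without a GPU. It only models what a kernel launch does. The two nested loops stand in for the thread blocks and threads that the GPU would run in parallel. Each (block, thread) pair computes a global index and processes exactly one element. In a real CUDA kernel, the index would come from the built-in variables as `blockIdx.x * blockDim.x + threadIdx.x`. The function name `vector_add_model` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// CPU model of a CUDA-style launch (an illustration, not CUDA code): each
// (block, thread) pair handles exactly one element, found via its global index.
void vector_add_model(const std::vector<float>& a, const std::vector<float>& b,
                      std::vector<float>& c, std::size_t block_size) {
    assert(block_size > 0 && a.size() == b.size() && a.size() == c.size());
    const std::size_t n = a.size();
    // Launch just enough blocks to cover all n elements (ceiling division).
    const std::size_t num_blocks = (n + block_size - 1) / block_size;
    for (std::size_t block = 0; block < num_blocks; ++block) {         // GPU: blocks run in parallel on SMs
        for (std::size_t thread = 0; thread < block_size; ++thread) {  // GPU: threads run in parallel on SPs
            const std::size_t i = block * block_size + thread;         // global thread index
            if (i < n) {                                               // the last block may be only partly used
                c[i] = a[i] + b[i];                                    // same operation, different data
            }
        }
    }
}
```

For example, calling `vector_add_model(a, b, c, 256)` on vectors of one million elements models a launch of 3907 blocks of 256 threads. The `if (i < n)` guard keeps the surplus threads of the last block idle.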

An execution model provides a view of how a particular computing architecture executes instructions. The CUDA execution model exposes an abstract view of the GPU parallel architecture, allowing you to reason about thread concurrency. GPUs are built around an array of Streaming Multiprocessors (SMs), and GPU hardware parallelism is achieved by replicating this architectural building block. The key components of an SM are:

- Streaming Processors,

- Shared Memory,

- Register File,

- Load/Store Units,

- Special Function Units,

- Warp Scheduler.

Each SM is designed to support the concurrent execution of hundreds of threads by replicating Streaming Processors (SPs). A GPU generally contains multiple SMs, so thousands of threads can execute concurrently on a single GPU.

Threads are scheduled in two steps:

- The GPU assigns thread blocks to SMs. The programmer explicitly groups individual threads into thread blocks when launching a kernel. All threads of a thread block execute on the same SM. Each SM has built-in shared memory that is accessible to all threads within a thread block, so these threads can exchange data quickly and easily. They can also synchronize with each other cheaply. When a kernel is launched, the GPU's main scheduler distributes the kernel's thread blocks among the available SMs. Thread blocks execute completely independently of each other and in any order. Several thread blocks can be resident on one SM at the same time (up to 16 on Kepler and 32 on Ampere), depending on the availability of SM resources. Once a thread block is scheduled on an SM, its threads execute only on that SM until the block completes.

- Each SM partitions its thread blocks into warps and schedules the warps onto its SPs and other execution units.
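The first step can be illustrated with a deliberately simplified counting model. The sketch below assumes that all thread blocks take equally long and that each SM holds at most a fixed number of blocks at a time. In reality, the number of resident blocks also depends on each block's register and shared-memory usage. Under these assumptions, blocks that do not fit on the GPU at once wait for an SM to free its resources, so the grid executes in successive "waves". The function `num_waves` is hypothetical.

```cpp
#include <cassert>

// Idealized model (an assumption): every block takes the same time and each SM
// holds at most `resident_blocks_per_sm` blocks at once. Blocks that do not fit
// in the current round wait for free SM resources, so the grid runs in "waves".
int num_waves(int num_blocks, int num_sms, int resident_blocks_per_sm) {
    assert(num_blocks >= 0 && num_sms > 0 && resident_blocks_per_sm > 0);
    const int concurrent_blocks = num_sms * resident_blocks_per_sm;   // blocks the whole GPU holds at once
    return (num_blocks + concurrent_blocks - 1) / concurrent_blocks;  // ceiling division
}
```

For example, a GPU with 15 SMs that each hold up to 16 blocks (the Kepler limit) can run 240 blocks at once. So `num_waves(1000, 15, 16)` returns 5: four full waves and a final, partly filled one.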

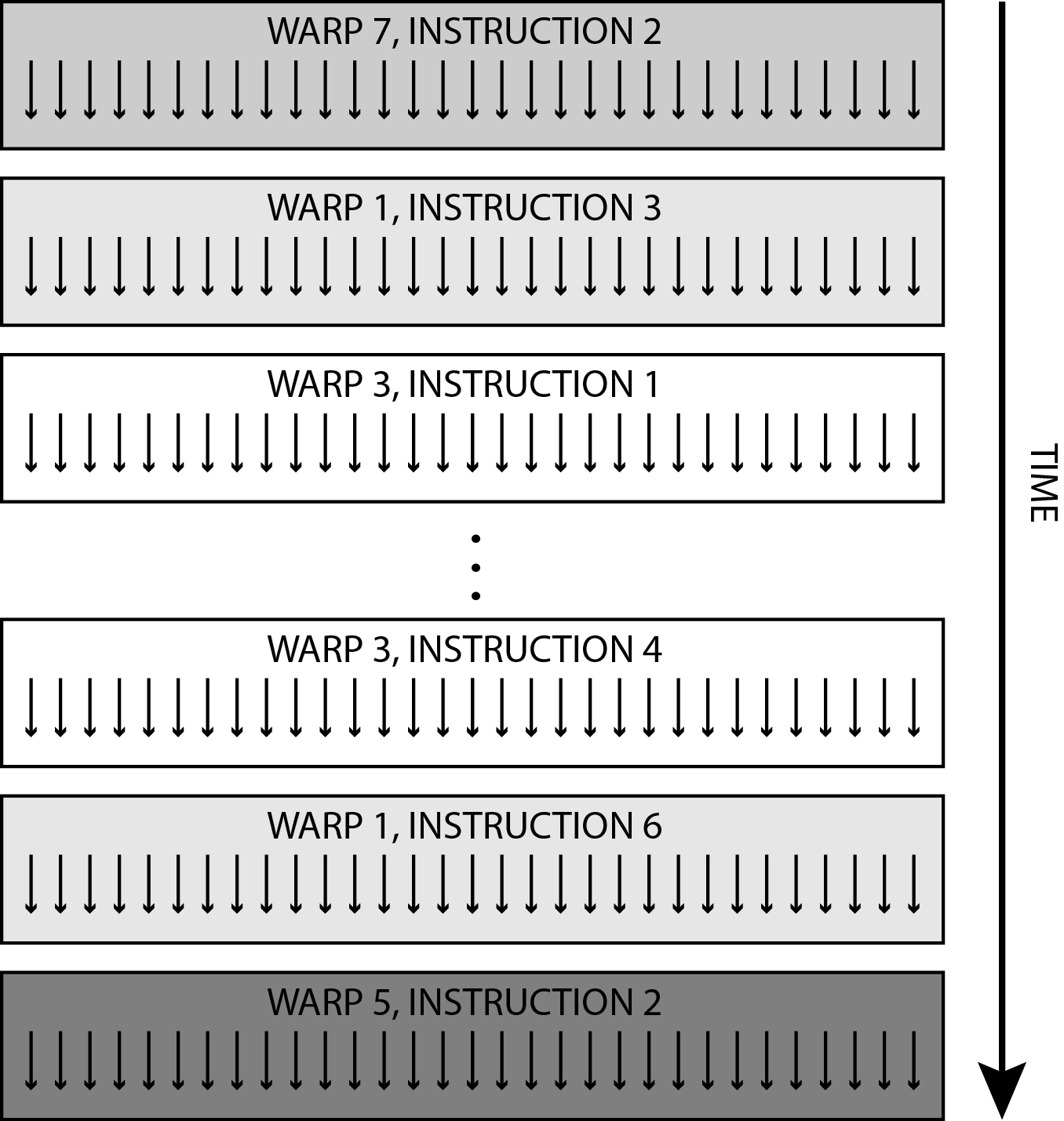

GPUs employ a Single Instruction Multiple Thread (SIMT) architecture to manage and execute threads in groups of 32 called warps. All threads in a warp execute the same instruction at the same time, in lock-step fashion, each on its own data. Each SM partitions the thread blocks assigned to it into 32-thread warps, which it then schedules for execution on the available hardware resources (mainly SPs). The SM's thread scheduler, called the warp scheduler, issues instructions from these warps to the SPs and other execution units on a per-warp basis. Each thread has its own program counter and private registers and is therefore free to branch. However, the best performance is achieved when all threads of a warp execute the same instructions. If the threads in a warp take different branches, their execution is serialized until they all come back to the same instruction, which significantly reduces execution efficiency. As programmers, we should therefore avoid unnecessary branches (if-else statements) whose outcome differs between threads of the same warp. The execution of threads in a warp is shown in the figure above.
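The cost of divergence can be sketched with a simple model for a single if-else statement. If all 32 threads of a warp evaluate the condition the same way, the warp executes one path. If they disagree, it executes both paths one after the other, with the inactive threads masked off. The C++ function `paths_executed` below is a hypothetical helper, not a CUDA API. It applies this rule to the 32 consecutive thread IDs of one warp.

```cpp
#include <cassert>
#include <functional>

constexpr int kWarpSize = 32;

// Simplified divergence model for one if-else: a warp runs one path if all of
// its 32 threads agree on the condition, and both paths (serialized) otherwise.
int paths_executed(int warp_id, const std::function<bool(int)>& condition) {
    assert(warp_id >= 0);
    bool any_true = false;
    bool any_false = false;
    for (int lane = 0; lane < kWarpSize; ++lane) {
        const int thread_id = warp_id * kWarpSize + lane;  // a warp holds consecutive thread IDs
        if (condition(thread_id)) {
            any_true = true;
        } else {
            any_false = true;
        }
    }
    return (any_true && any_false) ? 2 : 1;
}
```

A condition such as `threadIdx.x % 2 == 0` splits every warp in half, so in this model each warp executes both paths. The condition `(threadIdx.x / 32) % 2 == 0` gives every thread of a warp the same outcome, so no warp diverges.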

Warps

A warp is a group of 32 threads from the same thread block that execute in parallel, in lock-step fashion. Each warp contains threads of consecutive, increasing thread IDs. Individual threads within a warp start together at the same program address, but they have their own program counter and private registers and are therefore free to branch and execute independently. However, the best performance is achieved when all threads of the same warp execute the same instructions.
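Warps are formed from consecutive thread IDs. This means we can compute which warp a thread belongs to and its position (lane) within that warp. For a two-dimensional block of size Dx by Dy, the CUDA programming guide defines the thread ID of thread (x, y) as x + y * Dx. The host-side C++ sketch below applies that rule, and the struct and function names are hypothetical. In a kernel with a one-dimensional block, the same values are `threadIdx.x / warpSize` and `threadIdx.x % warpSize`.

```cpp
#include <cassert>

constexpr int kWarpSize = 32;

struct WarpPosition {
    int warp;  // index of the warp within the thread block
    int lane;  // position of the thread inside its warp (0..31)
};

// Threads of a 2D block are linearized x-first (thread ID = x + y * block_dim_x),
// and each run of 32 consecutive thread IDs forms one warp.
WarpPosition warp_position(int x, int y, int block_dim_x) {
    assert(x >= 0 && x < block_dim_x && y >= 0);
    const int thread_id = x + y * block_dim_x;
    return {thread_id / kWarpSize, thread_id % kWarpSize};
}
```

For a 16 × 16 block, thread (5, 3) has thread ID 53, so it is lane 21 of warp 1. Each warp of such a block spans two rows of 16 threads.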

The number 32 is fixed by the hardware and has a significant impact on software performance. You can think of it as the granularity of work that an SM processes simultaneously in lock-step fashion. Optimizing your workloads to fit within the boundaries of a warp (a group of 32 threads) will generally lead to more efficient utilization of GPU compute resources.
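An SM always schedules whole warps. The number of warps per block is therefore the block size divided by 32, rounded up. Any block size that is not a multiple of 32 leaves some lanes of its last warp without a thread. The small C++ helpers below compute both quantities, and their names are hypothetical.

```cpp
#include <cassert>

constexpr int kWarpSize = 32;

// A block is split into ceil(threads / 32) warps; the last warp may be partial.
int warps_per_block(int threads_per_block) {
    assert(threads_per_block > 0);
    return (threads_per_block + kWarpSize - 1) / kWarpSize;
}

// Lanes in the last warp that occupy a warp slot but have no thread to run.
int idle_lanes(int threads_per_block) {
    return warps_per_block(threads_per_block) * kWarpSize - threads_per_block;
}
```

A block of 100 threads occupies four warps (128 thread slots) and leaves 28 lanes idle. A block of 128 threads fills its four warps completely.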

Depending on the hardware, the number of threads in one thread block is limited. The number of thread blocks and the number of threads that can be assigned to one SM at the same time are also limited. While warps within a thread block may be scheduled in any order, the number of active warps is limited by SM resources. When a warp idles for any reason (for example, while it waits for data from memory), the SM is free to schedule another available warp from any thread block that is resident on the same SM. Switching between concurrent warps has no overhead, because hardware resources are partitioned among all threads and blocks on an SM, so the state of the newly scheduled warp is already stored on the SM.

Since 2006, NVIDIA has introduced a series of GPU architectures, with each successive generation offering more processing power and more software features. The architectures are named after famous scientists: Tesla, Fermi, Kepler, Maxwell, Pascal, Volta, Turing and Ampere. The specific capability of a particular architecture is known as its Compute Capability (CC), a version number of the form major.minor that increases with each generation. For example, the Ampere A100 GPU has a CC of 8.0. Let's take a closer look at two of these architectures: NVIDIA Fermi and NVIDIA Kepler.
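The mapping between compute capability and architecture name can be written as a small lookup. The C++ function below covers only the architectures listed above (Tesla through Ampere) and returns "unknown" for anything else, including newer generations. In a real program, the compute capability of the installed GPU can be read at run time from the `major` and `minor` fields of the `cudaDeviceProp` structure filled in by `cudaGetDeviceProperties`. The function `architecture_name` itself is hypothetical.

```cpp
#include <cassert>
#include <string>

// Maps a compute capability (major.minor) to its architecture name, for the
// generations named in the text only; anything else is reported as "unknown".
std::string architecture_name(int major, int minor) {
    assert(major >= 0 && minor >= 0);
    switch (major) {
        case 1: return "Tesla";
        case 2: return "Fermi";
        case 3: return "Kepler";
        case 5: return "Maxwell";
        case 6: return "Pascal";
        case 7: return minor < 5 ? "Volta" : "Turing";     // 7.0/7.2 Volta, 7.5 Turing
        case 8: return minor <= 7 ? "Ampere" : "unknown";  // 8.0, 8.6, 8.7 Ampere
        default: return "unknown";
    }
}
```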

Fermi microarchitecture

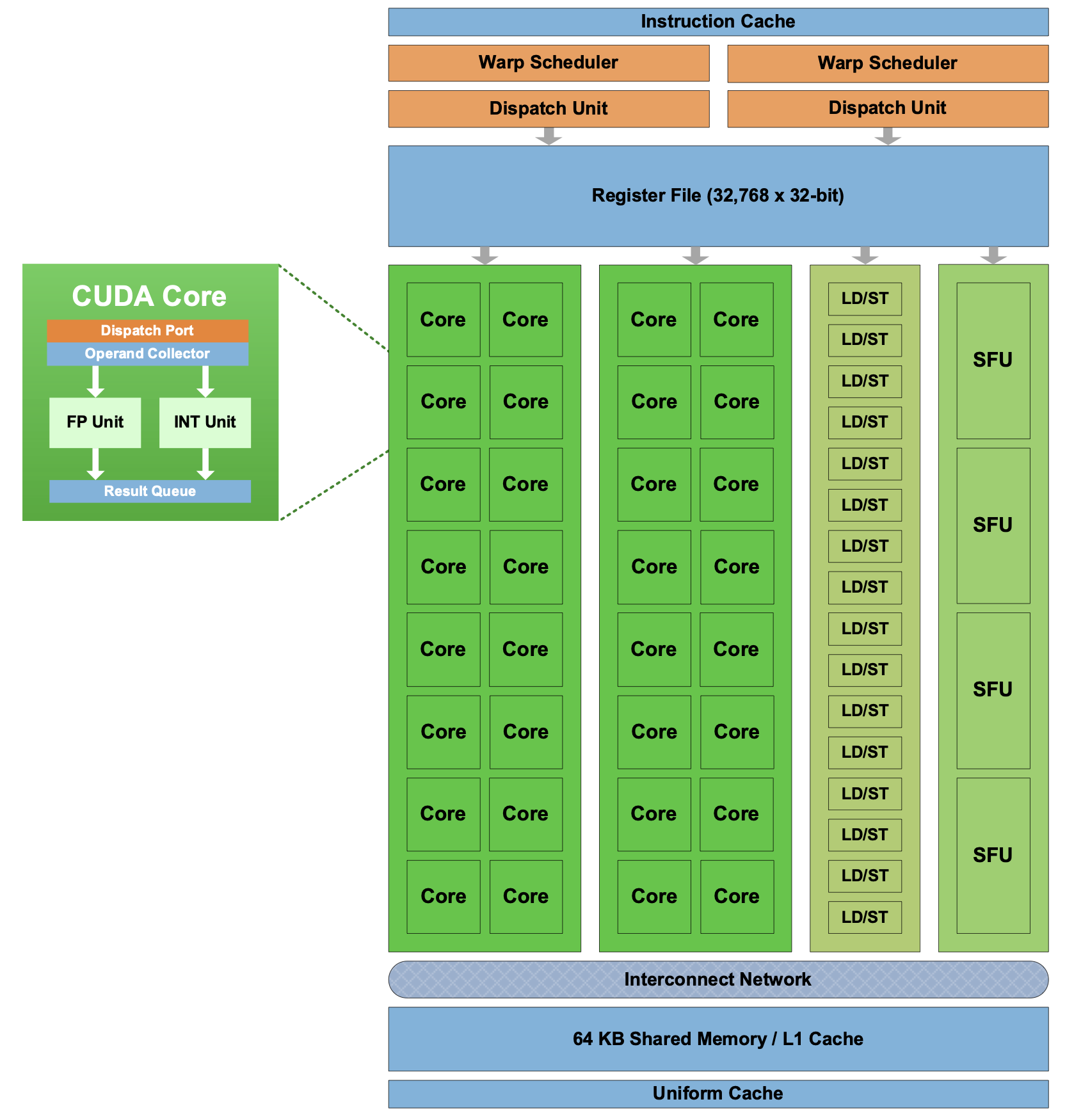

Fermi features up to 512 accelerator cores, called CUDA cores. Each CUDA core comprises an integer ALU and a floating-point unit (FPU) and executes one integer or floating-point instruction per clock cycle for a thread. Fermi has 16 streaming multiprocessors (SMs), each with 32 CUDA cores. Each SM has 16 load/store units that calculate source and destination addresses for 16 threads (a half-warp) per clock cycle. In addition, special function units (SFUs) execute transcendental instructions such as sine, cosine, reciprocal and square root. The figure below shows the Fermi SM (Source: NVIDIA).

Each Fermi SM features two warp schedulers and two instruction dispatch units. When a thread block is assigned to an SM, all threads in the thread block are divided into warps. The two warp schedulers select two warps and issue one instruction from each warp to a group of 16 CUDA cores, 16 load/store units, or four special function units. The Fermi architecture can handle 48 warps per SM simultaneously, for a total of 1536 threads resident on a single SM.
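A warp has 32 threads, while each execution group of the Fermi SM has only 16 (or 4) units. One warp instruction therefore keeps a group busy for several cycles. The sketch below expresses this, together with the 48-warp limit, as simple arithmetic. It is a throughput model that assumes every unit processes one thread per cycle, and the function names are hypothetical.

```cpp
#include <cassert>

constexpr int kWarpSize = 32;

// Cycles an execution group with `lanes` units needs for one warp instruction,
// assuming each unit handles one thread per cycle (simplified throughput model).
int cycles_per_warp_instruction(int lanes) {
    assert(lanes > 0 && kWarpSize % lanes == 0);
    return kWarpSize / lanes;
}

// Maximum number of resident threads on an SM, given its warp limit.
int max_threads_per_sm(int max_warps_per_sm) {
    assert(max_warps_per_sm > 0);
    return max_warps_per_sm * kWarpSize;
}
```

With 16 CUDA cores or 16 load/store units, a warp instruction takes 2 cycles. With 4 SFUs it takes 8 cycles. 48 warps of 32 threads give the 1536 resident threads per SM mentioned above.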

Within the SM, the 32 cores are divided into two execution blocks of 16 cores each. In addition, there are two other execution blocks: a block of 16 load/store units and a block of four SFUs. Hence, there are four execution blocks per SM. Fermi's dual warp scheduler selects two warps and issues one instruction from each warp to the execution blocks. Because warps execute independently, Fermi's scheduler does not need to check for dependencies within the instruction stream.

Most instructions can be dual issued: two integer instructions, two floating-point instructions, or a mix of integer, floating-point, load, store, and SFU instructions can be issued concurrently. In each cycle, a total of 32 thread instructions can be dispatched from one or two warps to any two of the four execution blocks within a Fermi SM. It takes two cycles for the 32 instructions of a warp to execute on the cores or load/store units. A warp of 32 special-function instructions is issued in a single cycle but takes eight cycles to complete on the four SFUs. The figure below shows how instructions are issued to the execution blocks (Source: NVIDIA).

Kepler microarchitecture

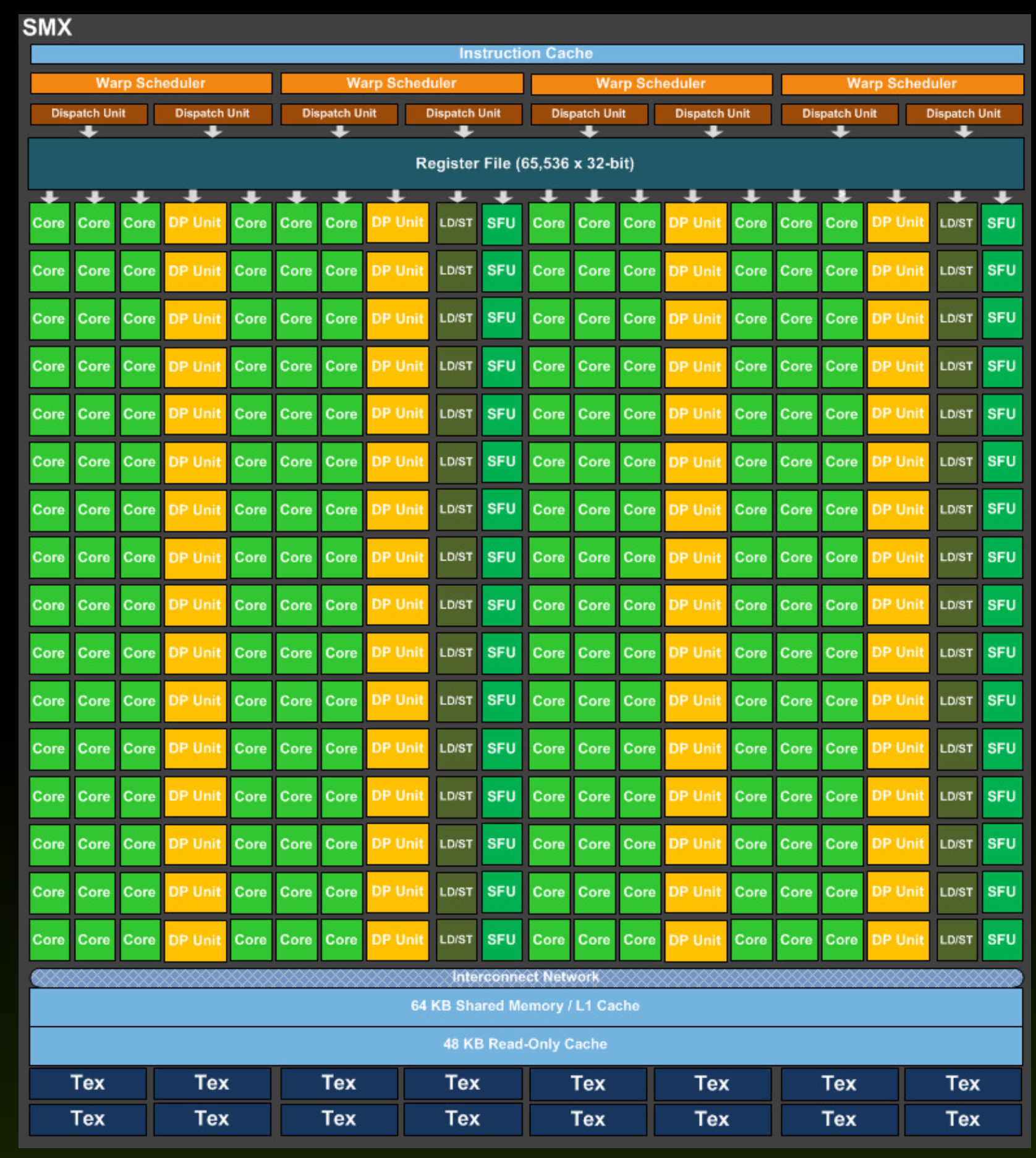

The full Kepler GK110 GPU contains 15 streaming multiprocessors (SMs). Each Kepler SM (presented in the figure below) consists of 192 single-precision CUDA cores, 64 double-precision units, 32 special function units (SFUs), and 32 load/store units.

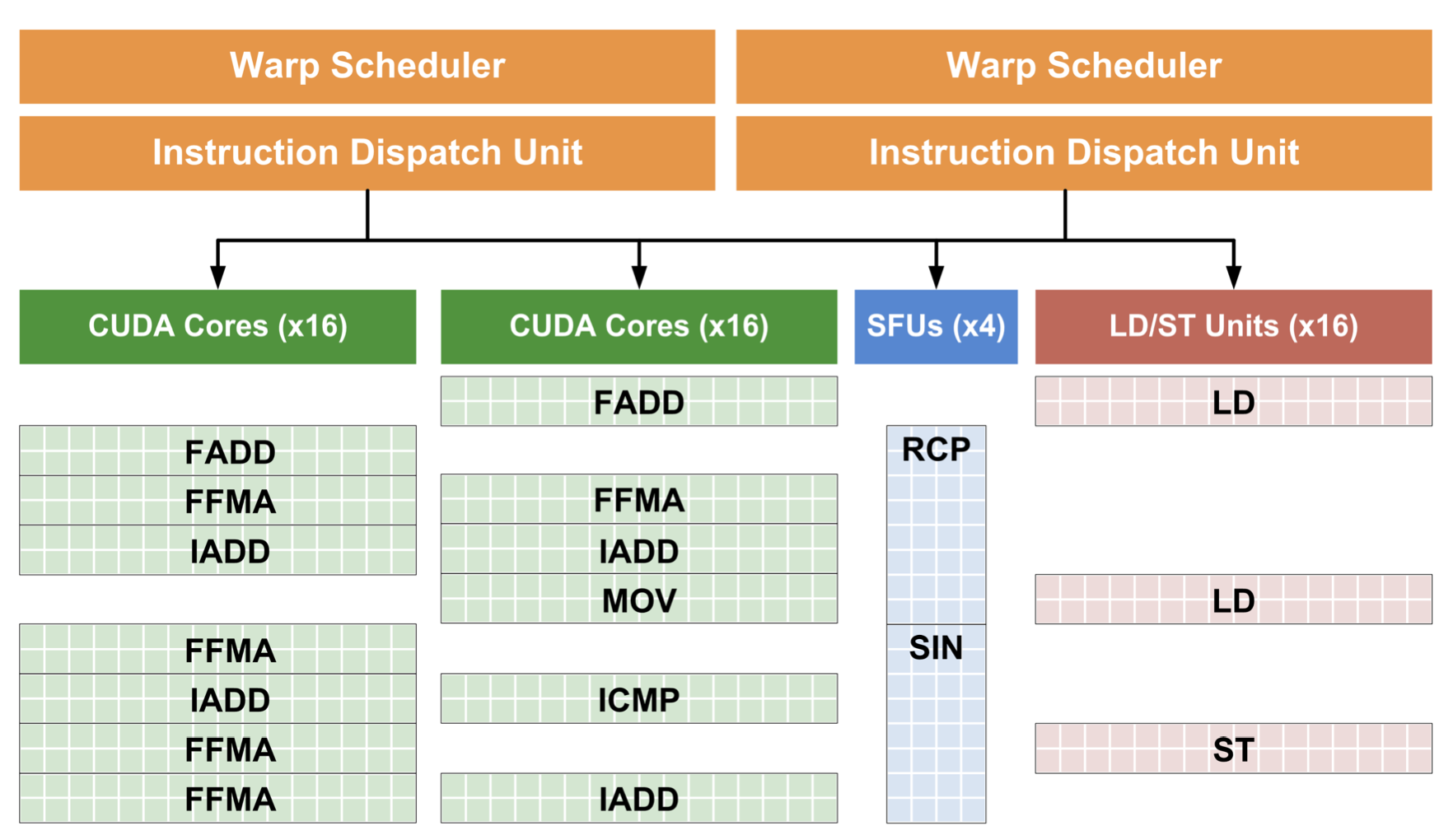

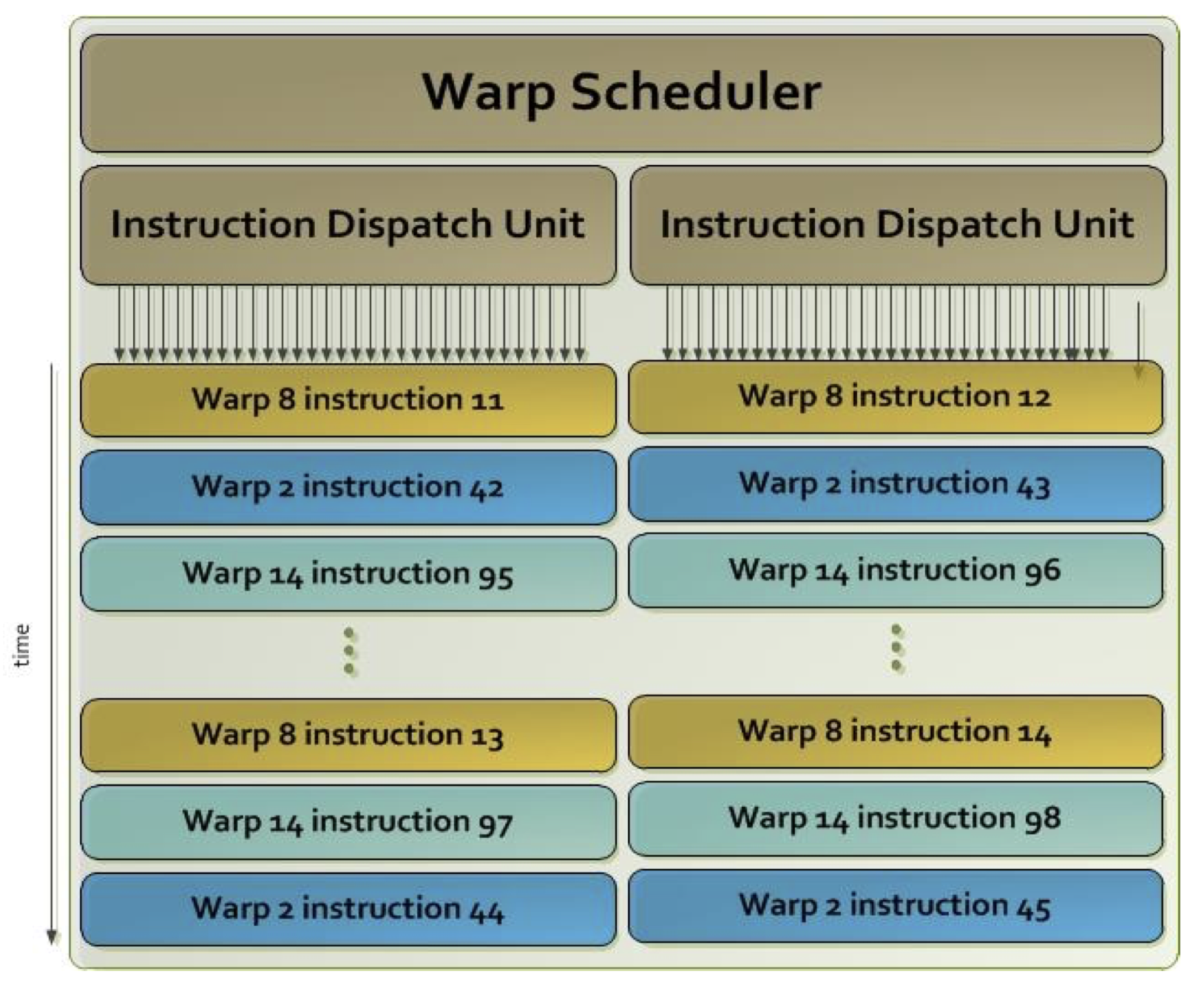

Furthermore, each Kepler SM includes four warp schedulers and eight instruction dispatchers, enabling four warps to be issued and executed concurrently on a single SM. The Kepler architecture can schedule 64 warps per SM for a total of 2048 threads in a single SM at a time. In addition, the Kepler architecture increases the register file size to 64K, compared to 32K on Fermi. The following image presents the warp scheduling in the Kepler SM:

From the above image, we can see that two independent instructions from the same warp are issued simultaneously. The order in which warps execute is not fixed. It usually depends on whether their operands are ready and whether the required processing units are available.
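The larger register file and the higher thread limit interact. If all 2048 threads are to be resident on a Kepler SM, each thread can use at most 65536 / 2048 = 32 registers. The sketch below computes this budget and the upper bound on resident threads for a whole GPU. It ignores the allocation granularity that real hardware applies to registers, and the function names are hypothetical.

```cpp
#include <cassert>

// Largest number of 32-bit registers per thread that still lets
// `resident_threads` threads fit in the SM's register file
// (integer division; real hardware also rounds allocations up).
int registers_per_thread(int register_file_size, int resident_threads) {
    assert(register_file_size > 0 && resident_threads > 0);
    return register_file_size / resident_threads;
}

// Upper bound on threads resident on the whole GPU at the same time.
long long max_resident_threads(int num_sms, int max_threads_per_sm) {
    assert(num_sms > 0 && max_threads_per_sm > 0);
    return static_cast<long long>(num_sms) * max_threads_per_sm;
}
```

For Kepler, this gives 32 registers per thread at full residency and at most 15 × 2048 = 30720 resident threads on a 15-SM GPU. For a Fermi SM (32K registers, 1536 threads), the budget is 21 registers per thread.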

The following table summarizes the key microarchitectural capabilities of the Kepler and Ampere microarchitectures:

| GPU feature | Kepler (CC 3.5) | Ampere (CC 8.0) |
|---|---|---|
| Threads / warp | 32 | 32 |
| Max warps / SM | 64 | 64 |
| Max threads / SM | 2048 | 2048 |
| Max thread block size | 1024 | 1024 |
| Max thread blocks / SM | 16 | 32 |
| Max 32-bit registers / SM | 65536 | 65536 |
| Max registers / thread | 255 | 255 |
| Shared memory size / SM | 48 kB | 164 kB |

Hardware resources limit the maximum number of thread blocks per SM: each SM has a limited number of registers and a limited amount of shared memory. For example, no more than 16 thread blocks can run simultaneously on a single SM with the Kepler microarchitecture. When programming, we usually group threads into thread blocks that are smaller than the maximum block size for a given microarchitecture. For example, if the maximum number of threads per block is 1024, we typically group only 128 or 256 threads into a thread block. In this way, we can have more thread blocks on one SM. For instance, with 256 threads per block, eight blocks fit on a Kepler SM, giving 2048 threads per SM. We usually want to keep as many active blocks and warps as possible, because this determines occupancy. Occupancy is the ratio of active warps per SM to the maximum number of warps an SM supports. With high occupancy, we can hide latency when executing instructions. Recall that executing other warps while one warp is stalled is the GPU's primary way to hide latency and keep the hardware busy.
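The occupancy reasoning above can be sketched as a small calculator. The C++ code below uses a simplified model in which the number of resident blocks per SM is the minimum of four limits: the block limit, the warp limit, the register file and shared memory. It uses the per-SM values from the Kepler and Ampere table. Real GPUs also round register and shared-memory allocations up to fixed granularities, which the sketch ignores, and it assumes a valid block size (at most 1024 threads). The struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

constexpr int kWarpSize = 32;

// Per-SM limits, e.g. taken from the Kepler/Ampere table.
struct SmLimits {
    int max_blocks;        // max thread blocks / SM
    int max_warps;         // max warps / SM
    int registers;         // 32-bit registers / SM
    int shared_mem_bytes;  // shared memory / SM, in bytes
};

// Resident blocks = whichever per-SM resource runs out first (simplified model).
int resident_blocks(const SmLimits& sm, int threads_per_block,
                    int regs_per_thread, int smem_per_block) {
    assert(threads_per_block > 0 && regs_per_thread > 0 && smem_per_block >= 0);
    const int warps_per_block = (threads_per_block + kWarpSize - 1) / kWarpSize;
    int blocks = std::min(sm.max_blocks, sm.max_warps / warps_per_block);  // block and warp limits
    blocks = std::min(blocks, sm.registers /
                                  (regs_per_thread * warps_per_block * kWarpSize));  // register limit
    if (smem_per_block > 0) {
        blocks = std::min(blocks, sm.shared_mem_bytes / smem_per_block);  // shared-memory limit
    }
    return blocks;
}

// Occupancy = active warps per SM / maximum warps per SM.
double occupancy(const SmLimits& sm, int threads_per_block,
                 int regs_per_thread, int smem_per_block) {
    const int warps_per_block = (threads_per_block + kWarpSize - 1) / kWarpSize;
    const int active_warps =
        resident_blocks(sm, threads_per_block, regs_per_thread, smem_per_block) * warps_per_block;
    return static_cast<double>(active_warps) / sm.max_warps;
}
```

With the Kepler limits, 256-thread blocks using 32 registers per thread reach full occupancy (8 blocks). However, 64-thread blocks stop at 50% because of the 16-block limit. With the Ampere limits (32 blocks per SM), the same 64-thread configuration reaches 100%. For a real kernel, the CUDA runtime function `cudaOccupancyMaxActiveBlocksPerMultiprocessor` computes the number of resident blocks from the compiled kernel's actual resource usage.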

Volta microarchitecture

Let's look at the structure of the NVIDIA GV100 GPU (used in the Quadro GV100 and the Tesla V100) shown in the figure below. It contains 6 GPU Processing Clusters (GPCs), each composed of 14 streaming multiprocessors (SMs) organized in pairs called Texture Processing Clusters (TPCs). Altogether, the full GV100 GPU consists of 84 SMs. The Tesla V100 accelerator used in supercomputers has 80 of them enabled.

The figure below shows the structure of the Volta SM. It contains a variety of streaming processors (SPs) or cores: 64 integer cores (INT), 64 single-precision floating-point cores (FP32), 32 double-precision floating-point cores (FP64), 8 tensor cores, and 4 special function units (SFU).
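The GPC/TPC/SM hierarchy and the per-SM unit counts multiply out to the chip-level figures. The sketch below uses hypothetical names. It computes the SM count from 6 GPCs, 7 TPCs per GPC and 2 SMs per TPC. It also computes the GPU-wide unit counts for a given number of enabled SMs.

```cpp
#include <cassert>

// Execution units per Volta SM, as listed in the text.
struct VoltaUnits {
    int int32_cores;
    int fp32_cores;
    int fp64_cores;
    int tensor_cores;
};

constexpr VoltaUnits kPerSm{64, 64, 32, 8};

// SM count from the hierarchy: GPCs x TPCs per GPC x SMs per TPC.
int sm_count(int gpcs, int tpcs_per_gpc, int sms_per_tpc) {
    assert(gpcs > 0 && tpcs_per_gpc > 0 && sms_per_tpc > 0);
    return gpcs * tpcs_per_gpc * sms_per_tpc;
}

// GPU-wide unit counts when `enabled_sms` SMs are active.
VoltaUnits gpu_totals(int enabled_sms) {
    assert(enabled_sms > 0);
    return {kPerSm.int32_cores * enabled_sms, kPerSm.fp32_cores * enabled_sms,
            kPerSm.fp64_cores * enabled_sms, kPerSm.tensor_cores * enabled_sms};
}
```

`sm_count(6, 7, 2)` gives the 84 SMs of the full GV100. With the 80 SMs enabled on the Tesla V100, `gpu_totals(80)` gives 5120 FP32 cores, 2560 FP64 cores and 640 tensor cores.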

Kernel execution

A key component of the CUDA programming model is the kernel — the code that runs on the GPU device. In the kernel, we must explicitly write what each thread does.

When a kernel runs on the GPU, the global thread scheduler, labelled GigaThread Engine in the image above, schedules the thread blocks onto the streaming multiprocessors. Each streaming multiprocessor then internally schedules the threads onto its streaming processors (SPs), or cores.

The number of threads that the GPU can schedule and execute simultaneously is limited, and so is the number of threads that each SM can schedule and execute. The next section gives a more detailed description of kernel execution, including thread scheduling and execution limits.

Further readings:

- NVIDIA’s Next Generation CUDA Compute Architecture: Fermi

- NVIDIA’s Fermi: The First Complete GPU Computing Architecture

- NVIDIA’s Next Generation CUDA Compute Architecture: Kepler GK110/210

- NVIDIA Tesla V100 GPU Architecture

- NVIDIA Ampere GA102 GPU Architecture

- NVIDIA A100 Tensor Core GPU Architecture

- Inside the NVIDIA Ampere Architecture

© Patricio Bulić, University of Ljubljana, Faculty of Computer and Information Science. The material is published under license CC BY-NC-SA 4.0.