Key Architectural Features Enabling ILP

Exploiting instruction-level parallelism (ILP) relies on several hardware features working together, each designed to address a specific obstacle to executing instructions in parallel. These features have evolved over decades of processor design, each building on earlier innovations while introducing new capabilities.

Pipelining

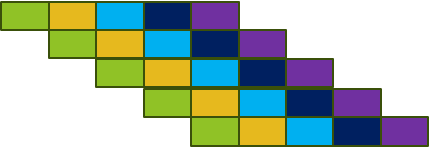

Pipelining is at the heart of modern processor architecture, a fundamental technique that transforms instruction execution from a sequential process into something more akin to an assembly line. Just as a car factory becomes more efficient by having different stations working on different vehicles simultaneously, pipelining allows a processor to work on multiple instructions at different stages of completion.

The classic RISC pipeline consists of five stages: fetch, decode, execute, memory access, and write-back. Each stage performs a specific part of instruction processing. During the fetch stage, the processor retrieves the instruction from memory. The decode stage interprets the instruction, identifying what operation needs to be performed and what resources it requires. The execute stage performs the actual computation, while the memory access stage handles any necessary data transfers with memory. Finally, the write-back stage stores the results in the appropriate registers.

Consider how this works in practice. While one instruction is being fetched, the previous instruction can be in the decode stage, the one before that in the execute stage, and so on. This overlap allows the processor to approach a throughput of one completed instruction per clock cycle, even though each individual instruction still takes several cycles to pass from fetch to write-back. The gain is most visible on long instruction sequences, such as loop bodies or straight-line code, where the cost of initially filling the pipeline is amortised over many instructions.
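The overlap described above can be made concrete with a small timing model. The following is a minimal sketch, assuming an idealised five-stage pipeline in which one instruction enters per cycle and nothing ever stalls; the function names `pipeline_schedule` and `total_cycles` are illustrative and not taken from any real tool.

```python
# Stage names follow the classic five-stage RISC pipeline from the text.
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def pipeline_schedule(num_instructions, stages=STAGES):
    """Return, per instruction, the 1-based cycle in which it occupies each stage.

    Assumption: one instruction enters the pipeline per cycle and no stalls occur.
    """
    return [
        {stage: i + s + 1 for s, stage in enumerate(stages)}
        for i in range(num_instructions)
    ]


def total_cycles(num_instructions, num_stages=len(STAGES), pipelined=True):
    """Cycles needed to finish all instructions, with or without overlap."""
    if num_instructions == 0:
        return 0
    if pipelined:
        # Fill the pipeline once, then one instruction completes per cycle.
        return num_stages + num_instructions - 1
    # Without overlap, every instruction occupies the processor for all stages.
    return num_stages * num_instructions


if __name__ == "__main__":
    for i, row in enumerate(pipeline_schedule(4)):
        print(f"I{i}: " + " ".join(f"{stage}@{cycle}" for stage, cycle in row.items()))
    print(total_cycles(100, pipelined=False), "cycles without pipelining")
    print(total_cycles(100), "cycles with pipelining")
```

Under these assumptions, 100 instructions need 500 cycles without overlap but only 104 with it, which is why throughput approaches one instruction per cycle as the sequence grows. Real pipelines fall short of this bound whenever hazards force stalls.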

Superscalar Execution

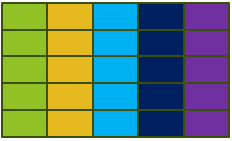

Superscalar execution takes parallelism a step further by implementing multiple execution units within the processor. This architecture allows the processor to issue and execute multiple instructions simultaneously, significantly increasing potential throughput. Think of it as having multiple assembly lines running in parallel, each capable of handling different types of work.

A modern superscalar processor might include several arithmetic logic units (ALUs) for integer operations, multiple floating-point units (FPUs) for floating-point arithmetic, and dedicated units for memory operations. This diversity of execution units allows different types of instructions to proceed simultaneously. For example, while one unit performs an integer addition, another might perform a floating-point multiplication, and a third could load data from memory.

However, superscalar execution introduces new complexities in instruction scheduling and resource allocation. The processor must determine which instructions can safely execute in parallel, considering both data dependencies and the availability of appropriate execution units. This requires sophisticated instruction scheduling logic to examine multiple instructions simultaneously and make rapid decisions about their execution ordering.
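The two checks mentioned above (data dependencies and unit availability) can be sketched as a greedy grouping pass. This is a simplified illustration, not how any particular processor implements issue logic: the `UNITS` table, the instruction tuple format, and the single-cycle, in-order issue model are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical machine: number of execution units of each kind.
UNITS = {"alu": 2, "fpu": 1, "mem": 1}

# Each instruction is (unit_kind, destination, sources).
EXAMPLE_PROGRAM = [
    ("alu", "r1", ("r2", "r3")),   # integer add
    ("fpu", "f1", ("f2", "f3")),   # floating-point multiply
    ("mem", "r4", ("r5",)),        # load
    ("alu", "r6", ("r1", "r4")),   # needs r1 and r4 from the group above
    ("alu", "r7", ("r2", "r2")),   # independent integer operation
]


def issue_groups(program, units=UNITS):
    """Greedily pack instructions, in program order, into per-cycle issue groups.

    An instruction joins the current group only if a unit of its kind is still
    free and it neither reads nor overwrites a register written earlier in the
    same group. Otherwise the group is closed and a new one is started.
    """
    groups, current, used, written = [], [], Counter(), set()
    for kind, dest, sources in program:
        conflict = written & (set(sources) | {dest})
        if current and (used[kind] >= units[kind] or conflict):
            groups.append(current)
            current, used, written = [], Counter(), set()
        current.append((kind, dest, sources))
        used[kind] += 1
        written.add(dest)
    if current:
        groups.append(current)
    return groups


if __name__ == "__main__":
    for cycle, group in enumerate(issue_groups(EXAMPLE_PROGRAM)):
        print(cycle, [f"{kind}->{dest}" for kind, dest, _ in group])
```

With the example program, the add, the floating-point multiply, and the load share the first group because they use different units and different registers; the fourth instruction opens a new group because it reads results produced in the first.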

Out-of-Order Execution

Out-of-order execution represents one of the most sophisticated features in modern processor design, fundamentally changing how we think about program execution. This technique allows the processor to execute instructions in a different order than they appear in the program, as long as doing so does not violate data dependencies.

To understand the power of out-of-order execution, consider a sequence of instructions where several independent calculations follow a long-latency operation (like a memory load). Rather than waiting for the load to complete, the processor can execute the subsequent calculations immediately if they do not depend on the loaded data. This ability to work around stalls by finding useful work is crucial for maintaining high performance in modern processors.

The implementation of out-of-order execution requires several sophisticated mechanisms working in concert:

- Instruction Window: Keeps track of instructions in flight, maintaining information about their dependencies and execution status.

- Reservation Stations: Hold instructions waiting for their operands to become available.

- Commit Unit: Retires instructions in their original program order, so that results become architecturally visible in sequence and exceptions remain precise.
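The benefit of working around a long-latency load can be illustrated with a toy scheduler. This sketch makes several simplifying assumptions that are not from the text: at most one instruction starts per cycle, every destination register is written only once (as if renaming had already been applied), and in-order commit is not modelled. The names `PROGRAM` and `last_result_cycle` are hypothetical.

```python
# Each instruction is (name, destination, sources, latency_in_cycles).
PROGRAM = [
    ("load", "r1", ("r0",), 10),      # long-latency memory load
    ("use", "r2", ("r1",), 1),        # depends on the loaded value
    ("add", "r3", ("r4", "r5"), 1),   # independent of the load
    ("mul", "r6", ("r3", "r4"), 3),   # depends only on the add
]


def last_result_cycle(program, out_of_order):
    """Return the cycle in which the final result becomes available.

    In-order issue may only start the oldest waiting instruction.
    Out-of-order issue may start the oldest waiting instruction whose
    operands are ready, skipping over stalled ones.
    """
    ready_at = {}               # register -> cycle at which its value is available
    waiting = list(program)     # plays the role of the instruction window
    cycle, finish = 0, 0
    while waiting:
        window = waiting if out_of_order else waiting[:1]
        pending = set()         # destinations of older instructions not yet started
        for instr in window:
            _name, dest, sources, latency = instr
            operands_ready = all(
                src not in pending and ready_at.get(src, 0) <= cycle
                for src in sources
            )
            if operands_ready:
                ready_at[dest] = cycle + latency
                finish = max(finish, ready_at[dest])
                waiting.remove(instr)
                break           # at most one instruction starts per cycle
            pending.add(dest)
        cycle += 1
    return finish


if __name__ == "__main__":
    print("in-order:", last_result_cycle(PROGRAM, out_of_order=False))
    print("out-of-order:", last_result_cycle(PROGRAM, out_of_order=True))
```

In this model the in-order run finishes at cycle 15, because the add and multiply queue up behind the stalled `use`, while the out-of-order run finishes at cycle 11 by executing them in the shadow of the load.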

Register Renaming

Register renaming represents a crucial innovation that helps enable both out-of-order execution and increased instruction-level parallelism. This technique addresses a fundamental limitation in traditional processor architectures: the artificial constraints created by having a limited number of architectural registers.

In early processor designs, instructions that reused the same register name would be forced to execute in order, even if there was no true data dependency between them. These false dependencies (write-after-write and write-after-read hazards) arise only from the reuse of a name, not from any flow of data. Register renaming eliminates them by maintaining a larger pool of physical registers than architectural registers and dynamically mapping between the two.

Consider a simple example:

```
ADD R1, R2, R3 // R1 = R2 + R3
MUL R1, R4, R5 // R1 = R4 * R5
```

Without register renaming, the MUL instruction could not complete ahead of the ADD because both write to R1: this is a write-after-write hazard, and the final value of R1 must be the product, not the sum. With register renaming, the processor assigns a different physical register to each instruction's result, allowing the two instructions to execute in parallel as soon as their input operands are ready.
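The mapping step can be sketched in a few lines. This is a minimal illustration, assuming an unbounded supply of physical registers named `P0`, `P1`, and so on; real hardware uses a finite pool with a free list, which is omitted here. The function name `rename` and the tuple format are hypothetical.

```python
from itertools import count


def rename(program, arch_regs=("R1", "R2", "R3", "R4", "R5")):
    """Rewrite (op, dest, src1, src2) tuples to use fresh physical registers.

    Sources are read through the current mapping. Every destination receives a
    newly allocated physical register, so two writes to the same architectural
    register no longer collide.
    """
    fresh = count()
    # Initial state: each architectural register maps to its own physical register.
    mapping = {reg: f"P{next(fresh)}" for reg in arch_regs}
    renamed = []
    for op, dest, src1, src2 in program:
        p1, p2 = mapping[src1], mapping[src2]   # look up the sources first
        mapping[dest] = f"P{next(fresh)}"       # then allocate a new destination
        renamed.append((op, mapping[dest], p1, p2))
    return renamed


if __name__ == "__main__":
    example = [("ADD", "R1", "R2", "R3"), ("MUL", "R1", "R4", "R5")]
    for instr in rename(example):
        print(*instr)
```

Applied to the ADD/MUL example, the two writes to R1 land in distinct physical registers (P5 and P6 in this sketch), so neither instruction has to wait for the other. Looking up sources before allocating the destination matters for instructions such as `ADD R1, R1, R2`, which must read the old mapping of R1.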

Branch Prediction

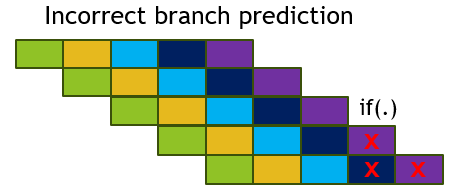

Branch prediction is a key feature that addresses control dependencies, which can otherwise stall instruction pipelines and reduce overall performance. When a processor encounters a conditional branch instruction, such as one generated for an if-else statement, it must determine which instruction to fetch next. If the processor waits for the condition to be resolved before proceeding, valuable clock cycles are wasted.

To mitigate this, modern processors employ branch prediction techniques to guess the likely outcome of the branch and continue execution along the predicted path. Two common branch prediction strategies include:

- Static Prediction: Uses fixed rules, such as always predicting that backward branches (often associated with loops) will be taken.

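- Dynamic Prediction: Relies on hardware mechanisms to learn and adapt based on past branch outcomes. One popular technique is the two-level adaptive predictor, which records the recent history of branch outcomes and uses that history to select an entry in a pattern history table, allowing it to recognise repeating patterns.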

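Highly accurate branch prediction reduces the frequency of pipeline stalls and allows instructions to be fetched and executed speculatively. However, each misprediction forces the processor to discard the instructions executed along the wrong path and resume fetching from the correct one. The recovery mechanisms that make this safe are part of speculative execution, described next.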
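The dynamic strategy can be illustrated with a small model of a two-level predictor. This sketch is a deliberately reduced variant, assuming a single global history register and 2-bit saturating counters, and ignoring the branch address entirely; the class name, the counter initialisation, and the history length are choices made for the example rather than details from the text.

```python
class TwoLevelPredictor:
    """Global-history two-level predictor with 2-bit saturating counters."""

    def __init__(self, history_bits=2):
        self.mask = (1 << history_bits) - 1
        self.history = 0                            # recent outcomes, newest in bit 0
        # One counter per history pattern: 0-1 predict not taken, 2-3 predict taken.
        self.counters = [1] * (1 << history_bits)

    def predict(self):
        return self.counters[self.history] >= 2

    def update(self, taken):
        # First level selects the counter; second level trains it.
        c = self.counters[self.history]
        self.counters[self.history] = min(3, c + 1) if taken else max(0, c - 1)
        # Shift the actual outcome into the history register.
        self.history = ((self.history << 1) | int(taken)) & self.mask


def accuracy(predictor, outcomes):
    """Fraction of outcomes predicted correctly, training after each branch."""
    correct = 0
    for taken in outcomes:
        correct += predictor.predict() == taken
        predictor.update(taken)
    return correct / len(outcomes)


if __name__ == "__main__":
    alternating = [True, False] * 50    # taken, not taken, taken, ...
    print(accuracy(TwoLevelPredictor(), alternating))
```

An alternating pattern defeats a rule such as "always predict taken" half of the time, whereas this predictor mispredicts only during warm-up, because each history pattern is followed consistently by the same outcome.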

Speculative Execution

Speculative execution works in tandem with branch prediction to further exploit ILP by executing instructions along the predicted path before the outcome of the branch is known. This technique allows processors to "speculate" and execute instructions that may or may not be needed, hiding latency and improving throughput.

If the branch prediction is correct, the results of speculative execution are committed to the processor's state, effectively gaining performance benefits by having completed the work ahead of time. If the prediction is incorrect, the processor discards the speculative results and restores the correct program state using precise rollback mechanisms.

Speculative execution requires careful coordination to ensure incorrect predictions do not corrupt the processor's state. Hardware mechanisms such as reorder buffers and checkpointing are employed to manage this process efficiently.
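The commit-or-discard behaviour can be sketched with a buffer of pending writes, loosely in the spirit of a reorder buffer. This is a toy model under stated assumptions: only register writes are speculative, there is a single unresolved branch at a time, and the class and method names are hypothetical.

```python
class SpeculativeCore:
    """Toy model: committed register state plus a buffer of speculative writes."""

    def __init__(self, registers):
        self.registers = dict(registers)   # committed (architectural) state
        self.pending = []                  # speculative writes, in program order

    def execute_speculatively(self, dest, value):
        # The result is buffered and not yet visible in the committed state.
        self.pending.append((dest, value))

    def read(self, reg):
        # Speculative instructions see the newest pending value, if there is one.
        for dest, value in reversed(self.pending):
            if dest == reg:
                return value
        return self.registers[reg]

    def resolve_branch(self, prediction_correct):
        if prediction_correct:
            for dest, value in self.pending:   # commit in program order
                self.registers[dest] = value
        # On a misprediction the buffered results are simply dropped.
        self.pending.clear()


if __name__ == "__main__":
    core = SpeculativeCore({"r1": 1, "r2": 2})
    core.execute_speculatively("r1", core.read("r2") * 5)
    core.resolve_branch(prediction_correct=False)
    print(core.registers)   # committed state is unchanged after the rollback
```

Because speculative results never touch the committed state until the branch resolves, recovery from a misprediction amounts to discarding the buffer, which is the property that keeps incorrect predictions from corrupting the processor's state.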

The combination of branch prediction and speculative execution has significantly contributed to the performance gains in modern processors, particularly in applications with frequent branches and complex control flows.