FLYNN'S TAXONOMY: A GUIDE TO COMPUTER ARCHITECTURES

Author: Luka Leskovec

Introduction

Scientists and engineers typically have an insatiable need for computational power. From scientific simulations to artificial intelligence, the demand for faster and more efficient computing continues to grow. Historically, this demand has been met by the exponential increase in computing power described by Moore’s Law:

The number of transistors in an integrated circuit doubles approximately every two years.

However, as physical limits to transistor scaling have begun to emerge (i.e., the gates cannot keep shrinking indefinitely), maximizing computational efficiency has become increasingly dependent on how well software utilizes hardware. Understanding the architecture of processors is crucial for optimizing performance and ensuring that software runs efficiently.

Let’s begin with the basic elements of a computer:

- CPU (Central Processing Unit) – the "brain" of the computer, responsible for executing instructions.

- RAM (Random Access Memory) – very fast, similar to human short-term memory.

- Storage – traditionally Hard Disk Drives (HDD), but nowadays Solid State Drives (SSD), still colloquially called "hard drives."

- GPU (Graphics Processing Unit) – a processor designed for rendering images, videos, and animations. Very good at simple instructions, but very poor at complicated instructions.

- Motherboard (MB) – connects all components.

- Power Supply Unit (PSU) – provides power.

- Computer Case – houses the components (desktop tower or laptop).

The central part of every computer is the processor (CPU). It is a crucial component in every computing device because it executes instructions, from moving your mouse pointer around the screen to reading and processing the data stored in your files. Another important component is RAM (Random Access Memory), very fast memory that holds the data you need quick access to; in that sense it is somewhat similar to human short-term memory. Storage holds large amounts of data (such as scientific datasets). It used to be a hard disk drive (HDD) built from spinning disks, but these days it is mostly a solid state drive (SSD), although colloquially we still call these hard drives. Many computers, though not all, also have a graphics card, a component that houses the graphics processing unit (GPU) and often comes with its own memory. The GPU is very good at linear and tensor algebra, which is why it excels at rendering images and at artificial intelligence workloads. All of these components are connected by a motherboard (MB), powered by a power supply unit (PSU), and housed in a computer case: either the tower on or underneath your desk, or a laptop.

Flynn's taxonomy, however, concerns the processor (CPU) and, to some extent, the GPU. We will focus on the CPU and comment on the GPU.

Processor Pipelines

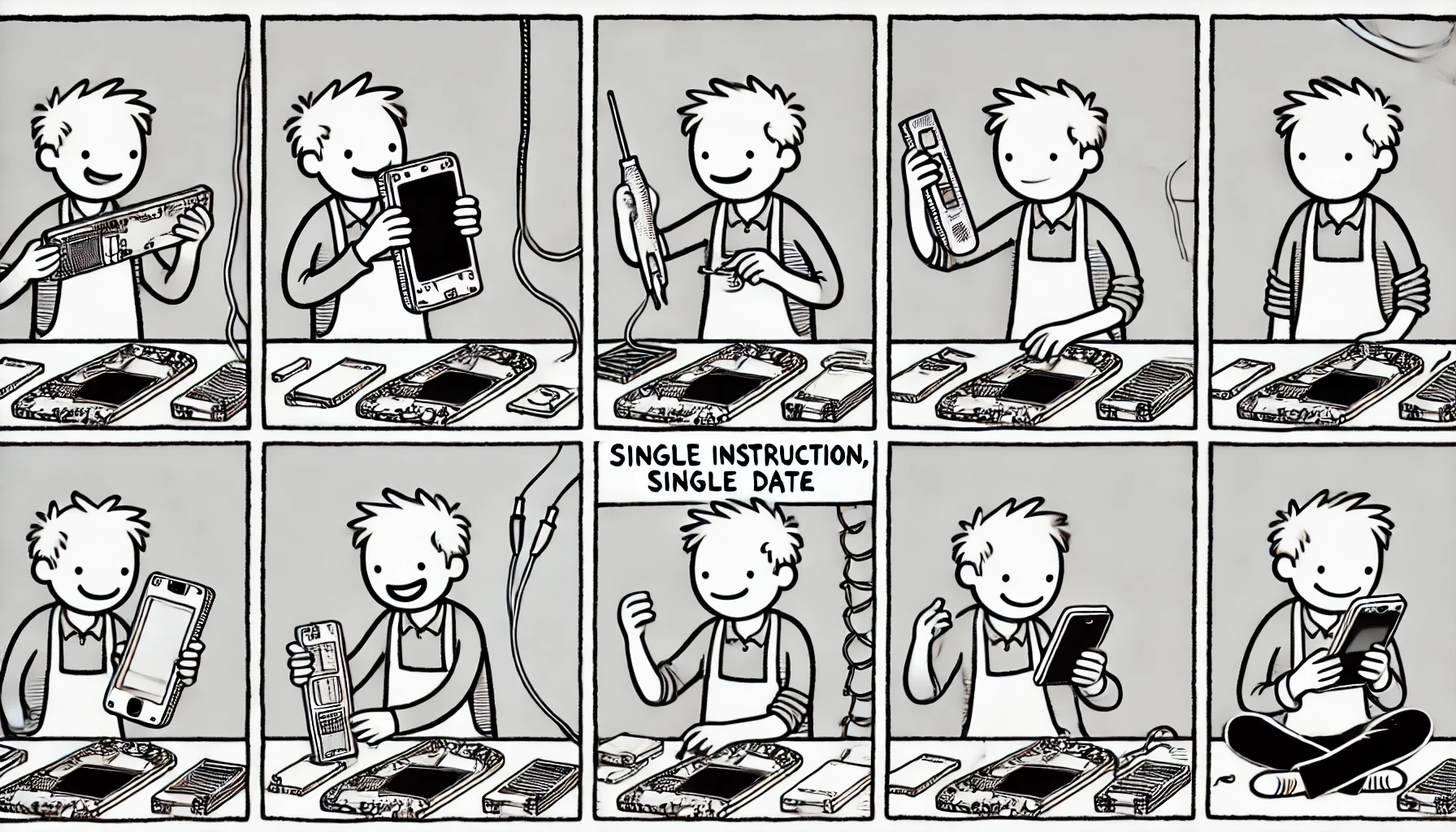

The processor is built around a pipeline, which is easiest to explain with the analogy of an assembly line in a factory. In an assembly line, each worker performs a specific task on the components passing along the line. Similarly, a processor pipeline has several stages, and each stage performs a specific operation on the data according to the instructions.

Example: Simplified Mobile Phone Assembly

A simple example is the assembly of a mobile phone, which involves four stages:

- Stage i: Installing the battery in the case.

- Stage ii: Connecting the battery with the motherboard.

- Stage iii: Connecting the screen to the motherboard.

- Stage iv: Installing the screen in the case.

From the perspective of a worker:

- The worker at stage i picks up the battery and screws it into the case, then places the case on the conveyor belt.

- The worker at stage ii picks up the case from the conveyor belt and connects the battery wires to the motherboard, then places the phone on the conveyor belt.

- The worker at stage iii picks up the phone from the conveyor belt and connects the screen to the motherboard, then places the phone on the conveyor belt.

- The worker at stage iv picks up the phone from the conveyor belt and fastens the screen in the case.
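The assembly line above can be modelled in a few lines of Python. This is only a sketch under simplifying assumptions: every stage is assumed to take exactly one "tick", and the names `STAGES` and `simulate_pipeline` are hypothetical, introduced purely for illustration. It shows which phone each worker is handling at every tick once several phones are sent down the line.

```python
# Hypothetical model of the four-stage phone assembly line.
# Assumption: every stage takes exactly one tick.
STAGES = [
    "install battery",
    "connect battery",
    "connect screen",
    "install screen",
]


def simulate_pipeline(num_phones, stages=STAGES):
    """Return, for every tick, the phone index each stage works on (None = idle)."""
    num_ticks = num_phones + len(stages) - 1
    timeline = []
    for tick in range(num_ticks):
        row = []
        for stage_index in range(len(stages)):
            # Phone k reaches stage s at tick k + s.
            phone = tick - stage_index
            row.append(phone if 0 <= phone < num_phones else None)
        timeline.append(row)
    return timeline


timeline = simulate_pipeline(num_phones=3)
for tick, row in enumerate(timeline):
    print(tick, row)
```

Under these assumptions, three phones leave the line after 6 ticks, whereas a single worker doing all four steps for each phone in turn would need 12.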

Example: Arithmetic Calculation in a Processor

A more involved, but easier to generalize, example is the calculation of an arithmetic expression in a processor. Consider evaluating the function:

y = 3 * (2 - x) / 4

For x = 3, the steps are:

- Stage i: Compute -x, giving -3.

- Stage ii: Add 2, giving -1.

- Stage iii: Multiply by 3, giving -3.

- Stage iv: Divide by 4, yielding y = -0.75.
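The four stages can be written out as a minimal Python sketch in which each stage is a small function and the output of one stage feeds the next. The function names and the `run_pipeline` helper are hypothetical and only mirror the stages listed above; a real processor implements these steps in hardware.

```python
# Each function stands in for one pipeline stage (names are illustrative).
def stage_negate(x):
    return -x          # stage i: compute -x


def stage_add_two(value):
    return value + 2   # stage ii: add 2


def stage_times_three(value):
    return value * 3   # stage iii: multiply by 3


def stage_divide_four(value):
    return value / 4   # stage iv: divide by 4


PIPELINE = [stage_negate, stage_add_two, stage_times_three, stage_divide_four]


def run_pipeline(x):
    """Pass x through every stage in order and record each intermediate value."""
    value = x
    trace = []
    for stage in PIPELINE:
        value = stage(value)
        trace.append(value)
    return trace


trace = run_pipeline(3)
print(trace)  # [-3, -1, -3, -0.75]
```

The last entry of the trace is y, so for x = 3 the pipeline produces y = -0.75.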

Each stage in the pipeline is made up of different components, such as the Arithmetic Logic Unit (ALU), which performs arithmetic operations, and the Control Unit, which manages the flow of data between the stages. Depending on the processor, the pipeline can have a different number of stages, each optimized for specific tasks. In the example above, the stages are performed sequentially, with the output of one stage becoming the input of the next. This is an example of a Single Instruction, Single Data (SISD) pipeline. When we want to compute y for a large set of x values, the SISD pipeline is not efficient, because the values are processed one at a time and computing all of them takes a long time. This is where Flynn's taxonomy comes in.

Flynn's Taxonomy

Introduced in 1966 by Michael J. Flynn, this classification categorizes processor architectures by the number of instruction streams executed at the same time and the number of data streams they operate on.

1. Single Instruction, Single Data (SISD)

- A single instruction is applied to a single piece of data.

- Example: y = 3 * (2 - x) / 4 for x = 3.

- Found in home computers of the 1990s (e.g., Intel 386, 486, and the original Pentium). Later Pentium models added SIMD extensions (MMX, then SSE with the Pentium III).

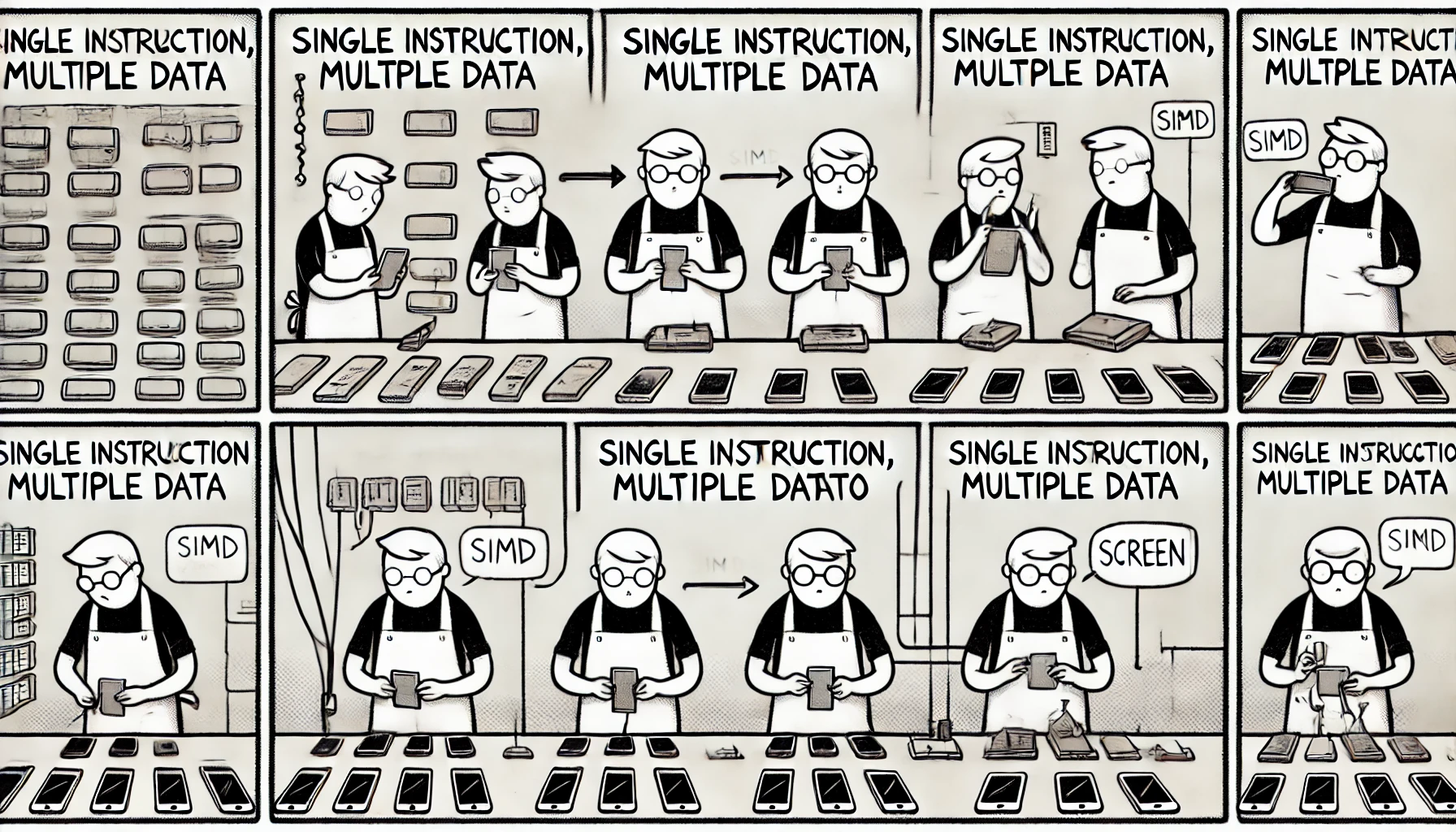

2. Single Instruction, Multiple Data (SIMD)

- A single instruction is applied to multiple data elements simultaneously.

- Example: for x = [1, 2, 3], compute y = 3 * (2 - x) / 4 for all values at once.

- Used in modern CPUs (AMD & Intel) and GPUs (AMD & NVIDIA).

- In the context of the worker and phones, we now have one worker making multiple phones at the same time, but all the phones are the same.
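The difference between the SISD and SIMD styles can be sketched in plain Python. This is a conceptual model only: Python lists are processed element by element by the interpreter, whereas real SIMD hardware applies one instruction to a whole vector register at once. The names `scalar_eval` and `simd_eval` are hypothetical.

```python
def scalar_eval(x):
    """SISD style: each instruction acts on a single value."""
    return 3 * (2 - x) / 4


def simd_eval(lanes):
    """SIMD style (modelled): each step is ONE instruction applied to ALL lanes."""
    negated = [-x for x in lanes]        # one "negate" instruction, every lane
    shifted = [v + 2 for v in negated]   # one "add 2" instruction, every lane
    scaled = [v * 3 for v in shifted]    # one "multiply by 3" instruction
    return [v / 4 for v in scaled]       # one "divide by 4" instruction


xs = [1, 2, 3]
ys_scalar = [scalar_eval(x) for x in xs]  # 3 values x 4 instructions each
ys_simd = simd_eval(xs)                   # 4 instructions in total, 3 lanes wide
print(ys_simd)  # [0.75, 0.0, -0.75]
```

In the scalar version the number of instructions grows with the number of values; in the modelled SIMD version it stays at four, as long as all values fit into the available lanes.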

3. Multiple Instruction, Single Data (MISD)

- Multiple instructions operate on a single data value.

- Example: Compute y using different formulations: y = 0.75 * (2 - x), y = 1.5 - 0.75 * x, and y = 3 * (2 - x) / 4.

- Rarely used; a great example is spaceflight, e.g. the Space Shuttle flight control computers, where radiation might cause errors in the calculation. Fault tolerance is achieved by performing the calculation on the same data two or three times and comparing the results.

- The analogy of the worker and phones is no longer easy to apply at this point, but the idea is clear.
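The redundancy idea can be illustrated with a small Python sketch: the same x is fed to three different formulations, and a majority vote picks the result. This is an assumption-laden illustration, not the voting scheme of any real flight computer; `vote`, `redundant_eval`, and `faulty_unit` are hypothetical names, and the fault is simulated by adding an offset.

```python
import math


def formulation_a(x):
    return 0.75 * (2 - x)


def formulation_b(x):
    return 1.5 - 0.75 * x


def formulation_c(x):
    return 3 * (2 - x) / 4


def vote(results, rel_tol=1e-9, abs_tol=1e-12):
    """Return a value that a strict majority of results agree on."""
    for candidate in results:
        agreeing = sum(
            math.isclose(candidate, other, rel_tol=rel_tol, abs_tol=abs_tol)
            for other in results
        )
        if 2 * agreeing > len(results):
            return candidate
    raise ValueError("no majority among redundant results")


def redundant_eval(x, units=(formulation_a, formulation_b, formulation_c)):
    """Apply several instruction streams to the SAME data value, then vote."""
    return vote([unit(x) for unit in units])


def faulty_unit(x):
    # Simulated fault (e.g. a radiation-induced error): the result is off by 1.
    return formulation_b(x) + 1.0


y = redundant_eval(3)
y_with_fault = redundant_eval(3, units=(formulation_a, faulty_unit, formulation_c))
print(y, y_with_fault)  # -0.75 -0.75
```

With three units, one faulty result is outvoted by the two that agree; if all three disagree, the sketch raises an error rather than guessing.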

4. Multiple Instruction, Multiple Data (MIMD)

- Multiple instructions operate on multiple data elements.

- Common in multi-core processors (e.g., the Ryzen 5 7600 has 6 cores, each with SIMD capability, that can work independently or together).
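A rough software analogue of MIMD is to run different functions on different data at the same time. The sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor`; the two task functions are hypothetical examples. Note the caveat: in standard CPython builds the global interpreter lock means these threads interleave rather than execute Python code truly in parallel, so this shows the programming model, not the hardware speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate_y(xs):
    """Instruction stream 1: evaluate y = 3 * (2 - x) / 4 for each x."""
    return [3 * (2 - x) / 4 for x in xs]


def sum_of_squares(values):
    """Instruction stream 2: a completely different computation."""
    return sum(v * v for v in values)


# Different instructions paired with different data.
tasks = [
    (evaluate_y, [1, 2, 3]),
    (sum_of_squares, [1, 2, 3, 4]),
]

with ThreadPoolExecutor(max_workers=2) as pool:
    futures = [pool.submit(func, data) for func, data in tasks]
    results = [future.result() for future in futures]

print(results)  # [[0.75, 0.0, -0.75], 30]
```

Each worker plays the role of one core: it runs its own instruction stream on its own data, independently of the other.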

GPUs in Flynn's Taxonomy

To round out our overview of Flynn's taxonomy, we will briefly discuss where GPUs fit in this classification. Note that this is not a strict classification, as GPUs exhibit behavior that can be classified under both SIMD and MIMD.

1. SIMD (Single Instruction, Multiple Data) – Core Functionality

NVIDIA GPUs primarily operate under SIMD: they execute the same instruction across multiple data elements in parallel. An NVIDIA GPU consists of many CUDA cores grouped into Streaming Multiprocessors (SMs); each SM functions like a simplified CPU capable of handling many operations per unit time.

2. MIMD (Multiple Instruction, Multiple Data) – Advanced Behavior

Though primarily SIMD, NVIDIA GPUs can exhibit MIMD-like behavior due to SIMT (Single Instruction, Multiple Threads) execution. Threads within a Streaming Multiprocessor (SM) can take different execution paths, enabling MIMD-like operations.
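How threads in one group can take different paths while still sharing a single instruction stream can be modelled in Python. This is a simplified sketch, not NVIDIA's actual scheduling logic: it assumes a group of threads executing in lockstep (a warp, in NVIDIA terminology) handles an if/else by running each branch in a separate pass, with the threads that did not take that branch masked off. The function `simt_branch` is hypothetical.

```python
def simt_branch(values, predicate, then_op, else_op):
    """Model one lockstep thread group executing: if predicate: then_op else: else_op.

    Returns the per-thread results and the number of passes that were needed.
    """
    mask = [predicate(v) for v in values]
    results = list(values)
    passes = 0

    # Pass for the "then" branch: only threads with a true predicate are active.
    if any(mask):
        passes += 1
        results = [then_op(v) if active else r
                   for v, active, r in zip(values, mask, results)]

    # Pass for the "else" branch: only the remaining threads are active.
    if not all(mask):
        passes += 1
        results = [else_op(v) if not active else r
                   for v, active, r in zip(values, mask, results)]

    return results, passes


def is_even(v):
    return v % 2 == 0


def times_ten(v):
    return v * 10


def negate(v):
    return -v


# Divergent case: odd and even threads take different branches.
out, passes = simt_branch([1, 2, 3, 4], is_even, times_ten, negate)
print(out, passes)  # [-1, 20, -3, 40] 2

# Uniform case: every thread takes the same branch, so one pass suffices.
uniform_out, uniform_passes = simt_branch([2, 4], is_even, times_ten, negate)
print(uniform_out, uniform_passes)  # [20, 40] 1
```

In this model, divergence is what makes the behavior MIMD-like, and it comes at a cost: the more branches the threads split across, the more passes are needed.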