Cluster Hardware Architecture

Authors: Davor Sluga, Ratko Pilipović

Image generated with artificial intelligence.

Imagine a restaurant where all employees work together to provide guests with an unforgettable culinary experience. When seated, guests place their orders with the waiters. Waiters collect orders from all guests and forward them to the head chef. The head chef makes key decisions: distributing the preparation of ordered dishes among cooking teams while keeping track of required ingredients. In the kitchen, work is divided by specialization – some chefs prepare main courses, others desserts, and others side dishes. Chefs work simultaneously, each on their part of the order, while the head chef coordinates them to ensure all dishes are completed on time. The pantry ensures ingredients are readily available and enables smooth workflow.

The restaurant analogy above mirrors how a modern supercomputer operates:

- restaurant guests represent users,

- waiters represent login nodes,

- the head chef represents the head node,

- cooking teams represent compute nodes, and

- the pantry represents data nodes.

In the following, we will look in more detail at the structure and individual components of a modern supercomputer, or computer cluster. The term computer cluster reflects how supercomputers are built today: as a collection of subsystems (computers) interconnected through appropriate hardware and software so that, working together in a coordinated and efficient manner, they can tackle humanity's most challenging problems.

Node Types

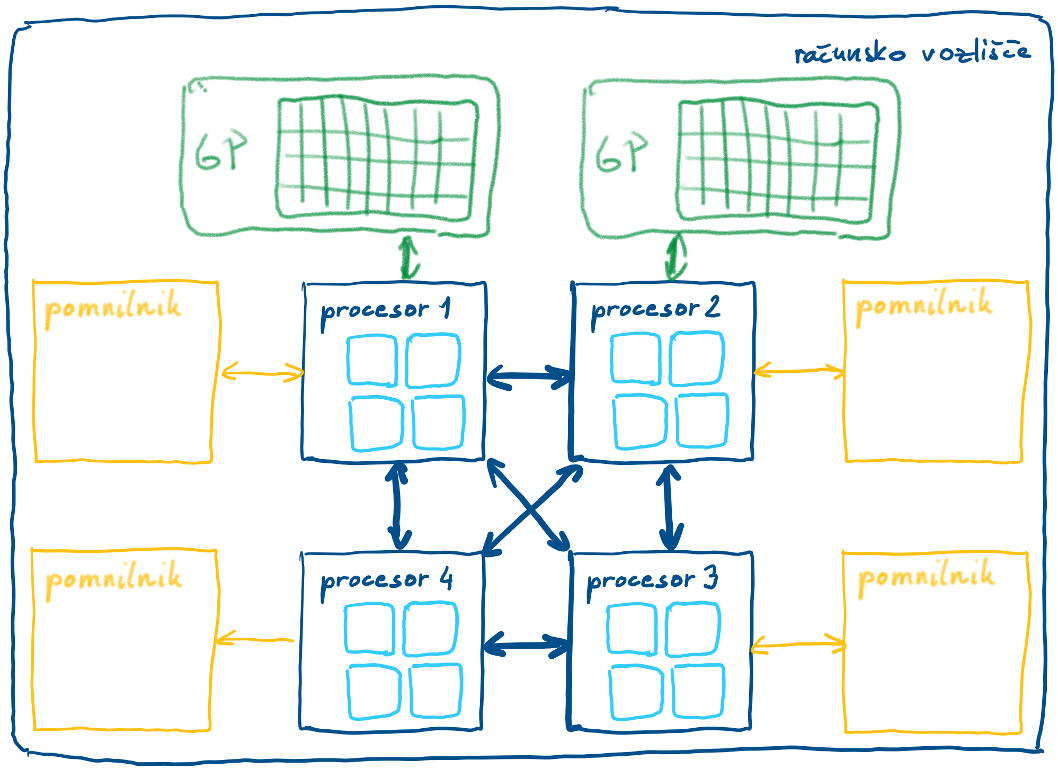

A computer cluster is composed of a set of nodes that are tightly connected in a network. The nodes work much like personal computers and consist of the same components: processors, memory, and input/output devices. Cluster nodes, of course, contain more components of higher performance and quality, but they usually lack I/O devices such as a keyboard, mouse, and display. As mentioned above, a cluster contains several types of nodes, and the structure of each node depends on its role in the system. The following node types are important for users:

- head nodes,

- login nodes,

- compute nodes, and

- data nodes.

The head node keeps the whole cluster running in a coordinated manner. It runs programs that monitor the status of other nodes, distribute jobs to compute nodes, supervise job execution, and perform other management tasks.
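To make "distribute jobs to compute nodes" more concrete, here is a minimal sketch of the kind of decision the head node makes. It assumes a simple first-fit policy: each job is placed on the first healthy node that still has enough free cores. The `Node`, `Job`, and `dispatch` names and the node names are hypothetical; production workload managers (Slurm is a common example) use far more elaborate policies with priorities, reservations, and backfilling.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    total_cores: int
    used_cores: int = 0
    healthy: bool = True  # status as reported by (hypothetical) node monitoring

    @property
    def free_cores(self) -> int:
        return self.total_cores - self.used_cores


@dataclass
class Job:
    job_id: str
    cores: int


def dispatch(jobs: list[Job], nodes: list[Node]) -> dict[str, Optional[str]]:
    """First-fit placement: each job goes to the first healthy node with enough free cores."""
    placement: dict[str, Optional[str]] = {}
    for job in jobs:
        target = next(
            (n for n in nodes if n.healthy and n.free_cores >= job.cores), None
        )
        if target is None:
            placement[job.job_id] = None  # no room anywhere: the job stays queued
        else:
            target.used_cores += job.cores
            placement[job.job_id] = target.name
    return placement


nodes = [Node("cn01", 128), Node("cn02", 128, healthy=False), Node("cn03", 128)]
jobs = [Job("A", 100), Job("B", 64), Job("C", 28), Job("D", 200)]
print(dispatch(jobs, nodes))
# {'A': 'cn01', 'B': 'cn03', 'C': 'cn01', 'D': None}
```

Note how the unhealthy node `cn02` is skipped and job `C` fills the 28 cores left on `cn01`, while job `D` asks for more cores than any single node has and waits.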

Users access the cluster through a login node using the SSH protocol. The login node enables users to transfer data and programs to and from the cluster; prepare, monitor, and manage jobs for compute nodes; reserve computational resources on compute nodes; log in to compute nodes; and perform similar activities.

Jobs prepared on the login nodes are executed on compute nodes. Various types of compute nodes are available, including standard nodes with processors only, nodes with a larger amount of memory, and nodes equipped with accelerators such as general-purpose graphics processing units (GPUs). Compute nodes can also be organized into groups, or partitions.
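Partitions let a job be routed to the kind of node it actually needs. The sketch below assumes three hypothetical partitions (`cpu`, `largemem`, `gpu`) with made-up node sizes and picks the first one whose nodes can satisfy a single-node request; it illustrates the idea only and does not reproduce any real cluster's configuration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Partition:
    name: str
    cores_per_node: int
    memory_gb_per_node: int
    gpus_per_node: int


# Hypothetical partitions: names and sizes are illustrative, not those of a real cluster.
PARTITIONS = [
    Partition("cpu", cores_per_node=128, memory_gb_per_node=256, gpus_per_node=0),
    Partition("largemem", cores_per_node=128, memory_gb_per_node=1024, gpus_per_node=0),
    Partition("gpu", cores_per_node=64, memory_gb_per_node=512, gpus_per_node=4),
]


def choose_partition(cores: int, memory_gb: int, gpus: int = 0) -> Optional[str]:
    """Return the first partition whose nodes can satisfy a single-node request."""
    for p in PARTITIONS:
        if (
            cores <= p.cores_per_node
            and memory_gb <= p.memory_gb_per_node
            and gpus <= p.gpus_per_node
        ):
            return p.name
    return None  # the request does not fit on any single node


print(choose_partition(64, 128))         # cpu
print(choose_partition(64, 800))         # largemem
print(choose_partition(32, 64, gpus=2))  # gpu
```

Listing the general-purpose partition first means scarce high-memory and GPU nodes are used only by jobs that actually need them.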

Data and programs are stored on data nodes. The data nodes together form a distributed file system, such as Ceph, which is accessible from all login and compute nodes. Files transferred to the cluster through a login node are stored in this distributed file system.
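A distributed file system spreads each file over many data nodes and usually keeps several copies. The following is a simplified sketch of that idea, assuming hash-based placement with replicas on consecutive nodes; it is not Ceph's actual algorithm (Ceph uses a more elaborate placement scheme called CRUSH), and the file name, node names, and tiny chunk size are hypothetical.

```python
from __future__ import annotations

import hashlib

CHUNK_SIZE = 4  # bytes per chunk; tiny for illustration, real systems use much larger objects


def place_chunks(
    filename: str, data: bytes, data_nodes: list[str], replicas: int = 2
) -> list[tuple[int, list[str]]]:
    """Split a file into chunks and choose `replicas` distinct data nodes for each chunk."""
    if not 1 <= replicas <= len(data_nodes):
        raise ValueError("replicas must be between 1 and the number of data nodes")
    placement = []
    for offset in range(0, len(data), CHUNK_SIZE):
        chunk_id = offset // CHUNK_SIZE
        key = f"{filename}:{chunk_id}".encode()
        # A hash of the chunk name picks the first node, so any client can recompute it.
        start = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(data_nodes)
        # Replicas go to the following nodes (wrapping around), so copies never share a node.
        nodes = [data_nodes[(start + r) % len(data_nodes)] for r in range(replicas)]
        placement.append((chunk_id, nodes))
    return placement


for chunk_id, nodes in place_chunks("results.csv", b"hello, distributed fs", ["dn1", "dn2", "dn3", "dn4"]):
    print(chunk_id, nodes)
```

Because placement is computed from the chunk name rather than looked up in a central table, every login and compute node can locate the data independently, and losing one data node still leaves a copy of every chunk.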

In computer clusters, nodes are placed in server racks, which greatly facilitate the arrangement and organization of hardware. Each rack contains multiple blades, with each blade capable of housing multiple nodes. This modular design enables easy system expansion and maintenance, as blades can be replaced without disrupting the operation of the entire rack or cluster.

Racks and blades of the Vega supercomputer.

All nodes are interconnected through high-speed networks, typically an Ethernet network and sometimes also an InfiniBand (IB) network for efficient communication between compute nodes. InfiniBand is designed for high throughput and low latency, which makes it ideal for connecting compute nodes in distributed computing, where fast inter-node communication plays a crucial role. It offers high data transfer rates (up to 400 Gbps or more) and extremely low latency, in part because it supports Remote Direct Memory Access (RDMA), which lets a node read from or write to another node's memory without involving the remote node's operating system. Ethernet, on the other hand, is a more universal and cost-effective solution, which is why it is often used to connect login nodes with compute nodes and data nodes.
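Why latency matters as much as bandwidth can be seen with the common latency-bandwidth model, where the time to send a message is T = latency + size / bandwidth. The sketch below assumes illustrative figures: 400 Gbps with about 1 µs latency for InfiniBand and 25 Gbps with about 20 µs latency for Ethernet. These are assumptions chosen for the example, not measured values of any particular cluster.

```python
def transfer_time_s(message_bytes: float, bandwidth_gbps: float, latency_us: float) -> float:
    """Simple latency-bandwidth model: T = latency + size / bandwidth."""
    bandwidth_bytes_per_s = bandwidth_gbps * 1e9 / 8
    return latency_us * 1e-6 + message_bytes / bandwidth_bytes_per_s


# Illustrative parameters (assumptions, not measurements of a specific system).
NETWORKS = {
    "InfiniBand": {"bandwidth_gbps": 400, "latency_us": 1.0},
    "Ethernet": {"bandwidth_gbps": 25, "latency_us": 20.0},
}

for size in (8, 1_000_000_000):
    for name, params in NETWORKS.items():
        t = transfer_time_s(size, **params)
        print(f"{name:10s} {size:>13,d} B: {t * 1e6:12.2f} us")
```

For small messages, such as the many short exchanges in tightly coupled parallel programs, the time is dominated by latency; for large transfers it is dominated by bandwidth. InfiniBand is favourable in both regimes, which is why it is used between compute nodes.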

Network connections between nodes of the Vega supercomputer.

Node Architecture

The vast majority of today's computers follow the von Neumann architecture. In the processor, a control unit coordinates the operation of the system: it reads instructions and operands from memory and writes results back to memory. The processor executes instructions in an arithmetic logic unit (ALU), assisted by registers (e.g., for tracking program flow or storing intermediate results). The memory stores both instructions and data (operands), while input/output units transfer data between the processor, memory, and external devices.
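The fetch-decode-execute cycle described above can be illustrated with a toy machine. This is a minimal sketch with a hypothetical four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), a program counter, and a single accumulator register; the key von Neumann property it shows is that one memory holds both the program and its data.

```python
def run(memory: list, max_steps: int = 1000) -> list:
    """Fetch-decode-execute loop over a single memory that holds both instructions and data."""
    pc = 0   # program counter register: address of the next instruction
    acc = 0  # accumulator register: holds intermediate results
    for _ in range(max_steps):
        opcode, operand = memory[pc]  # fetch: the control unit reads the next instruction
        pc += 1
        if opcode == "LOAD":          # decode and execute
            acc = memory[operand]     # read an operand from memory
        elif opcode == "ADD":
            acc += memory[operand]    # arithmetic performed by the ALU
        elif opcode == "STORE":
            memory[operand] = acc     # write the result back to memory
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
    raise RuntimeError("step limit exceeded")


# Addresses 0-3 hold instructions, addresses 4-6 hold data: the same memory stores both.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2, 3, 0,
]
print(run(program)[6])  # 5
```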

For a long time, computers had a single processor, or central processing unit (CPU), which became increasingly powerful over time. To meet the growing demand for processing power and energy efficiency, processors have been divided into multiple cores, which operate in parallel and share a common memory. In addition, some cores can execute several instruction streams concurrently, a capability known as hardware threads or simultaneous multithreading (Intel calls it hyper-threading). In modern computer clusters, compute nodes typically have multiple processor sockets. These processors and their cores operate in parallel and share a common memory, which is physically distributed among the processors to improve efficiency: each processor accesses its own part of the memory faster than the parts attached to the other processors. For example, many compute nodes in the NSC cluster are equipped with four tightly interconnected AMD Opteron 6376 processors, each with 16 cores, for a total of 64 cores per node. Compute nodes in the Maister and Trdina clusters have 128 cores per node.

Similarly, compute nodes in the Vega cluster are equipped with two tightly interconnected AMD Epyc 7H12 processors, each with 64 cores, for a total of 128 cores per node.
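The core counts above follow from a simple product: sockets × cores per socket, multiplied further by the number of hardware threads per core if simultaneous multithreading is in use. The sketch below reproduces the figures quoted for the NSC and Vega nodes. The two-threads-per-core line is a hypothetical case, since the text does not say whether SMT is enabled on these nodes, and the per-blade figure uses the three nodes per blade mentioned in the Vega blade caption.

```python
def cores_per_node(sockets: int, cores_per_socket: int, threads_per_core: int = 1) -> int:
    """Logical CPUs per node = sockets x cores per socket x hardware threads per core."""
    return sockets * cores_per_socket * threads_per_core


print(cores_per_node(4, 16))         # NSC node, 4 x AMD Opteron 6376 -> 64
print(cores_per_node(2, 64))         # Vega node, 2 x AMD Epyc 7H12 -> 128
print(cores_per_node(2, 64, 2))      # same node if two threads per core were enabled -> 256
print(3 * cores_per_node(2, 64))     # one Vega blade with three such nodes -> 384
```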

A blade of the Vega supercomputer containing three compute nodes, each with two central processing units, memory, fast solid-state drives, and an InfiniBand network connection.

Computational Accelerators

Some nodes are additionally equipped with computational accelerators. Today, we have a diverse selection of accelerators such as:

- Graphics Processing Units (GPU),

- Field Programmable Gate Arrays (FPGA), and

- Quantum Computers (QC).

The primary task of graphics cards is to relieve the processor of rendering graphics to the display. When rendering an image, they must perform numerous independent calculations for millions of pixels. Their architecture also proves suitable for solving other computational problems; used this way, they are called general-purpose graphics processing units (GPGPUs). They excel whenever the same kind of calculation must be performed on large amounts of data with few dependencies, for example in deep learning and video processing. Because the architecture of graphics processing units differs significantly from that of regular processors, existing programs must be substantially modified to run efficiently on them.
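The reason programs must be restructured for GPUs is that work has to be expressed as many small, independent threads. The sketch below imitates that programming model in plain Python, with index names mirroring CUDA's `blockIdx`, `blockDim`, and `threadIdx`: each "thread" computes one output pixel from its global index. The `launch` function is a hypothetical sequential stand-in; a real GPU would execute the threads in parallel.

```python
from __future__ import annotations

import math


def brighten_kernel(
    block_idx: int, block_dim: int, thread_idx: int,
    pixels: list[float], out: list[float], gain: float,
) -> None:
    """Work of one GPU thread: compute exactly one output pixel, independently of all others."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(pixels):                     # guard: the last block may be only partly used
        out[i] = min(1.0, pixels[i] * gain)


def launch(kernel, n: int, block_dim: int, *args) -> None:
    """Sequential stand-in for a GPU launch of enough blocks to cover n elements."""
    grid_dim = math.ceil(n / block_dim)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


pixels = [0.1, 0.4, 0.6, 0.9, 0.2]
out = [0.0] * len(pixels)
launch(brighten_kernel, len(pixels), 2, pixels, out, 1.5)
print(out)
```

Because no pixel depends on any other, the order in which threads run does not matter, which is exactly the property that lets a GPU run thousands of them at once.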

FPGA accelerators offer a high degree of flexibility and low power consumption. They are capable of processing data with low latency. Users can customize the architecture for specific problems. This programmability is achieved through a network of configurable logic cells and interconnections that enable the implementation of various digital circuits. They are frequently used for rapid prototyping and as accelerators for various applications such as neural networks, cryptographic algorithms, genomics, and much more.
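The "configurable logic cells and interconnections" can be pictured as lookup tables (LUTs) whose stored truth table decides which function they compute. This is a simplified sketch, assuming 2-input cells (real FPGA logic cells typically have more inputs, often four to six): the same kind of cell is configured once as XOR and once as AND, and wiring the two together yields a half adder. All names are hypothetical.

```python
from __future__ import annotations

from typing import Callable


def make_lut(truth_table: dict[tuple[int, int], int]) -> Callable[[int, int], int]:
    """A 2-input lookup table: its behaviour is defined entirely by the stored truth table."""
    table = dict(truth_table)
    return lambda a, b: table[(a, b)]


# "Configuring" the fabric: identical cells become XOR or AND depending on their contents.
xor_cell = make_lut({(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0})
and_cell = make_lut({(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1})


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Interconnect two configured cells into a half adder: returns (sum, carry)."""
    return xor_cell(a, b), and_cell(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", half_adder(a, b))
```

Reprogramming an FPGA amounts to loading different truth tables and different interconnections, which is why the same chip can be turned into a circuit tailored to one specific problem.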

Quantum computers represent an entirely new computing paradigm based on quantum bits (qubits). Unlike classical bits, which take the value 0 or 1, qubits can be in a superposition of both values simultaneously. This should allow quantum computers to solve certain problems, such as factoring large numbers, optimization, and simulation of quantum systems, far more efficiently than classical computers. Although quantum computers are still at an early stage of development, they are expected to revolutionize computing in the future.
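Superposition can be made concrete with a tiny classical simulation of one qubit. This sketch represents the qubit as two complex amplitudes for the states 0 and 1, applies a Hadamard gate to put it into an equal superposition, and then samples measurements, which always return a classical 0 or 1. The function names are hypothetical, and simulating n qubits this way needs 2^n amplitudes, which is exactly why classical machines struggle to imitate large quantum computers.

```python
from __future__ import annotations

import math
import random

# A single qubit is a pair of complex amplitudes (alpha, beta) for the states 0 and 1,
# with |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state: tuple[complex, complex]) -> tuple[complex, complex]:
    """Hadamard gate: turns state 0 into an equal superposition of 0 and 1."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """Probabilities of measuring 0 and 1."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(state: tuple[complex, complex], rng: random.Random) -> int:
    """Measurement collapses the superposition to a classical 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)
print(probabilities(plus))  # both approximately 0.5
rng = random.Random(42)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print(counts)
```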

Supercomputer Power Supply

Powering computer clusters is challenging due to high reliability requirements and substantial energy consumption. A reliable power supply is crucial for the operation of critical systems and often requires redundant power lines and uninterruptible power supplies. Despite more efficient hardware, total energy consumption continues to rise due to increasing demands for computing power, storage capacity, and network bandwidth. Advances in cooling allow equipment to be packed more densely, which further increases consumption: at the time of writing, the world's most powerful supercomputer requires about 30 MW of electrical power to operate, while Slovenia's most powerful supercomputer, Vega, requires approximately 2 MW. Managing these challenges requires careful planning, an adaptable distribution network, and regular maintenance without operational interruptions.
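To put these power figures into perspective, energy is power multiplied by time. The sketch below assumes, as a simplification, that a system draws its quoted power continuously for a whole year (the `utilisation` parameter can scale this down for periods of lower load).

```python
HOURS_PER_YEAR = 365 * 24  # 8760 h


def annual_energy_mwh(power_mw: float, utilisation: float = 1.0) -> float:
    """Energy = power x time; utilisation scales the result for periods of lower load."""
    return power_mw * HOURS_PER_YEAR * utilisation


print(annual_energy_mwh(2))   # Vega at ~2 MW continuously -> 17520.0 MWh
print(annual_energy_mwh(30))  # a 30 MW system -> 262800.0 MWh
```

Under this assumption, Vega alone would use roughly 17.5 GWh per year, and a 30 MW system about fifteen times as much.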

Power management system of the Vega supercomputer.

Cooling

Cooling is a crucial part of computer cluster operation, because processors and accelerators consume enormous amounts of energy, practically all of which ends up as heat. Efficient heat dissipation is essential, as overheating can lead to reduced performance, hardware damage, or even complete system failure. The most common cooling methods in computer clusters are air cooling, where fans direct cool air through server cabinets, and liquid cooling, which is becoming increasingly popular due to its energy efficiency. Liquid cooling uses a coolant (usually water or a water-glycol mixture) that circulates through pipes and removes heat directly from processors and other key components. Some supercomputing centers also use immersion cooling, where entire components are submerged in a thermally conductive but electrically non-conductive liquid. Liquid-based systems are more energy-efficient because they transfer heat better, and they often allow excess heat to be reused for heating buildings or in other industrial processes. Compute nodes in the Vega cluster are liquid-cooled, while data nodes are air-cooled.
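How much coolant a liquid-cooling loop needs follows from the heat balance Q = ṁ · c_p · ΔT, where Q is the heat removed, ṁ the coolant mass flow, c_p its specific heat, and ΔT the temperature rise of the coolant. The sketch below assumes plain water (c_p ≈ 4186 J/(kg·K)) and a 10 K temperature rise, and uses Vega's roughly 2 MW as an upper bound on the heat to remove; in reality part of that load is air-cooled, and glycol mixtures have a lower specific heat.

```python
WATER_CP = 4186.0  # specific heat of water, J/(kg*K); glycol mixtures have a lower value


def required_flow_kg_per_s(heat_w: float, delta_t_k: float, cp: float = WATER_CP) -> float:
    """Coolant mass flow needed to carry away heat_w watts: m_dot = Q / (c_p * dT)."""
    return heat_w / (cp * delta_t_k)


flow = required_flow_kg_per_s(2e6, 10.0)
print(f"{flow:.1f} kg/s")  # 47.8 kg/s
```

Since a kilogram of water is about a litre, this corresponds to roughly 48 litres of water per second; allowing a larger temperature rise reduces the required flow proportionally.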

Heat dissipation system of the Vega supercomputer.
