Introduction to Parallelism

What is Parallelism?

Parallelism is the simultaneous execution of multiple tasks, typically achieved by breaking a larger problem into smaller sub-tasks that can be processed independently. Unlike sequential execution, where tasks are performed one after another, parallel computing runs tasks at the same time on separate processing units to reduce overall computation time.

Parallelism manifests in several distinct forms:

- Task Parallelism: Different tasks or operations run simultaneously, each performing a unique function.

- Data Parallelism: A dataset is divided into smaller chunks, with each processor handling a portion of the data simultaneously.

- Pipeline Parallelism: A series of dependent operations is structured into stages. While one stage processes an item, the preceding stage can already start on the next item, so the processing of successive items overlaps.
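The first two forms can be contrasted in a short Python sketch. This is only an illustration under stated assumptions: the helper names (`chunked`, `data_parallel_sum`, `task_parallel_stats`) are hypothetical, and a thread pool from the standard library is used for simplicity. In CPython, threads do not speed up CPU-bound work because of the global interpreter lock, so a real workload would more likely use processes or a numerical library. The structure of the decomposition is the same either way.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, n_chunks):
    """Split data into n_chunks contiguous slices of near-equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def data_parallel_sum(data, n_workers=4):
    """Data parallelism: the SAME operation (sum) runs on each chunk."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum, chunked(data, n_workers)))
    # Combine the per-chunk results into the final answer.
    return sum(partial_sums)


def task_parallel_stats(data):
    """Task parallelism: DIFFERENT operations run at once on the same data."""
    tasks = (("min", min), ("max", max), ("count", len))
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, data) for name, fn in tasks}
        return {name: future.result() for name, future in futures.items()}
```

In the data-parallel function every worker does the same job on a different slice, and a final combining step merges the partial results. In the task-parallel function each worker does a different job on the same input.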

Modern computing architectures, including multi-core processors and distributed computing systems, leverage parallelism to enhance computational capabilities. As a result, understanding how to design efficient parallel algorithms is crucial for both software developers and researchers.

Historical Context of Parallelism in Society

Parallelism is not an innovation unique to computing—it has been a fundamental aspect of human organization for centuries. Many historical advancements in labor and industry have relied on parallel execution of tasks to maximize efficiency. This section explores key historical examples that illustrate the principles of parallelism long before the advent of modern computing.

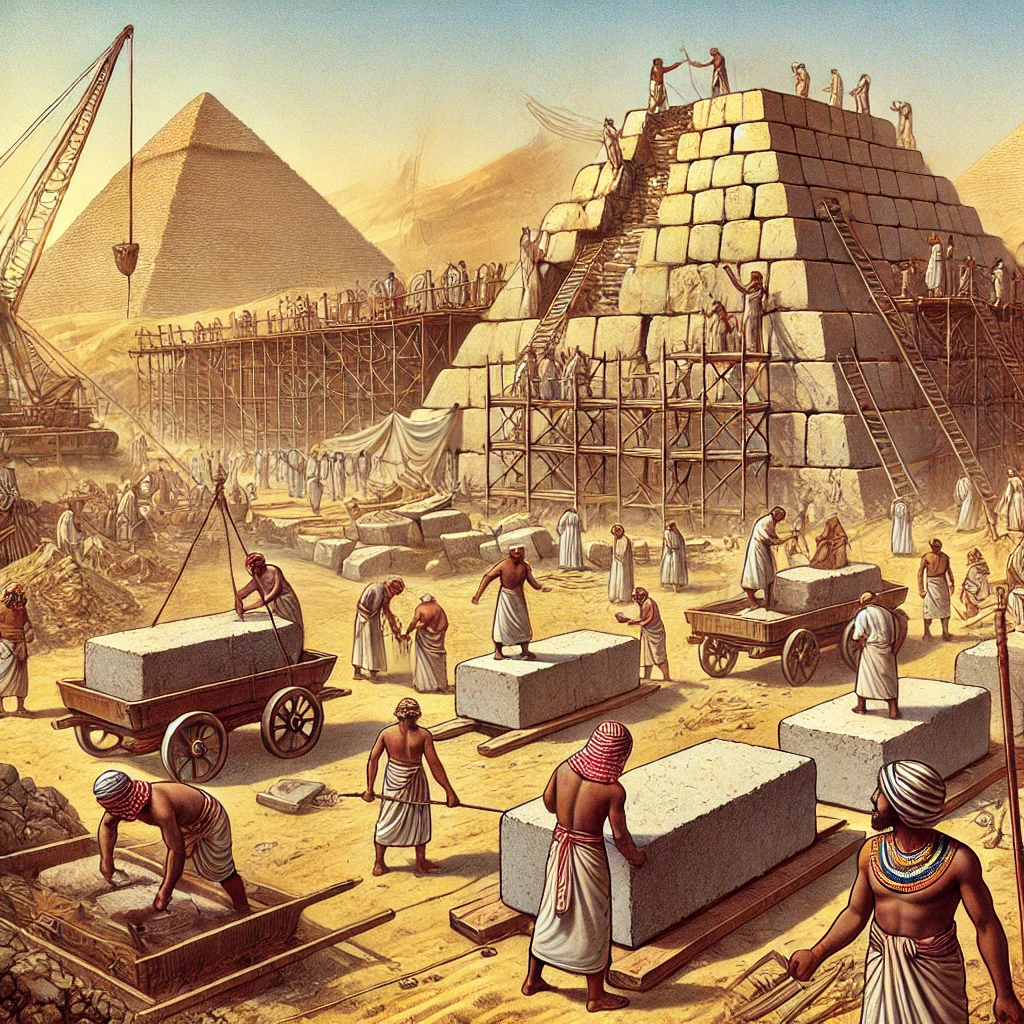

The Pyramids: A Large-Scale Parallel Endeavor

One of the most frequently cited historical examples of parallelism is the construction of the Egyptian pyramids. These monumental structures required the coordinated efforts of thousands of laborers, organized into groups with specialized roles:

- Quarry workers extracted and shaped stone blocks.

- Transport teams moved the blocks across great distances.

- Engineers and laborers worked together to assemble the pyramid structure in an organized manner.

By dividing labor across multiple groups working in parallel, construction efforts were significantly accelerated. This approach mirrors modern parallel computing, where computational workloads are split among multiple processors to optimize task execution.

Agricultural Parallelism: Labor Division in Farming

Agriculture has long depended on parallel execution of tasks to enhance productivity. Prior to mechanization, large-scale farming operations relied on distributed labor to perform essential tasks concurrently:

- Some workers plowed the fields while others planted seeds.

- Harvesting teams worked in parallel to collect crops efficiently.

- Irrigation and maintenance teams ensured the sustainability of the farmland.

With the advent of mechanized farming, the scale of parallelism increased dramatically. Modern agriculture employs fleets of machines, including harvesters and, increasingly, autonomous equipment such as drones, which operate concurrently to maximize efficiency, much as high-performance computing systems spread vast computational workloads across many processors.

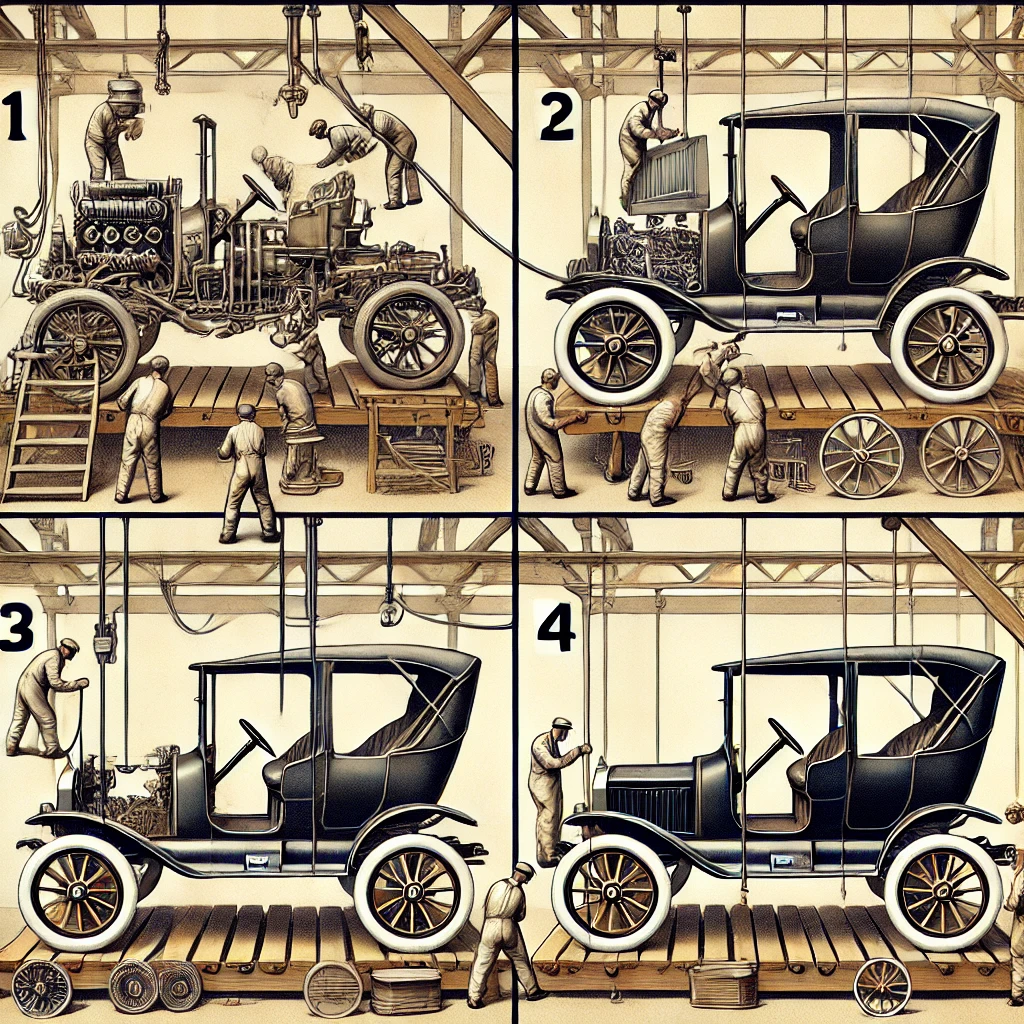

Industrial Revolution and the Assembly Line: Pipeline Parallelism

The principles of parallelism were further refined in the decades following the Industrial Revolution, most notably with the moving assembly line that Henry Ford introduced to automobile manufacturing in 1913. The assembly line is an exemplary case of pipeline parallelism, where a production process is divided into distinct stages:

- One worker assembles a specific component.

- The semi-finished product moves to the next station, where another worker adds further elements.

- All stations work at the same time, each on a different unit, which raises the rate at which finished products leave the line.

This transformation led to unprecedented efficiency gains in manufacturing and remains a cornerstone of modern industrial production. In computing, processor architectures use a similar technique: the stages of instruction processing (fetch, decode, execute) operate concurrently on different instructions, which increases instruction throughput even though each individual instruction still passes through every stage.
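The assembly-line idea can be sketched in software with one thread per stage and a queue between neighboring stages. This is a minimal illustration, not a model of a real processor pipeline: the names `stage`, `run_pipeline`, and `_DONE` are hypothetical, and the three stage functions in the usage example are arbitrary stand-ins for stations on the line.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the item stream


def stage(fn, inbox, outbox):
    """Run one pipeline stage: apply fn to each item and pass the result on."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)  # tell the next stage to stop as well
            return
        outbox.put(fn(item))


def run_pipeline(items, stage_fns):
    """Push items through stage_fns, one thread per stage, preserving order."""
    # One queue feeds each stage, plus a final queue that collects results.
    queues = [queue.Queue() for _ in range(len(stage_fns) + 1)]
    threads = [
        threading.Thread(target=stage, args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(stage_fns)
    ]
    for t in threads:
        t.start()

    # Feed the first stage; later stages start as soon as items reach them.
    for item in items:
        queues[0].put(item)
    queues[0].put(_DONE)

    results = []
    while True:
        out = queues[-1].get()
        if out is _DONE:
            break
        results.append(out)

    for t in threads:
        t.join()
    return results
```

For example, `run_pipeline([" 1", "2 ", " 3 "], [str.strip, int, lambda x: x * x])` strips, parses, and squares each item. While the third stage squares the first item, the second stage can already be parsing the next one. Because each stage is a single thread reading from a first-in, first-out queue, results come out in the same order the items went in.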

Modern Industry: Automation and Parallel Execution

Today, parallelism is deeply embedded in industrial automation. Robotic assembly lines, automated quality control, and logistics networks rely on parallel execution to streamline operations. Examples include:

- Automated car manufacturing: Multiple robotic arms perform welding, painting, and assembly tasks concurrently.

- Parallel logistics networks: Supply chain operations involve simultaneous processing of shipments across different regions.

- Smart factories: AI-driven automation optimizes production lines by dynamically allocating tasks to different robotic units.

These advancements closely parallel the architecture of supercomputing systems, where tasks are distributed among numerous computational units to achieve high efficiency.

Conclusion: The Foundations of Parallelism in Computing

From ancient construction projects to modern industrial automation, parallelism has consistently been leveraged to enhance efficiency and productivity. These historical examples provide a natural framework for understanding parallel computing, which applies the same principles to processing vast amounts of data and solving complex scientific problems.

As we transition from these real-world instances to computational models, we will explore how supercomputing harnesses parallelism to address some of the most demanding challenges in science, engineering, and artificial intelligence.