Heterogeneous systems¹

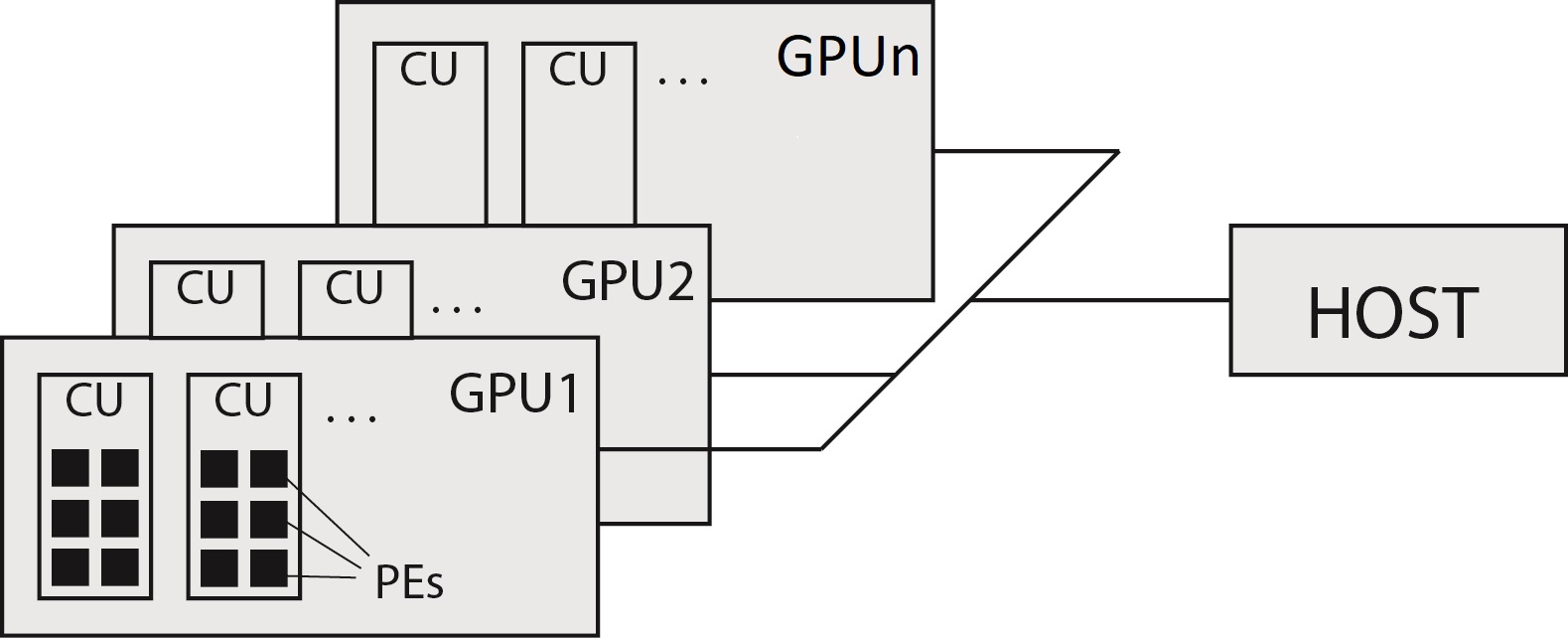

The earliest computers contained only central processing units (CPUs) designed to run general-purpose programs. Over the last decade, however, computers have increasingly included additional processing elements called accelerators. A heterogeneous computer system contains one or more accelerators in addition to the classic CPU and main memory. Accelerators are computing units that have their own processing elements and their own memory and are connected to the CPU and main memory via a fast bus. The computer that contains the CPU and main memory, and to which the accelerators are attached, is called the host. The figure above shows an example of a general heterogeneous system.

Several kinds of accelerators exist today (e.g., graphics processing units and field-programmable gate arrays). The most commonly used are graphics processing units (GPUs), originally designed to perform specialized graphics computations in parallel. Over time, GPUs have become more powerful and more general, so they can now be applied to general-purpose parallel computing tasks with excellent performance and high power efficiency. GPU accelerators have many dedicated processing units and dedicated memory. Their processing units are usually adapted to specific kinds of problems (for example, performing many array multiplications), which they can solve much faster than CPUs. In a heterogeneous computer system, GPU accelerators are referred to as devices.

Devices process data in their own memory (and their own address space) and, in principle, do not have direct access to the main memory on the host. The host, in turn, can reach the memory on the accelerators but cannot address it directly. Instead, it accesses device memory only through dedicated interfaces that transfer data over the bus between the device memory and the host's main memory.

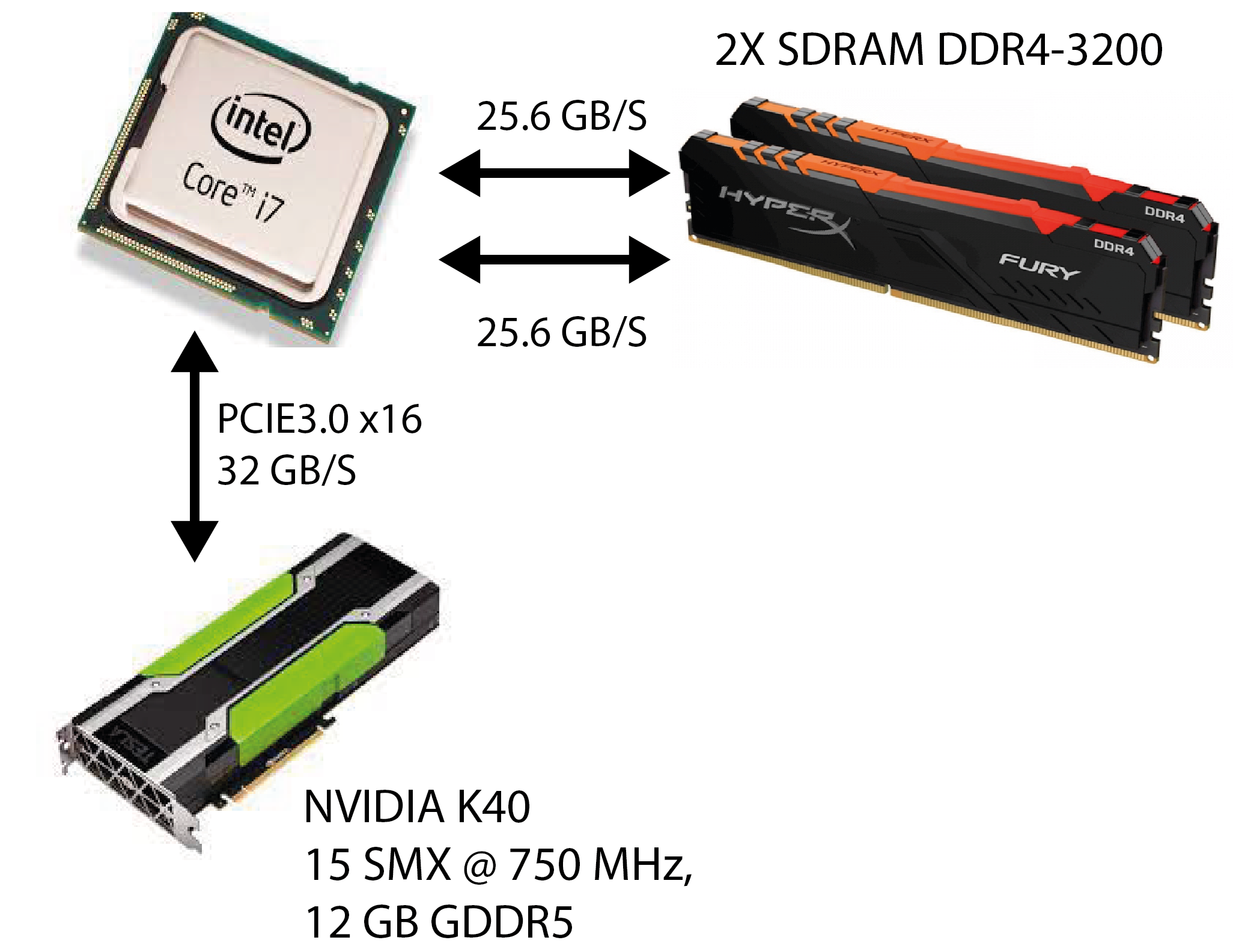

The figure above shows the organization of a heterogeneous system in a personal computer. The host is a desktop computer with an Intel i7 processor. The CPU and the GPU are discrete processing components connected by the PCI-Express bus within a single compute node. The GPU is not a standalone platform but a co-processor to the CPU.

The host's main memory consists of DDR4 DIMMs and is connected to the CPU via a memory controller and a two-channel bus. The CPU can access this memory directly (for example, via LOAD / STORE instructions) and keeps instructions and data in it. The maximum theoretical transfer rate between the DIMMs and the CPU is 25.6 GB/s per channel.

The accelerator in this heterogeneous system is the NVIDIA K40 graphics processing unit. It has many processing units (described later) and its own memory, in this case 12 GB of GDDR5. The processing units on the GPU can access this memory, but the CPU on the host cannot address it directly. Likewise, the processing units on the GPU cannot access the main memory on the host. The GPU (device) is connected to the CPU (host) via a high-speed 16-lane PCIe 3.0 bus, which allows data transfer at a maximum of 32 GB/s (counting both directions together). The CPU can transfer data between the main memory on the host and the GPU memory only via special interfaces.
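To get a feeling for what the quoted peak rates mean, the following minimal C sketch computes an idealized lower bound on transfer time. The function and macro names are hypothetical and introduced only for illustration; the rates are the theoretical maxima quoted in the text (with 1 GB taken as 10^9 bytes), so real transfers will be slower.

```c
#include <assert.h>

/* Theoretical peak rates quoted in the text, in bytes per second.
   Assumption: 1 GB = 1e9 bytes. */
#define DDR4_CHANNEL_BYTES_PER_S 25.6e9  /* one DDR4 channel */
#define DDR4_CHANNELS            2       /* two-channel memory bus */
#define PCIE3_X16_BYTES_PER_S    32.0e9  /* PCIe 3.0 x16 figure from the text */

/* Idealized lower bound on the time needed to move `bytes` over a link
   with the given peak rate (ignores latency and protocol overhead). */
double min_transfer_seconds(double bytes, double bytes_per_second)
{
    assert(bytes >= 0.0 && bytes_per_second > 0.0);
    return bytes / bytes_per_second;
}

/* Peak rate of the host's main memory when both channels are used. */
double host_memory_peak(void)
{
    return DDR4_CHANNELS * DDR4_CHANNEL_BYTES_PER_S;
}
```

For example, `min_transfer_seconds(12e9, PCIE3_X16_BYTES_PER_S)` gives 0.375 s as the best case for filling the 12 GB of device memory over the bus, while `host_memory_peak()` is 51.2 GB/s. Even in this ideal case, moving data to the device is far from free, which is why host code tries to keep transfers to a minimum.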

Homogeneous vs Heterogeneous

A homogeneous system uses one or more processors of the same architecture (e.g., Intel x86 CPUs) to execute an application. A heterogeneous system instead uses a suite of processor architectures, assigning each task to the architecture to which it is best suited and thereby improving performance.

Programming heterogeneous systems

The goal of heterogeneous computing is not to replace CPU computing with GPU computing. Each approach has advantages for certain kinds of computational problems. For example, parts of computationally intensive applications often exhibit a large amount of data parallelism, and GPUs are used to accelerate these data-parallel parts. CPU computing is good for control-intensive tasks, and GPU computing is good for data-parallel, computation-intensive tasks. If a program has a small data size, sophisticated control logic, and a low degree of parallelism, the CPU is a good choice because of its ability to handle complex logic and instruction-level parallelism. If instead the program processes a vast amount of data and exhibits massive data parallelism, the GPU is the right choice because it has many processing elements suited to data-parallel tasks.

For optimal performance, we therefore need to use both the CPU and the GPU in our application, executing the sequential parts on the CPU and the intensive data-parallel parts on the GPU. Writing code this way ensures that the characteristics of the GPU and CPU complement each other, making better use of the computational power of the heterogeneous system. A heterogeneous application thus consists of two parts:

- Host code, and

- Device code.

Host code

The host code runs on CPUs. An application executing on a heterogeneous platform is typically initialized by the CPU. The host code is responsible for managing the environment, code, and data for the device before offloading compute-intensive tasks to the device.

We will implement the host code in the C programming language during the workshop. The host code is an ordinary C program whose entry point is the function main(). The tasks of the host code are to:

- determine what devices are in the system,

- prepare the necessary data structures for the selected device,

- transfer data to the device,

- transfer the compiled device code to the device and launch it,

- transfer data from the device upon completion of the device code.
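The sequence of host tasks above can be sketched in plain C without any GPU. This is only a model, not real device code: the type `mock_device` and all function names are hypothetical, a separately allocated buffer stands in for device memory, and `memcpy` stands in for transfers over the bus.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a device: it owns a separate buffer that
   the host touches only through the copy functions below. */
typedef struct {
    float *memory;  /* models device memory */
    size_t count;   /* capacity in floats */
} mock_device;

/* Prepare the data structures (here: a buffer) on the selected device. */
int device_alloc(mock_device *dev, size_t count)
{
    dev->memory = malloc(count * sizeof(float));
    dev->count = dev->memory ? count : 0;
    return dev->memory ? 0 : -1;
}

/* Explicit copies model the transfers between host and device memory. */
void copy_to_device(mock_device *dev, const float *host, size_t count)
{
    assert(count <= dev->count);
    memcpy(dev->memory, host, count * sizeof(float));
}

void copy_to_host(float *host, const mock_device *dev, size_t count)
{
    assert(count <= dev->count);
    memcpy(host, dev->memory, count * sizeof(float));
}

/* "Launch" the device code: it works only on device memory. */
void launch_scale(mock_device *dev, size_t count, float factor)
{
    for (size_t i = 0; i < count; i++)
        dev->memory[i] *= factor;
}

/* The host-side sequence from the list. Returns 0 on success. */
int scale_on_device(float *data, size_t count, float factor)
{
    mock_device dev;                     /* device discovery is trivial here */
    if (device_alloc(&dev, count) != 0)  /* prepare data structures */
        return -1;
    copy_to_device(&dev, data, count);   /* transfer data to the device */
    launch_scale(&dev, count, factor);   /* launch the device code */
    copy_to_host(data, &dev, count);     /* transfer the results back */
    free(dev.memory);
    return 0;
}
```

Calling `scale_on_device(v, 4, 2.0f)` on an array `v` of four floats doubles each element in place. In an actual CUDA program, these roles are played by runtime calls such as `cudaGetDeviceCount`, `cudaMalloc`, `cudaMemcpy`, and `cudaFree`, together with a kernel launch.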

Device code

The device code runs on GPUs. To support joint CPU and GPU execution of an application on heterogeneous systems, NVIDIA designed a programming model called CUDA. We will implement programs for devices in the CUDA C language, which is the C language with some extensions. Programs written for the device are first compiled on the host. The host then transfers them to the device (along with the data and arguments), where they are executed. A program running on the device is referred to as a kernel. Kernels run in parallel: a large number of processing units on the device execute the very same kernel.
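The idea that many processing units execute the very same kernel, each on its own piece of data, can be illustrated with a small plain-C model. The names below are hypothetical, and the serial loop in `launch_vector_add` only stands in for units that would run concurrently on a real device.

```c
#include <assert.h>
#include <stddef.h>

/* Kernel body: the work of ONE processing unit, identified by `index`.
   Every unit runs this same function on a different element. */
void vector_add_kernel(size_t index, const float *a, const float *b,
                       float *c, size_t n)
{
    if (index < n)  /* guard: more units may be started than there are elements */
        c[index] = a[index] + b[index];
}

/* Hypothetical launcher: a serial loop standing in for `units`
   processing units that would execute the kernel in parallel. */
void launch_vector_add(size_t units, const float *a, const float *b,
                       float *c, size_t n)
{
    for (size_t index = 0; index < units; index++)
        vector_add_kernel(index, a, b, c, n);
}
```

Because no iteration depends on another, the iterations could be executed in any order or all at once, which is what makes this computation data-parallel. In CUDA C, the kernel would be marked `__global__`, the index would typically be computed from `blockIdx.x`, `blockDim.x`, and `threadIdx.x`, and the loop would be replaced by a kernel launch using the `<<<...>>>` syntax.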

¹ © Patricio Bulić, University of Ljubljana, Faculty of Computer and Information Science. The material is published under the CC BY-NC-SA 4.0 license.